There’s no question that some smartphone cameras can now produce images that easily rival those taken with a traditional camera. Yet the results of the DXOMARK Insights Portrait Study, held in Paris last summer, showed that one aspect of image quality continues to challenge many smartphone camera manufacturers: inconsistent perceived image quality in the rendering of skin tones.

What’s behind this perception and the variation in smartphone performance?

What we discovered

In our previous article (Smartphone portrait photography and skin-tone rendering study: Results and trends), we shared the conclusions of our study. The study was conducted on 405 scenes, and participants included 83 everyday consumers, 30 professional photographers, and 10 image quality experts.

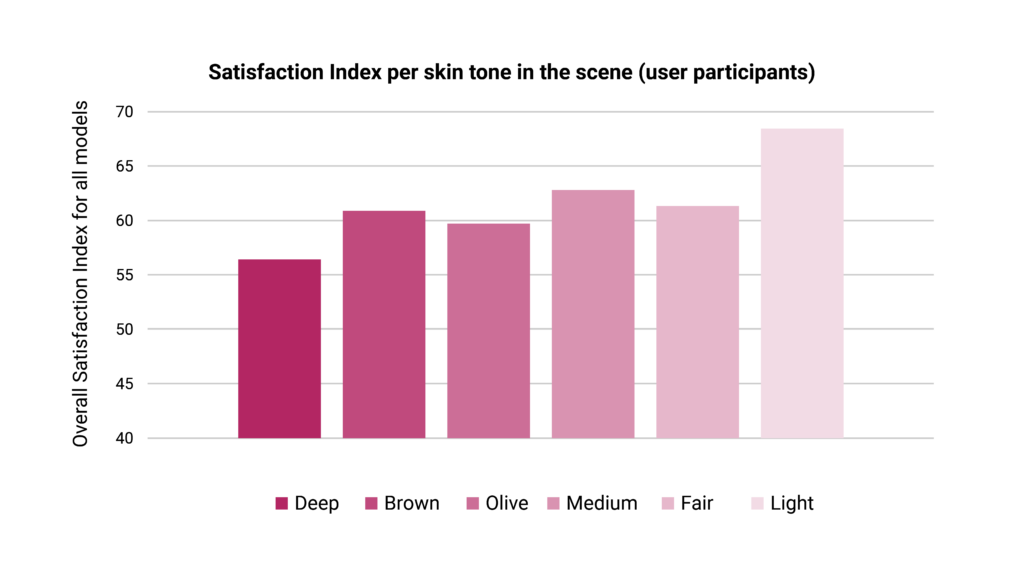

DXOMARK experts developed the Satisfaction Index, a metric that quantifies user preferences when viewing an image and measures the level of satisfaction of respondents. It takes into account several factors and is scored on a scale of 0 to 100, with 0 indicating that the image was rejected by more than 50% of respondents and 100 indicating no rejection at all.
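To make the two ends of the scale concrete, here is a minimal sketch. The article does not give the actual formula, and the real index takes several factors into account, so the function name `satisfaction_index` and the linear interpolation between the two anchor points are assumptions made purely for illustration.

```python
def satisfaction_index(rejections: int, respondents: int) -> float:
    """Hypothetical 0-100 score derived from the rejection rate alone.

    Only the two anchor points come from the article:
    no rejection -> 100, rejection by more than 50% of respondents -> 0.
    The straight line between them is an assumption.
    """
    if respondents <= 0:
        raise ValueError("respondents must be positive")
    if not 0 <= rejections <= respondents:
        raise ValueError("rejections must be between 0 and respondents")

    rejection_rate = rejections / respondents
    # Scale so that a 50% rejection rate maps to 0, then clamp at 0
    # for anything above that threshold.
    return max(0.0, 100.0 * (1.0 - rejection_rate / 0.5))
```

With this toy mapping, an image rejected by a quarter of the respondents would land in the middle of the scale, and any image rejected by a majority would score 0.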

In particular, our study revealed that images containing darker skin tones consistently received a lower satisfaction rating. We discussed this finding in our previous article.

This correlation was not limited to specific groups; it held for all respondents, regardless of their skin tone or photography skills. Anyone can see the issues with lighting, exposure, and overall color rendering in these pictures. In other words, smartphones deliver less favorable renderings when the skin tone deviates from light/fair.

Technical bias and image quality

Having the latest hardware does not guarantee optimal performance. This was clearly evident from our study, in which different devices produced widely different results when shooting the same scene with the same level of difficulty.

We know that many factors play a part in image quality, but which ones affect the rendering of your smartphone images, particularly when it comes to skin tones? We can attribute the decrease in image quality related to skin tone to two technical causes:

- biases resulting from the dataset for the training of the AI model;

- biases resulting from tuning, including manufacturing settings and camera parameters.

This indicates a more significant point to keep in mind: Great image quality does not come from just adding an AI algorithm to a smartphone. The AI algorithms, just like other parameters, also need tight and relevant integration with the hardware.

The technical bias of AI

AI technology has become a popular tool for improving the quality of smartphone images. As part of their design, smartphones now use AI for a range of functions such as noise reduction, video stabilization, white balance adjustment, demosaicing, and more. Our DXOMARK Decodes article on this topic provides examples of the use of AI in smartphone cameras.

Scene detection is one area where AI in today’s smartphones excels and outperforms traditional image quality algorithms. How does it work? With deep learning and semantic segmentation, each element of a scene can be distinguished, and each pixel in an image can be classified into predefined categories, such as a scene or an object (the sky, a building, a person, etc.). What’s more, this analysis is performed in real time.
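The per-pixel classification step can be sketched as follows. This is only an illustration: on a real device the class scores would come from a trained neural network, whereas here they are hand-written numbers, and the label set `CLASSES` and the function name `segment` are hypothetical.

```python
from typing import List, Sequence

# Hypothetical label set; real segmentation datasets define many more classes.
CLASSES = ("sky", "building", "person")


def segment(scores: Sequence[Sequence[Sequence[float]]]) -> List[List[str]]:
    """Give each pixel the label of its highest-scoring class.

    `scores[row][col]` holds one score per entry in CLASSES.
    """
    return [
        [CLASSES[max(range(len(CLASSES)), key=lambda c: pixel[c])] for pixel in row]
        for row in scores
    ]


# A made-up 2x2 "image" of per-pixel class scores.
toy_scores = [
    [[0.90, 0.05, 0.05], [0.20, 0.70, 0.10]],
    [[0.10, 0.20, 0.70], [0.30, 0.30, 0.40]],
]
label_map = segment(toy_scores)
```

The resulting label map is what lets the camera treat regions differently, for example applying one kind of processing to pixels labeled as sky and another to pixels labeled as a person.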

Through machine learning, smartphone cameras are trained to recognize scenes faster and more accurately and adjust image processing accordingly.

How is AI trained? Using large datasets designed for teaching models to segment various real-world elements, such as ADE20K, Cityscapes, and MS COCO. However, because AI algorithms are trained on specific datasets, they can inherit the biases of those datasets, leading to image quality issues for users.

Coded Bias

Bias in AI algorithms, especially due to limited dataset coverage, is a significant problem.

In 2018, a paper titled “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification,” from the MIT Media Lab, found that commercially available image gender classification algorithms produce different results depending on the person’s gender and skin tone: “Darker females have the highest error rates for all gender classifiers ranging from 20.8% − 34.7%. For Microsoft and IBM classifiers lighter males are the best classified group with 0.0% and 0.3% error rates respectively. Face++ classifies darker males best with an error rate of 0.7%”.

In 2020, filmmaker and environmental activist Shalini Kantayya released the documentary Coded Bias. The film explores the issue of biased AI algorithms and features the work of MIT Media Lab researcher Joy Buolamwini.

When AI systems are applied in real-world scenarios, these biases can lead to unfair treatment and significantly affect the quality of the final image.
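Per-group error rates like those quoted from the Gender Shades paper come from breaking evaluation results down by group instead of reporting a single overall figure. The sketch below shows that idea with made-up records; the group names, the labels, and the function name `error_rate_by_group` are illustrative assumptions, not data or code from the paper or from our study.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def error_rate_by_group(records: Iterable[Tuple[str, str, str]]) -> Dict[str, float]:
    """Compute the share of wrong predictions separately for each group.

    Each record is (group, predicted_label, actual_label).
    """
    totals: Dict[str, int] = defaultdict(int)
    errors: Dict[str, int] = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Made-up detection results, for illustration only.
toy_records = [
    ("lighter", "face", "face"),
    ("lighter", "face", "face"),
    ("lighter", "face", "face"),
    ("lighter", "face", "face"),
    ("darker", "face", "face"),
    ("darker", "no face", "face"),
    ("darker", "face", "face"),
    ("darker", "face", "face"),
]
rates = error_rate_by_group(toy_records)
```

In this toy data the overall error rate is 12.5%, which hides the fact that every error falls in one group. Reporting the rates per group is what makes such a gap visible.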

Practical insight

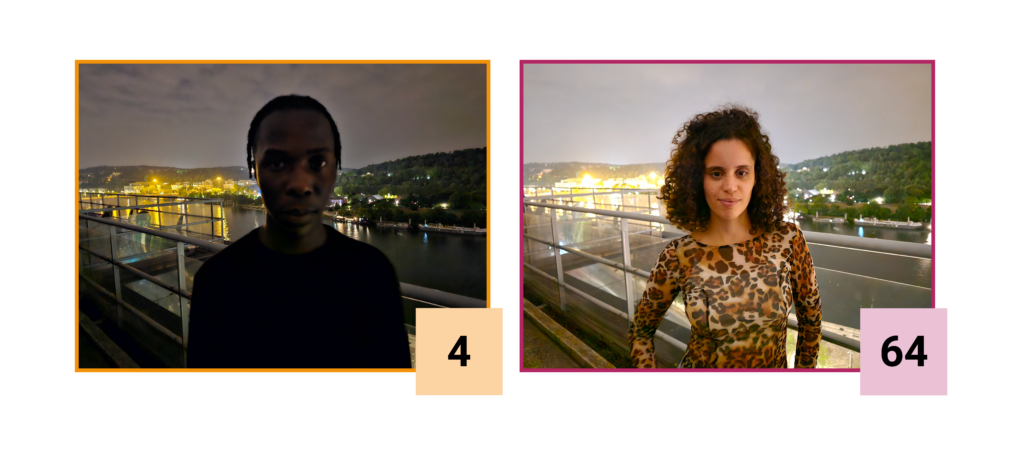

In the example below, the model with a light skin tone appears properly exposed and in focus. However, the model with a darker skin tone is out of focus and has a very low exposure.

The impact on user satisfaction is evident. The image on the right received positive feedback, while the image on the left was strongly rejected, resulting in a satisfaction rating of only 4 out of 100.

The exact cause of the failure is uncertain, but there is a high probability that the camera failed to detect the darker-skinned person. This suggests that the primary issues may lie in scene and content detection.

This scene recognition example illustrates the result of the embedded AI algorithm in our smartphones. However, biases can affect any AI algorithm if the dataset coverage is inadequate, resulting in poorer performance for certain use cases.

The use case above highlights the need for a broader approach to dataset collection, ensuring consistent quality across demographic groups.

The technical bias of tuning

How does tuning impact your smartphone photography rendering?

Smartphone camera development, mostly in Asia and in the United States, is fast-paced, fueled by the regular launches of new generations of smartphones each year.

Each manufacturer tunes its software to address specific issues, such as balancing texture and noise in low-light conditions and controlling blur in portrait mode.

Tuning is also used on displays – for more on this, see DXOMARK Decodes: Software tuning’s pivotal role in display performance.

Tuning strategies

Flagship device manufacturers assemble dedicated engineering teams to meticulously adjust camera settings and image processing pipelines to deliver a rendering that the user is happy with.

The impact of tuning on the final image quality is significant. Users may notice a change in photo and video quality after a software upgrade, ideally for the better. Tuning strategies are influenced by several factors, including:

- Scene illuminance

- Illuminant type

- Dynamic range

- Scene content

Every manufacturer may have a different approach in terms of signature and coverage, but all try to maximize user satisfaction. In applying their own methodologies, manufacturers will make choices that will prioritize certain use cases over others, resulting in variations in renderings among devices.

Practical insight

For example, the image processing pipeline for a night landscape is very different from one designed for a family photo taken in bright light.

Manufacturers strive to address as many user scenarios as possible, but resource constraints, time, and location can limit coverage.

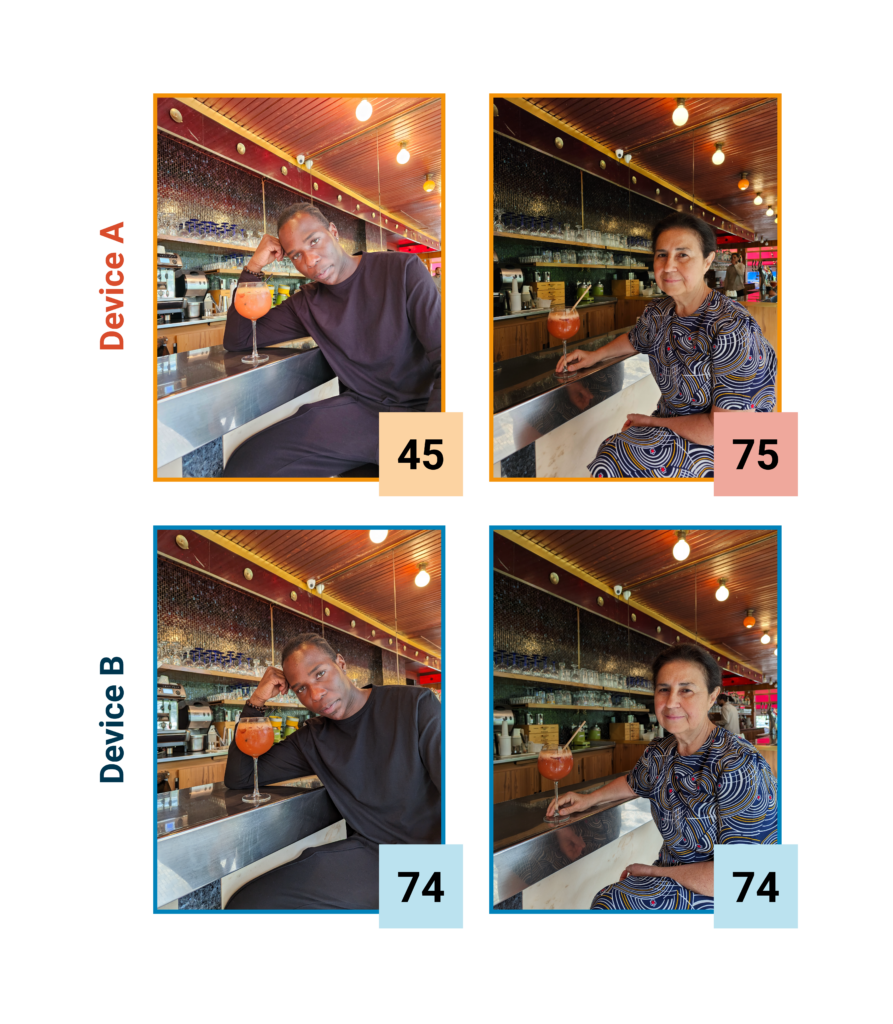

In the Device A vs. Device B comparison below, Device A’s rendering of the light skin tone received a high satisfaction index number (75), but when switching to a darker skin tone, the device significantly increases the overall exposure of the image. Device B, on the other hand, maintains a more balanced exposure adjustment, resulting in a higher overall user satisfaction score.

While it’s difficult to tune a camera for all use cases and for all skin tones, the discrepancies below help to show some of the tuning choices that the manufacturers made.

Contrary to what you might think, unappealing images are not always the result of shooting in challenging lighting conditions. While difficult environments present technical challenges, even seemingly straightforward scenes can pose problems if the manufacturer hasn’t adequately covered them.

Practical insight

Occasionally, however, serious failures occur when conditions change slightly. The following example images were taken with the same device. The image on the lower right has a noticeable orange tint, suggesting a white balance problem. Note that the device managed to provide a fairly accurate white balance on the other renderings.

The tuning phase is an extremely complex but short part of a device’s development process. Manufacturers must therefore make their strategic “choices” when they first launch the product.

DXOMARK’s assessment of the situation

In order to improve the current situation, it is crucial to conduct comprehensive testing, which should include:

- a range of use cases, including different settings, shooting conditions, and skin tones;

- a variety of annotations, using a feedback aggregation method and different points of view;

- multiple examples of expected renderings based on quality criteria and user preferences.

DXOMARK Insights conducts in-depth studies to gain a deeper understanding of user preferences and identify failure cases.

What can we learn from Insights?

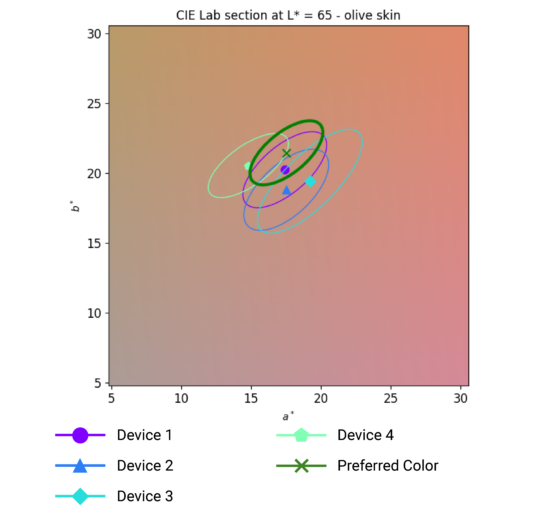

The graph below shows the measured skin color in a reference color space (in outdoor scenes featuring models with an olive skin tone). Three of the measurements come from three different flagship devices, and one rendering was taken and edited by a professional photographer using a DSLR camera.

If you find this graph complex, don’t worry. There are only two key aspects to understand:

- The center for each device (represented by a circle, triangle, etc.) is the average color obtained in this type of scene.

- The ellipse indicates how consistently the device provides a repeatable rendering. A larger ellipse suggests greater variation in skin tones from one image to another.
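One common way to draw such a center and ellipse is from the mean and covariance of the measured colors. The sketch below assumes exactly that, in a two-dimensional color plane with one standard deviation per semi-axis. The article does not state how its ellipses were constructed, so the method, the function name `color_ellipse`, and the sample values are all assumptions.

```python
import math
from typing import List, Tuple


def color_ellipse(
    points: List[Tuple[float, float]],
) -> Tuple[Tuple[float, float], Tuple[float, float], float]:
    """Return (center, (semi_major, semi_minor), angle_in_degrees).

    The center is the average color; the semi-axes are one standard
    deviation along the principal directions of the sample covariance.
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two measurements")

    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n

    # Sample covariance matrix [[sxx, sxy], [sxy, syy]].
    sxx = sum((x - mean_x) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - mean_y) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points) / (n - 1)

    # Closed-form eigenvalues of a symmetric 2x2 matrix.
    half_trace = (sxx + syy) / 2
    spread = math.hypot((sxx - syy) / 2, sxy)
    semi_major = math.sqrt(half_trace + spread)
    semi_minor = math.sqrt(max(0.0, half_trace - spread))

    # Orientation of the major axis relative to the first color axis.
    angle = math.degrees(0.5 * math.atan2(2 * sxy, sxx - syy))
    return (mean_x, mean_y), (semi_major, semi_minor), angle


# Made-up measurements that vary only along the first color axis.
center, axes, angle = color_ellipse([(1.0, 5.0), (3.0, 5.0), (5.0, 5.0)])
```

A device whose skin-tone measurements scatter widely from image to image produces larger semi-axes, which is the "larger ellipse" described above.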

The “preferred color” ellipse is obtained from all the color values of the preferred image for each scene. The graph shows that Device 3 produces a reddish skin-tone rendering, while Device 4’s rendering is more yellow, and both are farther away from the ellipse of the preferred color.

Another interesting point is that Device 3 has lower repeatability than the other devices, with significant variation in skin-tone rendering from one scene to another.

This example provides a glimpse into the extensive data collected in the DXOMARK Paris Insights study. Such data is essential for translating users’ preferences into technological requirements.

What’s next?

In summary, quality and customer satisfaction depend on user preferences and on camera performance in given use cases, which, in turn, depends on the device’s fine-tuning. The impact of new technologies must also not be overlooked because they can induce major changes in user expectations.

In order to further examine whether there are regional preferences and to identify the pain points associated with those preferences, DXOMARK is currently running a comparable survey in other locations across the globe and has recently completed a comprehensive study for India.

Stay tuned for the results of this survey, as it promises to provide valuable insights into the evolving landscape of software tuning and user preferences in different regions.