The early 2020s brought significant changes to our lives, including an increased reliance on laptops for work, education, and entertainment. For example, video calls have become a vital part of many people’s daily routines. However, not all laptops provide the same audio and video quality during these calls.

As another example, laptops have morphed into personal entertainment centers, allowing us to enjoy listening to music and watching movies. But here, too, discrepancies arise. Some laptops offer high-quality audio, while others leave us wanting more. Display color accuracy and brightness also vary, impacting enjoyment.

In this closer look, we will explore DXOMARK’s new laptop test protocol that is designed to thoroughly assess laptop video call and music & video performance. Join us as we delve into some of the intricacies of laptop performance in audio, camera, and display. Our aim is to provide you with valuable insights that will help you find a laptop that meets your requirements.

What we test

This protocol applies to any product intended to be used as a laptop. Multiple form factors are compatible with our tests and ranking, such as clamshell (most laptops), 360°, and 2-in-1 devices.

Testing philosophy

As with our other protocols, our testing philosophy for laptop evaluation is centered on how people use their laptops and the features that are most important to them. We research this information through our own formal surveys and focus groups, in-depth studies of customer preferences conducted by manufacturers, and interviews with imaging and sound professionals.

By knowing consumers’ preferences and needs, we can identify the camera, audio, and display attributes that affect the user experience. This allows us to build a protocol that tests these use cases and attributes using both objective scientific measurements and perceptual evaluations.

Use cases

For this initial version of our laptop audiovisual score, we selected two use cases that are representative of both professional and personal laptop use — Video Call and Music & Video.

- Video Call focuses on the ability of the device to show faces clearly and with stability and to capture and render voices in a manner that is pleasant and readily intelligible.

- Music & Video focuses on the quality of the display for videos in all conditions and the quality of the audio playback for both music and videos.

| | Video Call | Music & Video |
| --- | --- | --- |
| Test scenario description | Using the laptop with its integrated webcam, speakers, microphone(s) and screen for one-to-one and group calls using a video conferencing app. | Using the laptop with its integrated screen and speakers to watch videos or movies or to listen to music. |
| Usual place used | Office, home | Home |
| Some typical applications | Zoom, Microsoft Teams, Google Meet, Tencent VooV, FaceTime | YouTube, Netflix, Youku, Spotify, iTunes |
| Consumer pain points | Poor visibility of faces; low dynamic range (webcam); low intelligibility of voices; poor screen readability in backlit situations | Poor color fidelity; poor contrast; low audio immersiveness; poor high-volume audio performance; low display readability in lit environments |

Test conditions

| AUDIO | |
| --- | --- |
| Volume | Low (50 dBA @1m), Medium (60 dBA @1m), High (70 dBA @1m) |
| Content | Custom music tracks, custom voice tracks, selected movies |
| Apps | Capture: Built-in camera app; Playback: Built-in music/video player app; Duplex: Zoom |

CAMERA |

||

| Lighting conditions | 5 to 1000 Lux D65, TL83, TL84, LED |

|

| Distances | 30 cm to 1.20 m | |

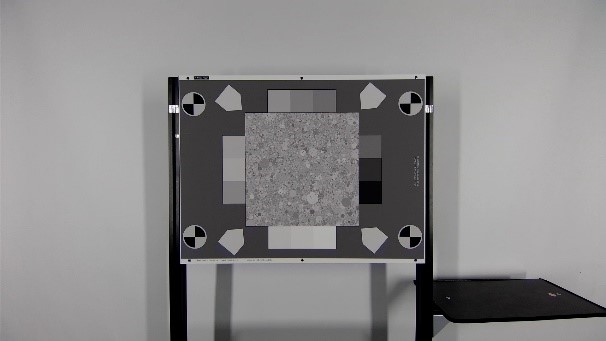

| Charts | DXOMARK test charts | |

| App | Built-in camera app |

| DISPLAY | |
| --- | --- |
| Lighting conditions | Dark room |
| Screen brightness | Minimum, 50%, Maximum |
| Contents | Custom SDR video patterns, custom HDR10 video patterns |
| Apps | Built-in video player app |

As we always evaluate objective measurements and perceptual evaluations in the context of an attribute, here are DXOMARK’s definitions of the attributes for the three laptop components that we test — audio, display, and camera.

Audio

For our video call use case, we look at audio capture, handling of full duplex situations, and audio playback. Quality audio capture provides good voice intelligibility, a good signal-to-noise ratio (SNR), satisfactory directivity, and good management of audio when the user interacts with the laptop (such as typing during a call).

Good laptop audio processing can also handle duplex situations (when more than one person is talking at the same time) without echoes or gating, in which necessary sounds are lost. For playback, we assess how faithfully sound sources are replicated, how intelligible voices are, how immersive the spatial reproduction is, and how well artifacts are controlled.

We evaluate the following audio attributes (also part of our smartphone Audio protocol):

List of Audio Sub-scores

Timbre describes a device’s ability to render the correct frequency response according to the use case and users’ expectations, taking into account bass, midrange, and treble frequencies, as well as the balance among them. Good tonal balance typically consists of an even distribution of these frequencies according to the reference audio track or original material. We evaluate tonal balance at different volumes depending on the use case. In addition, we look for unwanted resonances and notches in each of the frequency regions as well as for extensions at low- and high-end frequencies.

Dynamics covers a device’s ability to render loudness variations and to convey punch as well as clear attack and bass precision. Sharp musical notes and voice plosives sound blurry and imprecise with loose dynamics rendering, which can hinder the listening experience and voice intelligibility. This is also the case with movies and games, where action segments can easily feel sloppy with improper dynamics rendering. As dynamics information is mostly carried by the envelope of the signal, not only does the attack for a given sound need to be clearly defined for notes to be distinct from each other, but sustain also needs to be rendered accurately to convey the original musical feeling.

In addition, we also assess the signal-to-noise ratio (SNR) in capture evaluation, as it is of the highest importance for good voice intelligibility.
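As a rough illustration of what an SNR figure expresses, the sketch below computes the ratio in decibels from two sets of samples. It is a minimal sketch, not DXOMARK's measurement procedure: the function name `snr_db` and the idea of comparing a speech segment against a noise-only segment are assumptions made for this example.

```python
import math

def snr_db(signal, noise):
    """Return the signal-to-noise ratio in dB.

    `signal` holds samples captured while a voice is present and `noise`
    holds samples captured while only background noise is present
    (hypothetical inputs chosen for illustration).
    """
    def mean_power(samples):
        # Mean of the squared sample values.
        return sum(s * s for s in samples) / len(samples)

    return 10.0 * math.log10(mean_power(signal) / mean_power(noise))

speech = [0.5, -0.5, 0.5, -0.5]
background = [0.05, -0.05, 0.05, -0.05]
print(round(snr_db(speech, background), 1))  # 20.0
```

A speech amplitude ten times larger than the noise amplitude corresponds to 20 dB, which is why small reductions in background noise translate into noticeably better intelligibility.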

Spatial describes a device’s ability to render a virtual sound scene as realistically as possible. It includes perceived wideness and depth of the sound scene, left/right balance, and localizability of individual sources in a virtual sound field and their perceived distance. Good spatial conveys the feeling of immersion and makes for a better experience whether listening to music or watching movies.

We also evaluate capture directivity to assess the device’s ability to adapt the capture pattern to the test situation.

The volume attribute covers the loudness of both capture and playback (measured objectively), as well as the ability to render both quiet and loud sonic material without defects (evaluated both objectively and perceptually).

An artifact is any accidental or unwanted sound resulting from a device’s design or its tuning, although an artifact can also be caused by user interaction with the device, such as changing the volume level, play/pausing, typing on the keyboard, or simply handling it. Artifacts can also result from a device struggling to handle environmental constraints, such as wind noise during recording use cases.

We group artifacts into two main categories: temporal (e.g., pumping, clicks) and spectral (e.g., distortion, continuous noise, phasing).

Display

A laptop needs to provide users with good readability, no matter the lighting conditions. Its color rendition should be faithful in the SDR color space (and in the HDR color space for HDR-capable devices).

We evaluate the following display attributes (also part of our smartphone Display protocol):

List of Display Sub-scores

From the end-user’s point of view, color rendering refers to how the device manages the hues of each particular color, either by exactly reproducing what’s coded in the file or by tweaking the results to achieve a given signature. For videos, we expect that devices will reproduce the artistic intent of the filmmaker as provided in the metadata. We evaluate the color performance for both SDR (Rec. 709 color space) and HDR (BT.2020 color space) video content.
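One common way to put a number on color fidelity is a color difference between the measured color and the target color in CIELAB space. The sketch below uses the simple CIE76 formula (Euclidean distance in L\*a\*b\*). This is an assumption for illustration only: the article does not state which color-difference metric the protocol uses, and the function name is hypothetical.

```python
import math

def delta_e_cie76(lab_measured, lab_target):
    """CIE76 color difference: Euclidean distance between two (L*, a*, b*) triples."""
    return math.dist(lab_measured, lab_target)

# A patch that should be neutral gray but is rendered with a slight tint.
print(delta_e_cie76((50.0, 3.0, 4.0), (50.0, 0.0, 0.0)))  # 5.0
```

Smaller values mean the displayed color is closer to the reference.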

We evaluate minimum and maximum brightness to help us ascertain if a laptop can be used in low light and in bright, backlit environments.

We also evaluate a device’s brightness range, which gives crucial information about its readability under various kinds and levels of ambient lighting. A high maximum brightness allows a user to use the laptop in bright environments (outdoors, for example), and a low minimum brightness ensures that the user can set the brightness according to their preference in a dark environment.

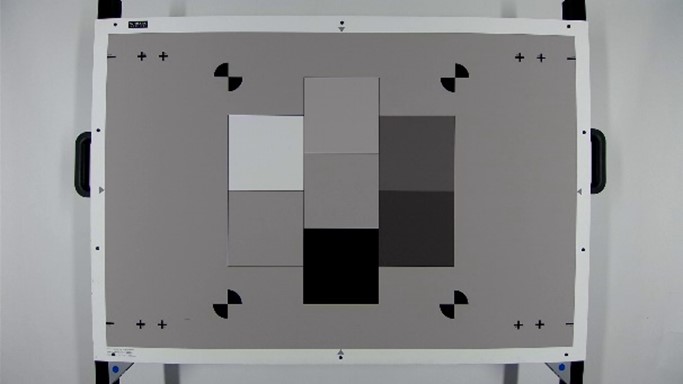

We evaluate maximum contrast using a checkerboard pattern, which also lets us see how blooming impacts display performance.
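A checkerboard contrast figure can be thought of as the ratio between the average luminance of the white squares and the average luminance of the black squares, measured while both are on screen at once. The following is a minimal sketch under that assumption; the function name and the sample readings are hypothetical and do not come from DXOMARK.

```python
def checkerboard_contrast(white_nits, black_nits):
    """Return the contrast ratio from luminance readings (cd/m2) taken on the
    white and black squares of a checkerboard pattern."""
    mean_white = sum(white_nits) / len(white_nits)
    mean_black = sum(black_nits) / len(black_nits)
    if mean_black <= 0:
        # A perfectly black reading gives an unbounded ratio.
        return float("inf")
    return mean_white / mean_black

# Hypothetical readings: light leaking from white squares (blooming) would
# raise the black readings and lower the resulting ratio.
ratio = checkerboard_contrast([400.0, 410.0, 390.0], [0.40, 0.41, 0.39])
print(f"{ratio:.0f}:1")
```

Because the black squares sit next to lit squares, this kind of measurement is sensitive to blooming in a way that a full-screen black measurement is not.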

We evaluate the electro-optical transfer function (EOTF), which represents the rendering of details in dark tones, midtones, and highlights. It should be as close as possible to that of the target reference screen but should adapt to bright lighting conditions to ensure that the content is still enjoyable.
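For HDR10 content, the reference EOTF is the perceptual quantizer (PQ) curve defined in SMPTE ST 2084, which maps a normalized signal value to an absolute luminance. The sketch below implements that reference curve so a measured luminance could be compared against it. How DXOMARK performs the comparison is not described in this article, so treat the function name and usage as illustrative assumptions.

```python
# Constants from the SMPTE ST 2084 (PQ) definition.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32

def pq_eotf(signal):
    """Map a normalized PQ signal value in [0, 1] to luminance in cd/m2."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be within [0, 1]")
    p = signal ** (1.0 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1.0 / M1)

# Reference luminance for a few signal levels, from dark tones to highlights.
for level in (0.25, 0.5, 0.75, 1.0):
    print(level, round(pq_eotf(level), 2))
```

A display whose measured luminance falls below this curve in dark tones would crush shadow detail, while one that rises above it would look washed out.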

We evaluate the uniformity of the laptop display both at maximum and minimum brightness to assess any uniformity defects that would be noticeable to the end-user.

We use a spectrophotometer to evaluate spectral reflectance level on laptop displays when turned off. Additionally, we use a glossmeter to measure the reflectance profile — for example, how diffuse reflectance is. These two measurements are important indicators of laptop readability in bright lighting environments.

Camera

To provide a good end-user experience, a laptop’s built-in camera has to provide a stable image throughout the call, and keep faces in focus and well exposed even in challenging lighting conditions. Viewers should be able to follow facial expressions and mouth movements that are perfectly synchronized with the audio.

We evaluate the following camera attributes (also part of our DSLR Sensor and smartphone Camera and Selfie protocols):

List of Camera Sub-scores

Exposure measures how well the camera adjusts to and captures the brightness of the subject and the background. It relates as much to the correct lighting level of the picture as to the resulting contrast. For this attribute, we also pay special attention to high dynamic range conditions, in which we check the ability of the camera to capture detail from the brightest to the darkest portions of a scene.

The color attribute is a measure of how faithfully the camera reproduces color under a variety of lighting conditions and how pleasing its color rendering is to viewers. As with exposure, good color is important to nearly everyone. Pictures of people benefit greatly from natural and pleasant skin-tone representation.

The texture attribute focuses on how well the camera can preserve small details. This has become especially important because camera vendors have introduced noise reduction techniques that sometimes lower the amount of detail or add motion blur. For some applications, such as videoconferencing in low-bandwidth network conditions, the preservation of tiny details is not essential. But people who use their webcam in high-end videoconferencing applications with decent bandwidth will appreciate a good texture performance score.

Texture and noise are two sides of the same coin: improving one often leads to degrading the other. The noise attribute indicates the amount of noise in the overall camera experience. Noise comes from the light of the scene itself, but also from the sensor and the electronics of the camera. In low light, the amount of noise in an image increases rapidly. Some cameras increase the integration time, but poor stability or post-processing can produce images with blurred rendering or loss of texture. Image overprocessing for noise reduction also tends to decrease detail and smooth out the texture of the image.

The artifacts attribute quantifies image defects not covered by the other attributes, caused by a camera’s lens, sensor, or in-camera processing. These can range from straight lines looking curved to strange multi-colored areas indicating failed demosaicing. In addition, lenses tend to be sharper at the center and less sharp at the edges, which we also measure as part of this sub-score. Other artifacts such as ghosts or halo effects can be a consequence of computational photography.

The focus attribute evaluates how well the camera keeps the subject in focus in varying light conditions and at multiple distances. Most laptops use a fixed-focus lens, though we expect to see autofocus cameras in laptops in the future. Our testing methodology applies to both fixed-focus and autofocus in all tested situations. When several people are at different distances from the camera, a lens design with a shallow depth of field implies that not all people will be in focus. We evaluate the camera’s ability to keep all faces sharp in such situations.

Test environments

Test environments are divided into two parts — lab scenes and natural or real scenes.

| Lab scenes | Real scenes | |

| Location | Audio, Camera & Display labs | Meeting rooms, living room, etc. |

| Main goal | Repeatable conditions | Real-life situations |

| Evaluations | Objective and Perceptual | Perceptual |

Lab setups: repeatable procedures and controlled environments

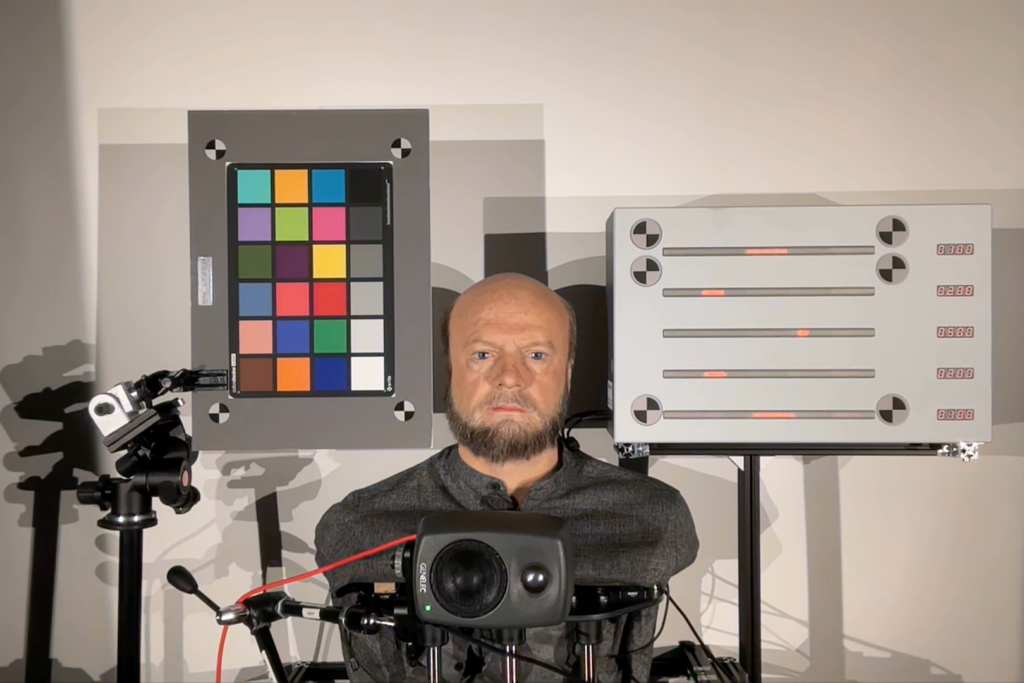

Video call lab

This setup tests the quality of a video-call capture from a single-user perspective for audio and video in multiple lighting conditions.

Items measured and/or evaluated:

- Camera

- Color (color checker)

- Face exposure (realistic mannequin)

- Face details (realistic mannequin)

- Exposure time (Timing box)

- Audio

- Voice capture

- Background noise handling

Test conditions

- Distance: 80 cm

- Light conditions: D65, LED, TL83, TL84, tungsten, mixed; 1 to 1000 lux

Equipment Used

- Image

- Realistic mannequin

- Color checker

- Timing box

- Automated Lighting system

- Audio

- Genelec 8010

- Genelec 8030 (x2)

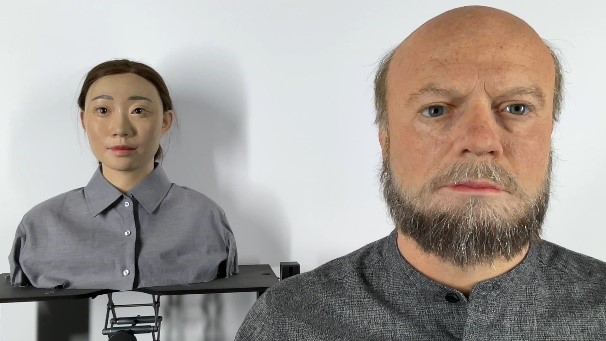

HDR Portrait setup (camera and audio)

This setup tests the quality of video call capture with two users in front of the computer for audio and video in multiple backlit conditions.

Items measured and/or evaluated:

- Camera

- Face exposure

- Face details

- Highlight recovery (entropy)

- White balance

- Skin tones

- Noise & Texture

- Artifacts

- Audio

- Voice capture (including spatial)

- Background noise handling

Test conditions

- Distance: By framing (FoV dependent)

- Light conditions: 20 lux A, 100 lux TL84, 1000 lux D65

Equipment Used

- Image

- Realistic mannequin (x2)

- HDR Chart

- Automated Lighting system

- Audio

- Genelec 8010 (x2)

- Genelec 8030 (x2)

Depth of field (camera only)

This tests the laptop camera’s ability to keep multiple users who are in front of the camera in focus during a video call at various distances. This involves moving one of the mannequins to the foreground or background to see whether the face remains focused.

Attributes evaluated

- Camera

- Focus / Depth of Field

- Noise

- Artifacts

Test conditions

- Distance: By framing (FoV-dependent)

- Light conditions: D65 1000 lux & 20 lux SME A

Equipment used

- Image

- Realistic mannequin (x2)

- Automated lighting system

DXOMARK Camera charts

| Attributes evaluated | Test conditions | Equipment used |
| --- | --- | --- |
| Texture, Color, Noise, Artifacts | Distance: By framing (FoV-dependent); Lighting: D65 1000 lux, TL84 5-1000 lux, LED 1-500 lux | DXOMARK chart, automated lighting system |
| Texture, Noise | Distance: By framing (FoV-dependent); Lighting: D65 1000 lux, TL84 5-1000 lux, LED 1-500 lux | Dead Leaves chart, automated lighting system |
| Focus / Depth of field | Distance: By framing (FoV-dependent); Lighting: D65 1000 lux, TL84 5-1000 lux, LED 1-500 lux | Focus chart, automated lighting system |

Read more about the Dead Leaves measurement in this scientific paper: https://corp.dxomark.com/wp-content/uploads/2017/11/Dead_Leaves_Model_EI2010.pdf

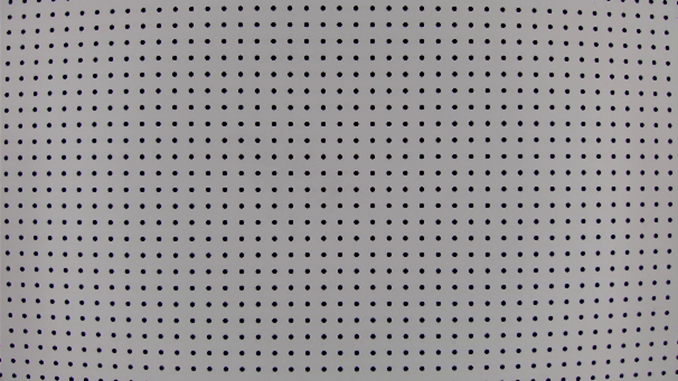

| Attributes evaluated | Test conditions | Equipment used |
| --- | --- | --- |
| Noise | Distance: By framing (FoV-dependent); Lighting: D65 1000 lux, TL84 5-1000 lux, LED 1-500 lux | Dots chart, automated lighting system |
| Resolution, Distortion | Distance: By framing (FoV-dependent); Lighting: 1000 lux D65 | Dots chart, automated lighting system |

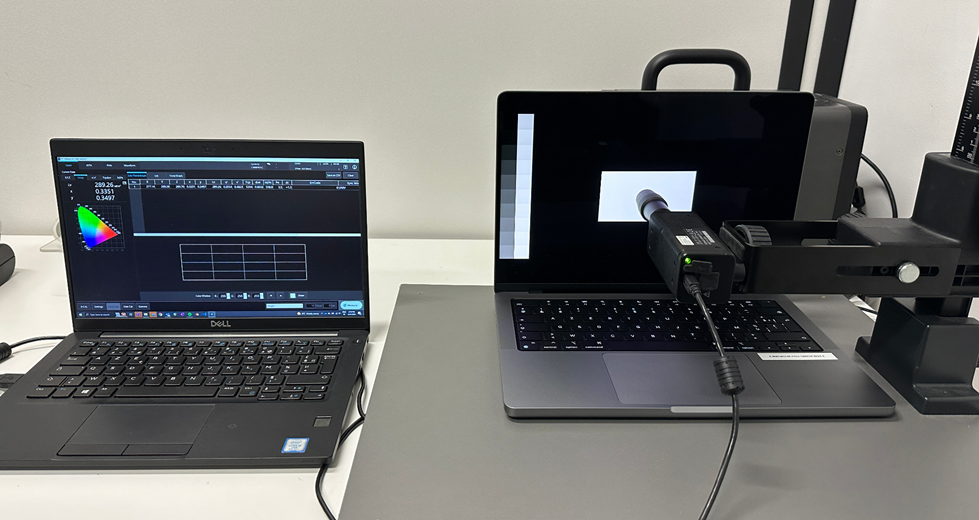

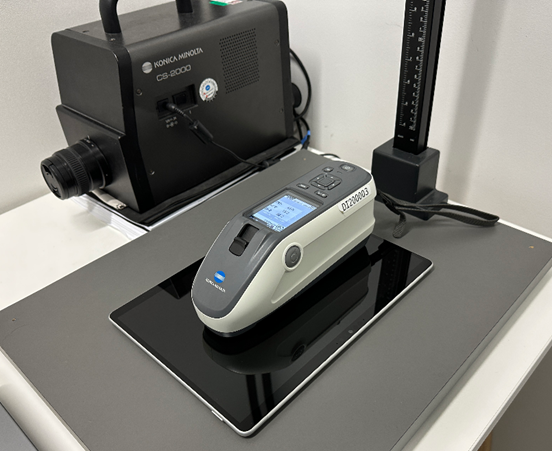

Presentation of Display-testing equipment

Laptop display tests are conducted in low-light conditions only. We also test color and EOTF for both SDR and HDR video. The reflectance and gloss measurements taken in low light are sufficient to indicate the display’s performance in brightly lit environments.

Display color analyzer

- Attributes measured

- Display — Readability

- Brightness

- Display — SDR and HDR

- Color gamut and rendering

- EOTF

- Equipment used

- Konica Minolta CA410

Spectrophotometer

- Attributes measured

- Display — Readability

- Reflectance

- Equipment used

- Konica Minolta CM-25d

Glossmeter

- Attributes measured

- Display – Readability

- Gloss & Haze

- Reflectance profile

- Equipment used

- Rhopoint Glossmeter

Presentation of recording lab setups

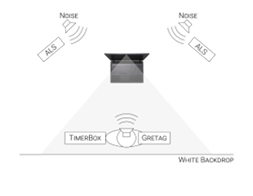

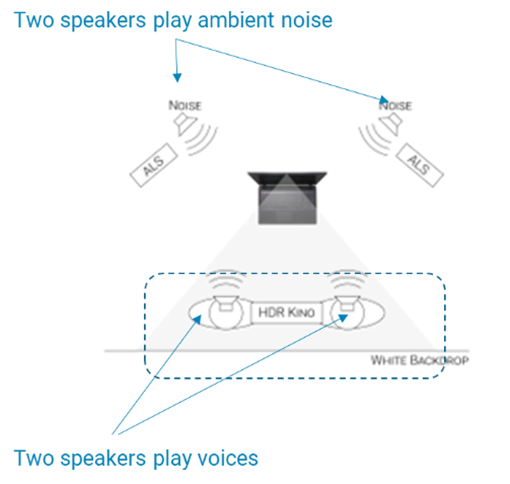

Video-call audio lab

This setup aims at testing the quality of the voices and sounds captured during a video call when multiple people are in the same room. The audio is captured using a popular videoconferencing application.

- Attributes evaluated

- Audio

- Duplex

- Equipment used

- Head Acoustics – 3PASS

- Yamaha HS7 (background noise)

- Genelec 8010 (voices)

Semi-anechoic room

This semi-anechoic room setup allows for sound to be captured and measured in optimal audio conditions, free of any reverberations and echoes.

- Attributes measured

- Audio

- Frequency response

- THD+N (distortion)

- Directivity

- Volume

- Equipment used

- Genelec 8361

- Earthworks M23R

- Rotating table

Laptop scoring architecture

To better understand consumer laptop preferences and usages, we recently conducted a survey with YouGov that showed laptops were used mostly for web browsing (76%), office work (59%), streaming video (44%), and listening to music (35%).

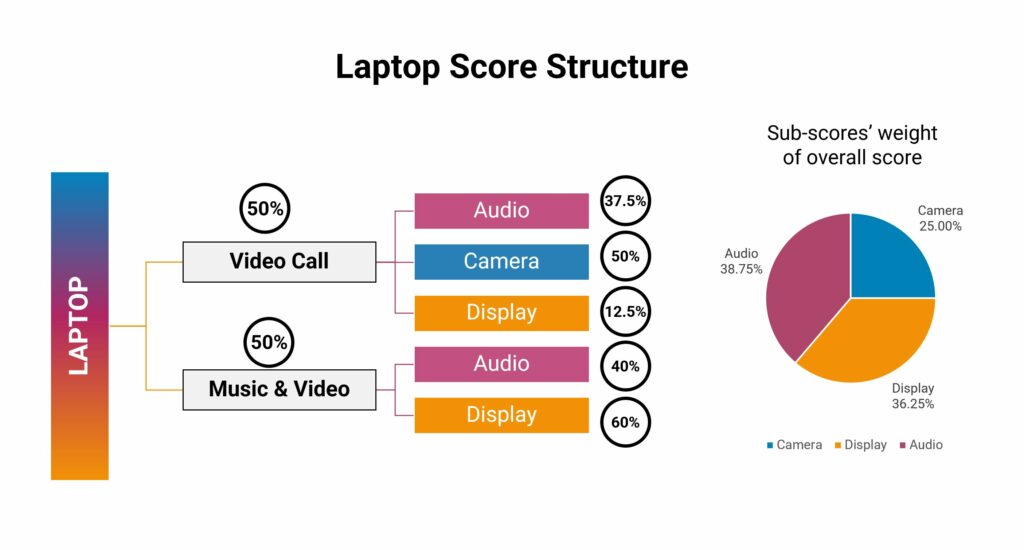

Our laptop overall score combines the equally weighted scores of both the Video Call and Music & Video use cases, which in turn are based on the use case and feature scores for camera, audio, and display.

Use case scores

Camera performance has the highest weight in our calculation of the Video Call score, as it is a major pain point for users right now. (We expect this feature to improve a lot in the coming years, as laptop makers are putting a lot of effort into bringing the quality of built-in cameras closer to that of smartphones and external webcams.) Audio comes next, as a video call cannot happen without it! We evaluate both voice playback and capture, but also “duplex” situations, in which more than one person is speaking at a time and which can cause significant problems in terms of intelligibility. Finally, we assess the display’s readability, as many laptops still do not handle bright situations correctly.

The Music & Video score comprises Display and Audio subscores. Display testing focuses on correct reproduction of colors and tones for both SDR and HDR video and movie content. Although SDR accounts for most content viewed on a laptop, video streaming platforms are providing more and more HDR content. We evaluate laptops both with and without HDR panels using HDR content. We apply a penalty to the HDR score of any laptop whose panel is not HDR-capable, as that can limit certain usages. We also evaluate audio as part of this use case.
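The structure described above can be sketched as a two-level weighted average. The only weighting stated in this article is that the two use cases count equally in the overall score, and that camera weighs most in Video Call, followed by audio, then display. Every numeric weight in the sketch below is a hypothetical placeholder chosen to respect that ordering, and the function names are illustrative, not DXOMARK's.

```python
# HYPOTHETICAL weights: the article gives only the ordering
# (camera > audio > display for Video Call), not the actual values.
VIDEO_CALL_WEIGHTS = {"camera": 0.5, "audio": 0.3, "display": 0.2}
MUSIC_VIDEO_WEIGHTS = {"display": 0.5, "audio": 0.5}

def weighted_score(sub_scores, weights):
    """Weighted average of the sub-scores named in `weights`."""
    total_weight = sum(weights.values())
    return sum(sub_scores[name] * w for name, w in weights.items()) / total_weight

def overall_score(video_call_score, music_video_score):
    """The two use-case scores are equally weighted, as stated in the article."""
    return (video_call_score + music_video_score) / 2

# Made-up sub-scores for illustration only.
video_call = weighted_score({"camera": 100, "audio": 120, "display": 90}, VIDEO_CALL_WEIGHTS)
music_video = weighted_score({"display": 110, "audio": 130}, MUSIC_VIDEO_WEIGHTS)
print(overall_score(video_call, music_video))
```

Keeping the weights in plain dictionaries makes it easy to see how a change in one component, such as a better webcam, propagates to the use-case score and then to the overall score.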

Feature scores

Besides the use-case scores, we calculate general feature scores for Audio, Camera, and Display. These scores are representative of the overall performance of the laptop for each individual audiovisual feature, independent of the use case. In practice, the camera score is the same as the Video Call camera score. For the display feature, we reuse the Music & Video display score; but for audio, we combine the scores from the Video Call and the Music & Video use cases.

Score structure

We scale the camera scores to have the same impact as audio and display scores in order to keep each feature score relevant for a direct evaluation of perceived quality in our use cases.

Conclusion

Testing a laptop takes about one workweek, spread across several laboratories and up to 20 lab setups.

We hope this article has given you a more detailed idea about some of the scientific equipment and methods we use to test the most important characteristics of your laptop’s video call and music & video performance.