DXOMARK first launched smartphone audio testing in October 2019, just as smartphone users were recording and consuming more video and audio content on their mobile devices. From listening to music or watching movies to recording concerts or meetings, smartphone audio technology has evolved, and so has the way smartphones are used for audio. DXOMARK engineers have kept up with these advancements and have adjusted the Audio protocol to keep it relevant to users. In this article, we’ll take you behind the scenes for an in-depth look at how we test the playback and recording capabilities of smartphones, covering the methods, tools, and use cases we rely on to evaluate audio playback and recording quality.

Test environments

Depending on the protocol, measurements may be done in different environments. While some recordings are deliberately made in real-life settings, indoors or outdoors, the vast majority of our measurements are conducted under laboratory conditions for greater consistency.

Using a ring of speakers in an acoustically neutral room, our engineers can simulate a wide range of environments for recording purposes. Likewise, a dedicated acoustically treated room is used for the perceptual evaluation of playback. Acoustic treatment of these rooms ensures a well-balanced frequency response.

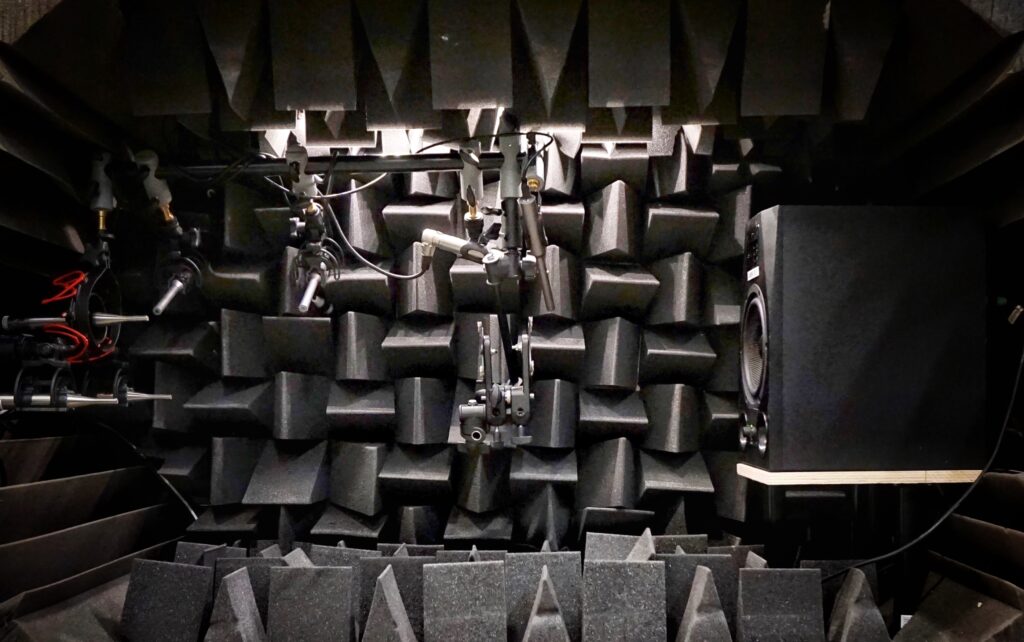

As most objective measurements require very strict conditions, we test our devices in anechoic settings, thanks to a specially designed anechoic box that eliminates most of the sound reflections inside it. Measurements requiring larger setups are made within our custom-made anechoic room, which accommodates a wide range of protocols requiring minimized sound reflections, such as our audio zoom protocol.

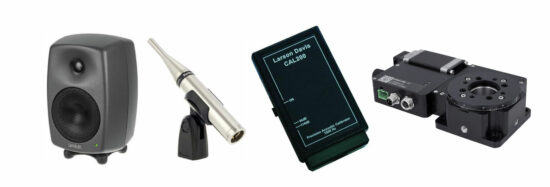

Objective testing tools

Most audio testing relies on the ability to both reproduce and capture sound precisely. This is why we use scientific-grade measurement microphones calibrated with a sound-level calibrator, along with carefully optimized loudspeakers as sound sources: both are crucial components of a controlled audio chain.

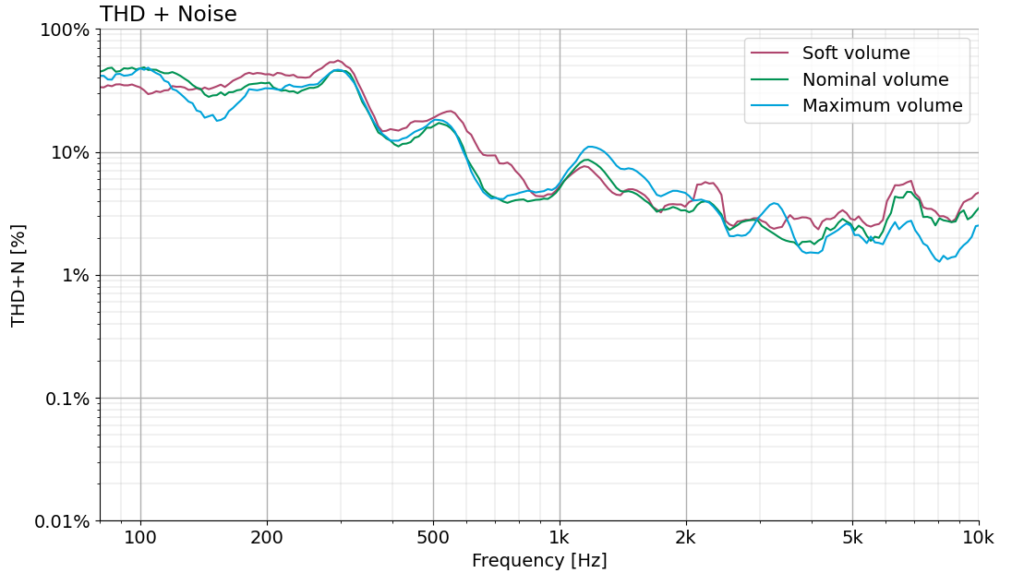

The device under test (DUT) can be secured with a clamp mounted on a stand, or with a magnetic holder. In some cases, the DUT is mounted on a custom-made rotating stand whose rotation is automated via computer-controlled servo motors, allowing for precise 360° measurements.

Our engineers process objective measurements with different software depending on the measurement type. Frequency responses, directivity, and THD+N are processed with custom Python libraries developed by our acoustic engineers, following state-of-the-art signal processing methods widely used in the audio industry. Other measurements, such as volume, rely on freeware tools like REW (Room EQ Wizard) for their straightforward workflows. Once processed, objective measurements are scored using internally developed algorithms that take into account criteria specific to each measurement (flatness/dispersion of the frequency response, loudness values, percentage of distortion by frequency band, etc.), carefully selected to best match the user experience and perceptual listening.
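Our processing libraries are internal, so the following is only a minimal sketch of one common THD+N approach: window a recording of a single test tone, remove the fundamental in the frequency domain, and compare the remaining power (harmonics plus noise) to the total. The function name `thd_n_percent` and the 20 Hz notch width are illustrative assumptions, not DXOMARK’s actual parameters.

```python
import numpy as np

def thd_n_percent(signal, fs, f0, notch_width_hz=20.0):
    """Estimate THD+N (%) of a recorded sine at f0 Hz.

    Everything outside a narrow notch around the fundamental (harmonics,
    noise) counts as distortion-plus-noise; the result is its RMS relative
    to the total RMS of the recording.
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()  # remove DC so it is not counted as noise
    spectrum = np.fft.rfft(x * np.hanning(len(x)))  # Hann window limits leakage
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    power = np.abs(spectrum[1:]) ** 2  # skip the DC bin
    fundamental = np.abs(freqs[1:] - f0) <= notch_width_hz / 2
    residual = power[~fundamental].sum()
    return 100.0 * np.sqrt(residual / power.sum())
```

For example, a 1 kHz tone with a second harmonic at 1% of its amplitude yields a THD+N of about 1%. Real measurements usually repeat this at several frequencies and levels, as in the three levels mentioned in the Playback protocol.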

Perceptual testing tools

While objective measurements give us hints about how a smartphone may sound, nothing reflects the user experience better than a thorough perceptual evaluation: the human ear is a complex, irreplaceable tool that provides unique qualitative information no instrument can capture. Objective and perceptual evaluations therefore complement and reinforce each other.

Listening accuracy is an essential skill of our experienced audio engineers, who also receive extensive training when they join. For playback, each DUT is evaluated comparatively against up to five other devices, as well as against studio monitors calibrated as a reference. Perceptual evaluations follow a strict protocol structured around discrete evaluation items to ensure precise results, and each evaluation goes through a careful two-pass check by different engineers to minimize bias.

Playback evaluations take place in an acoustically treated room. For the most part, devices are mounted on a semicircular arm on a stand, so that all comparison devices are equidistant from the engineer in charge of the evaluations.

Reflective panels are used to enhance the devices’ spatial rendering, which improves the evaluation of attributes such as stereo wideness and localizability.

Microphone evaluations are performed with studio headphones standardized across all our engineers, and they follow the same rigorous protocol as our playback evaluations.

All the smartphones previously tested in our labs can still be used as reference devices for perceptual evaluation, whether for playback, recording, or both. This helps keep our database of evaluations and scores consistent even after many years of testing.

Audio quality attributes

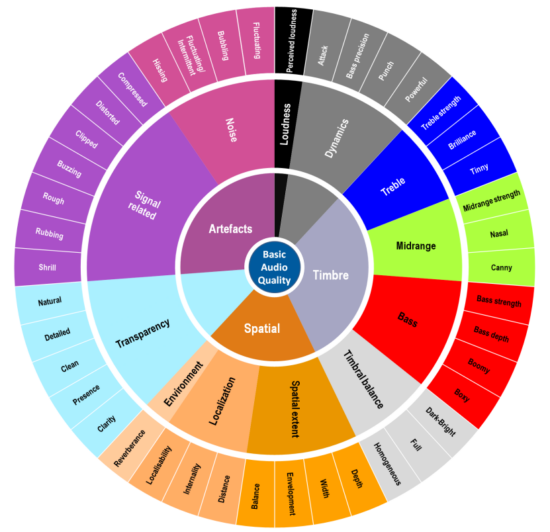

Our audio quality attributes have been defined in accordance with Report ITU-R BS.2399, “Methods for selecting and describing attributes and terms, in the preparation of subjective tests”, issued by the International Telecommunication Union (ITU) as part of an effort to standardize the vocabulary used to describe sound, as illustrated by the attribute wheel. Perceptual evaluation requires a shared understanding of the words we use and of their definitions.

Within these common descriptors, we can identify larger groups that form our main audio attributes. Each attribute is subdivided into the individual qualities that make it up, which we call sub-attributes.

Timbre

Timbre describes a device’s ability to render the correct frequency response according to the use case and users’ expectations, looking at bass, midrange, and treble frequencies, as well as the balance among them.

Good tonal balance typically means an even distribution of these frequencies, consistent with the reference audio track or original material. Tonal balance is evaluated at different volumes depending on the use case.

It is also important to look for unwanted resonances and notches in each frequency region, and to assess how far the response extends into the low and high ends of the spectrum.
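As a crude illustration of how resonances and notches could be flagged objectively, the sketch below compares each point of a measured response to its mean level within a band. Real analyses usually compare against a smoothed response or a target curve instead; the function name, the 100 Hz to 10 kHz band, and the 6 dB threshold are all hypothetical.

```python
import numpy as np

def response_deviation(freqs_hz, level_db, f_lo=100.0, f_hi=10000.0, threshold_db=6.0):
    """Flag resonances and notches in a measured frequency response.

    Compares each point in [f_lo, f_hi] to the mean level of that band and
    returns (standard deviation in dB, peak frequencies, notch frequencies).
    """
    f = np.asarray(freqs_hz, dtype=float)
    level = np.asarray(level_db, dtype=float)
    in_band = (f >= f_lo) & (f <= f_hi)
    band_f = f[in_band]
    deviation = level[in_band] - level[in_band].mean()
    peaks = band_f[deviation > threshold_db].tolist()      # candidate resonances
    notches = band_f[deviation < -threshold_db].tolist()   # candidate notches
    return float(deviation.std()), peaks, notches
```

The standard deviation acts as a simple flatness figure (0 dB for a perfectly flat band), while the two lists point to the frequency regions worth checking by ear.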

Dynamics

Dynamics covers a device’s ability to render loudness variations and to convey punch, clear attack, and bass precision. Dynamics are the cornerstone of concepts such as groove, precision, punch, and many more. Musical elements such as snare drums, pizzicato, or piano notes would sound blurry and imprecise with loose dynamics rendering, which hinders the listening experience. The same is true for movies and games, where action sequences can easily feel sloppy without proper dynamics rendering.

For a given sound, dynamics information is mostly carried by the envelope of the signal. Take a single bass line, for instance: not only does the attack need to be clearly defined for notes to be distinct from each other, but the sustain also needs to be rendered accurately to convey the original musical feeling.
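To make the notion of envelope concrete, here is a minimal sketch that computes a moving-RMS envelope and measures attack as the 10%-to-90% rise time of that envelope. This rise-time definition is one common convention assumed here; the function names and the 5 ms window are hypothetical and do not describe how our engineers evaluate dynamics, which is done perceptually.

```python
import numpy as np

def rms_envelope(x, fs, window_ms=5.0):
    """Moving-RMS envelope: one value per input sample."""
    n = max(1, int(fs * window_ms / 1000.0))
    power = np.convolve(np.asarray(x, dtype=float) ** 2, np.ones(n) / n, mode="same")
    return np.sqrt(power)

def attack_time_ms(x, fs, lo=0.1, hi=0.9):
    """Rise time of the envelope from lo to hi times its peak value, in milliseconds."""
    env = rms_envelope(x, fs)
    peak_idx = int(np.argmax(env))
    rising = env[: peak_idx + 1]  # only look at the envelope before its peak
    peak = env[peak_idx]
    # First samples where the envelope reaches each threshold on the way up
    t_lo = int(np.argmax(rising >= lo * peak))
    t_hi = int(np.argmax(rising >= hi * peak))
    return 1000.0 * (t_hi - t_lo) / fs
```

A note whose amplitude ramps up linearly over 50 ms gives an attack time of roughly 40 ms with this definition; a speaker that smears transients would stretch that value.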

As part of dynamics, we also test overall volume dependency, in other words, how attack, punch, and bass precision change with the user’s volume step.

For microphone evaluation, the Signal-to-Noise Ratio (SNR) is also assessed.

Spatial

Spatial describes a device’s ability to render a virtual sound scene faithful to reality.

It includes the perceived wideness and depth of the sound scene, left/right balance, the localizability of individual sources in the virtual sound field, and their perceived distance.

Unsurprisingly, monophonic playback on a smartphone is usually a bad sign for spatial rendition, and often for playback performance in general. But many other issues can impair spatial features, such as inverted stereo rendering or uneven stereo balance. Fortunately, these problems are becoming less common. On the other hand, subtler qualities such as precise localizability or noticeable depth are much harder to fine-tune, and they remain recurring shortcomings in smartphone audio.

Spatial conveys the feeling of immersion and makes for a better experience whether in music or movies.

In the Recording protocol, capture directivity is also evaluated.

Volume

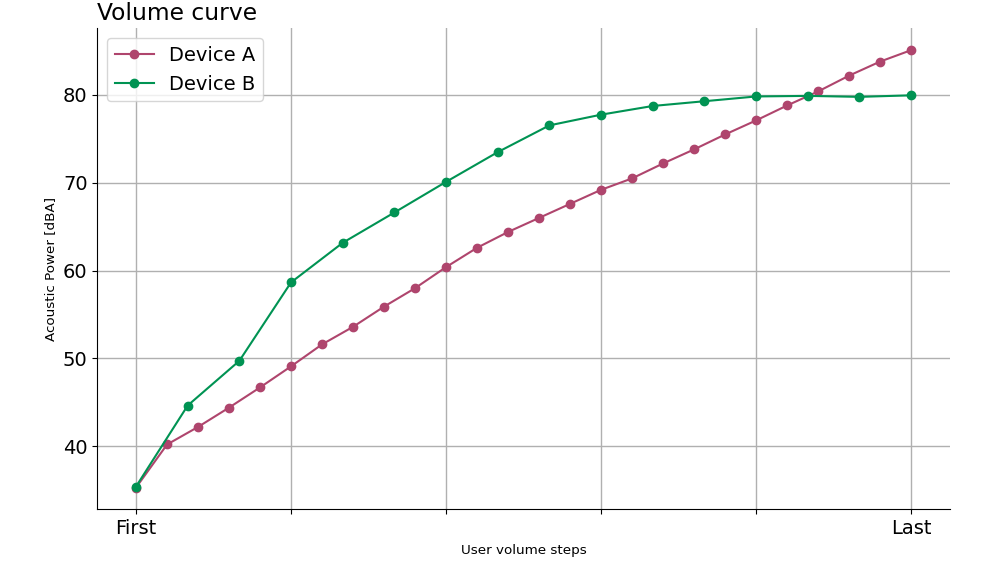

The volume attribute covers the perceived loudness whether in recording or playback, the consistency of volume steps on a smartphone, as well as the ability to render both quiet and loud sonic material without defect. This last item involves both perceptual evaluation and objective measurements.

In the graph above, Device A shows good volume consistency: its volume steps are evenly distributed between its minimum and maximum values, with a nearly constant slope and no discontinuities or volume jumps. Device B, in contrast, has an inconsistent volume step distribution, with poor precision in its low volume steps, enormous jumps in volume, and almost five identical volume steps at the top of its range.
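The difference between Device A and Device B can be summarized numerically from the SPL measured at each step. The sketch below is a hypothetical illustration rather than our scoring algorithm: it reports the mean and spread of step sizes and flags steps that jump by more than 6 dB or barely change (under 0.5 dB). Both thresholds are assumptions chosen for the example.

```python
def volume_step_report(spl_db, jump_db=6.0, flat_db=0.5):
    """Summarize the spacing of SPL values measured at each volume step.

    spl_db: measured levels in dB SPL, ordered from the lowest to the highest step.
    jump_db / flat_db: hypothetical thresholds for "volume jump" and "no audible change".
    Step indices in the report are 0-based and name the step where the change lands.
    """
    deltas = [hi - lo for lo, hi in zip(spl_db, spl_db[1:])]
    mean_step = sum(deltas) / len(deltas)
    # Standard deviation of the step sizes: 0 means perfectly even spacing
    spread = (sum((d - mean_step) ** 2 for d in deltas) / len(deltas)) ** 0.5
    return {
        "mean_step_db": mean_step,
        "step_spread_db": spread,
        "jumps": [i + 1 for i, d in enumerate(deltas) if d > jump_db],
        "flat_steps": [i + 1 for i, d in enumerate(deltas) if d < flat_db],
    }
```

An evenly spaced device like Device A yields a spread close to 0 dB and empty `jumps` and `flat_steps` lists, whereas a profile like Device B fills both lists.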

Artifacts

An artifact is any accidental or unwanted sound resulting from a device’s design or tuning. Artifacts can also be caused by user interaction with the device, such as changing the volume level, playing or pausing, or simply handling the device, which is why we specifically assess how a device handles speaker and microphone occlusion. Lastly, artifacts may result from a device struggling with environmental constraints, such as wind noise in recording use cases.

Artifacts fall into two main categories: temporal (pumping, clicks, etc.) and spectral (distortion, continuous noise, phasing, etc.).

Background

The audio background attribute is specific to the Recording use cases, as it only focuses on the background of recorded content. Background covers some of the audio aspects mentioned above, such as tonal balance, directivity, and artifacts.

Audio protocol tests

DXOMARK’s Audio testing protocols are based on specific use cases that reflect the most common ways people use their phones: listening to music or podcasts, watching movies and videos, recording videos and selfie videos, capturing concerts or events, and so on. These use cases have been grouped into two protocols: Playback and Recording. Each use case covers the attributes and sub-attributes relevant to its evaluation.

Playback

According to a survey we conducted among 1,550 participants, watching movies and videos accounts for most smartphone speaker usage, followed by listening to music and podcasts, and then gaming. Our Playback protocol evaluates the following attributes: timbre, dynamics, spatial, volume, and artifacts.

Objective tests

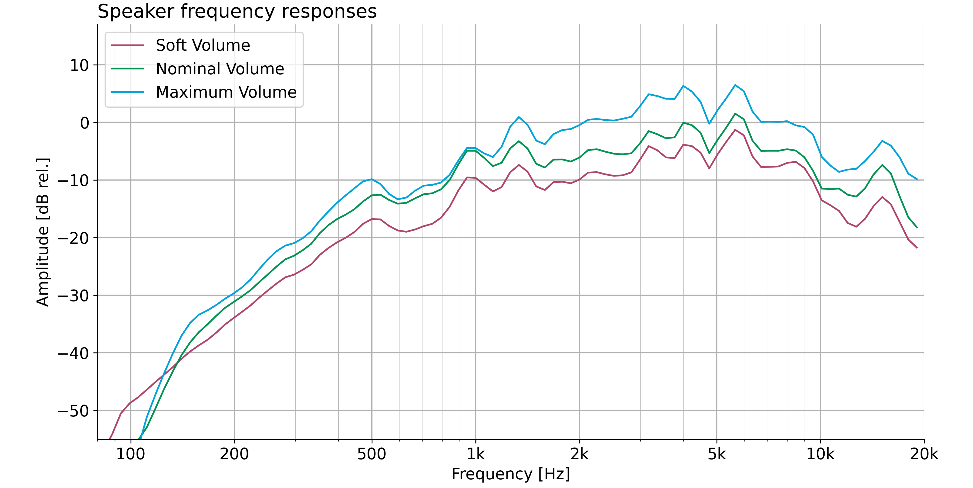

Before our audio engineers delve into perceptual evaluations, every DUT undergoes a series of objective measurements in our labs. For the Playback protocol, these tests focus on volume, timbre, and artifacts.

Measurements are done within the anechoic box, which houses an array of calibrated microphones, a speaker, and an adjustable arm to which a smartphone can be magnetically attached from either side. The interior of the box is lined with fiberglass wedges covering the entire ceiling, floor, and walls, which absorb the energy of sound waves and strongly reduce reflections, so that the microphones capture essentially only the direct sound coming from the DUT.

Objective tests are done using various synthetic signals (pink noise, white noise, swept sines, multi-tones) as well as musical content.
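Pink noise, used for example in the volume consistency test, has equal energy per octave, unlike white noise, whose energy doubles with each octave. Below is a minimal sketch of one way to generate it by shaping white noise in the frequency domain; the function name and seed are illustrative, and this is not necessarily how DXOMARK produces its test signals.

```python
import numpy as np

def pink_noise(n_samples, seed=0):
    """Pink (1/f) noise made by shaping white Gaussian noise in the frequency domain."""
    rng = np.random.default_rng(seed)
    spectrum = np.fft.rfft(rng.standard_normal(n_samples))
    k = np.arange(len(spectrum), dtype=float)  # bin index is proportional to frequency
    k[0] = 1.0  # avoid dividing by zero at DC
    spectrum /= np.sqrt(k)  # power falls as 1/f, so amplitude falls as 1/sqrt(f)
    spectrum[0] = 0.0  # no DC offset
    x = np.fft.irfft(spectrum, n=n_samples)
    return x / np.max(np.abs(x))  # normalize the peak to 1
```

Because the power per bin falls as 1/f, the band from bin 1,000 to 2,000 carries about the same energy as the band from bin 4,000 to 8,000, which is the "equal energy per octave" property.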

The table below summarizes the objective tests conducted for the Playback protocol.

| Attribute | Test | Remarks |
|---|---|---|
| Volume | Volume consistency | Sound pressure level (SPL) is measured for each volume step of the DUT using pink noise. Volume steps should ideally be evenly spaced out. |
| Volume | Maximum volume | SPL is measured for different types of signals at the DUT’s maximum volume. |
| Volume | Minimum volume | SPL is measured for different types of signals at the DUT’s minimum volume (first volume step). |
| Timbre | Frequency response | Frequency response of the DUT’s internal speakers is measured at three chosen levels: soft, nominal, maximum. |
| Artifacts | THD+N | Total Harmonic Distortion plus Noise is measured at the three previously mentioned levels. |

Movies/Videos

As many users watch videos and movies through their phone’s built-in speakers, this use case carries more weight in the Playback part of our audio protocol. DXOMARK aims to provide a comprehensive perceptual evaluation focusing on how well the audio content of a movie or video is rendered by the DUT’s internal speakers.

Tonal balance should be in line with the original material. Voice clarity is particularly important, but we also look at the overall richness of timbre, as well as the precision and impact of the low end. Movies and videos can contain large volume variations, so we test the DUT’s handling of a broad dynamic range, watching out for excessive compression.

Using our reflective panels, we assess the wideness of a device’s rendered stereo scene, as well as the localizability and depth of the various on-screen sound elements.

Music

Smartphone audio has improved significantly over the past years, and surveys show that a surprisingly large number of users frequently listen to music on their phone’s internal speakers. With this in mind, our Music use case covers a wide variety of genres.

The evaluation covers multiple relevant aspects, such as how faithful the tonal balance is to the reference track, with a proper distribution of bass, midrange, and treble. More often than not, smartphone playback lacks some low- and high-end frequencies, so we reward the extra effort put into a broader frequency response. We also pay close attention to bumps or notches in the spectrum, and we evaluate the consistency of tonal balance at different volumes.

Dynamics-related qualities such as attack, bass precision, or punch, are evaluated at different volumes as well. For instance, the presence of compression at maximum volume may hinder attack or bass precision, and punch may not be as good at low volume.

As with the Movies use case, evaluation encompasses spatial aspects such as wideness and depth of the rendered stereo scene, as well as localizability of instruments and voice. These sub-attributes are notably tested not only in landscape orientation but also in portrait and inverted landscape.

Maximum volume should be as loud as possible without excessive distortion or compression; insufficient maximum volume is a common issue with smartphones. Likewise, minimum volume should be quiet enough while remaining clearly intelligible.

Gaming

This use case addresses the growing use of smartphones for gaming. With chipset and RAM performance skyrocketing, mobile games are becoming increasingly demanding, and smartphone audio capabilities need to keep up.

DXOMARK’s gaming use case evaluates how much audio contributes to a game’s immersion, which means wideness and especially localizability need to be accurate. Impactful punch and good bass power are also essential.

These sub-attributes are evaluated at multiple volume steps, as the gaming experience must be optimal regardless of level. We look for potential timbre deterioration at maximum volume, as well as artifacts such as distortion and compression.

We also test for speaker occlusion during gaming, as a user’s hands can easily block the sound coming from the speakers during intense gaming sessions. This depends heavily on speaker placement on the phone, but also on the mechanical design of the speaker output holes.

Recording

Objective tests

Objective recording measurements focus on up to three attributes: timbre, volume, and directivity. Frequency response is computed for the main camera app and the default memo recorder app. The Max Loudness measurement tests how well a device handles recording very loud sound.

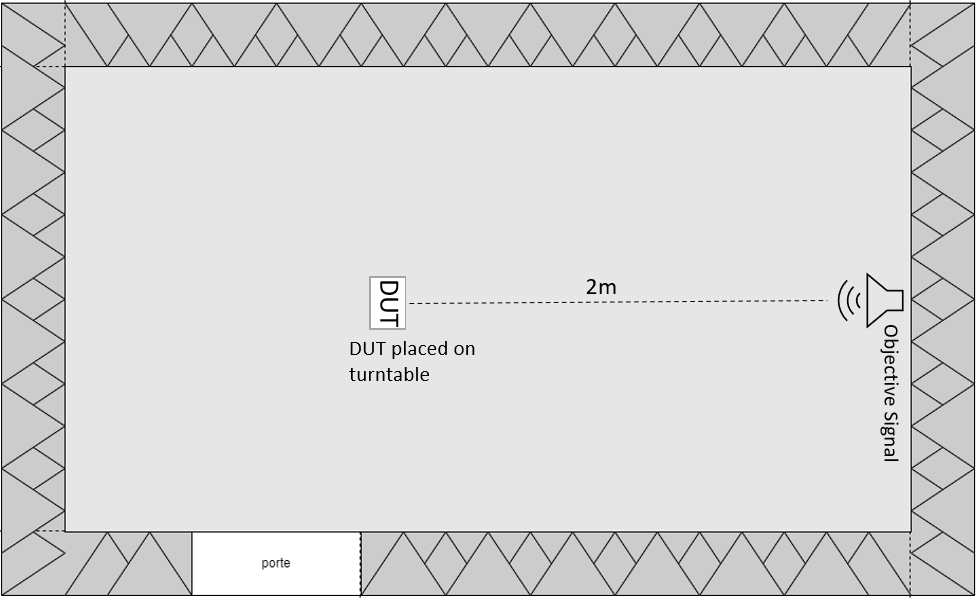

Timbre and Volume tests are conducted within the anechoic box using its built-in speaker, while the Audio Zoom objective tests, which require much more space, are performed in the anechoic chamber.

The following table summarizes the tests conducted under the Recording protocol.

| Attribute | Test | Remarks |
|---|---|---|
| Timbre | Frequency response | Frequency response is measured at 80 dB SPL in 3 settings: landscape mode + main camera, portrait mode + front camera, portrait mode + memo app. |
| Volume | Max loudness | Phone in landscape orientation, recording with the main camera, at 4 different sound levels: 94 dBA, 100 dBA, 106 dBA, 112 dBA. |
| Volume | Recorded loudness | LUFS measurement on simulated conditions (Video, Selfie Video, Memo, Meeting, Concert). |
| Wind noise | Wind noise metrics | The phone is placed on a rotating table in front of a wind machine, with an array of sound sources all around it. Voice and synthetic signals are recorded with various angles of wind incidence and wind speeds. |
| Audio zoom | Audio zoom directivity | Phone in landscape mode on a rotating table; the frequency response is measured for 3 zoom values at each angle (10° steps), at 2 meters from the sound source. |
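With 10° steps, the audio zoom directivity test yields 36 measurements per zoom value. The sketch below shows one hypothetical way to turn band levels measured at each angle into a polar pattern normalized to the on-axis direction, plus a simple "side rejection" figure averaged over 90° and 270°. This metric, the function names, and the synthetic cardioid used for illustration are assumptions; our side rejection sub-attribute is assessed perceptually.

```python
import math

def polar_pattern_db(levels_db):
    """Normalize levels measured at each angle (degrees, 0 = facing the source) to on-axis."""
    on_axis = levels_db[0]
    return {angle: level - on_axis for angle, level in sorted(levels_db.items())}

def side_rejection_db(pattern_db, side_angles=(90, 270)):
    """Hypothetical metric: mean attenuation at the side angles (positive = stronger rejection)."""
    return -sum(pattern_db[a] for a in side_angles) / len(side_angles)

def cardioid_levels_db(ref_db=70.0, step_deg=10):
    """Synthetic ideal cardioid at 10° steps, for illustration only."""
    return {
        a: ref_db + 20 * math.log10(max((1 + math.cos(math.radians(a))) / 2, 1e-6))
        for a in range(0, 360, step_deg)
    }
```

An ideal cardioid gives about 6 dB of side rejection. Comparing this figure across the three zoom values would show whether separation actually increases as the user zooms in.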

Simulated use cases

The simulated use cases are a series of recordings performed in an acoustically treated room using a ring of speakers. Using different combinations of pre-recorded backgrounds and voices, we can recreate multiple scenarios relevant to common uses of a device’s microphones.

Simulating these environments allows for consistent recordings, in addition to easing the process of capturing a variety of situations.

The following table goes over the simulated use cases deemed most important:

| Background | Setup | Remarks |
|---|---|---|
| Urban | Video (main camera) + landscape orientation | Simulates videos filmed in busy urban environments. |
| Urban | Selfie video (front camera) + portrait orientation | Several types of voices are played at different angles from the front, side, and rear. Voices are played consecutively and simultaneously, with varying intensity. |
| Urban | Memo app + portrait orientation | Simulates a memo recorded in a busy urban environment. This use case focuses only on one frontal voice varying in intensity. |
| Home | Video (main camera) + landscape orientation | Simulates videos filmed in home environments. |
| Home | Selfie video (front camera) + portrait orientation | Vocal content is similar to that of the Urban use cases. |
| Office | Memo app + horizontal orientation, face up | Simulates a meeting memo recorded in an office environment. This use case focuses on voices all around the device, which is assumed to be placed on a table. Voices vary in intensity and may be played consecutively and simultaneously. |

Our audio engineers evaluate the recorded simulated use cases perceptually, with user expectations in mind. Sub-attributes include the attenuation of voices outside the field of view and of background noise, clear localization and perception of distance, a wide and immersive stereo scene, and a faithful, natural tonal balance with intelligible speech, among others.

Indoor / Outdoor

The indoor/outdoor use cases complement the simulated use cases above, in that the recordings are made in situ rather than in our labs. These tests focus on intelligibility, recording volume, and SNR. Recordings are made with a specially designed rig that holds up to 4 smartphones at once, either outdoors or indoors, with an announcer clearly delivering Harvard sentences at a set distance. The outdoor scenario features passing cars on a nearby road as well as some moderate wind, while the indoor scenario features a vacuum cleaner running in the background. For each scenario, the DUT records in three settings: landscape orientation + rear camera, portrait orientation + front camera, and portrait orientation + memo app.

Concert

As smartphones are commonly used to immortalize concerts and other events, this use case is designed to assess how well a device can handle the recording of music at a very loud volume.

Tests are performed within the anechoic box, where the DUT records a set of musical tracks at 115 dBA. The tracks share common elements such as bass, drums, and vocals, but they differ significantly in genre, instrumentation, and mix.

Since the test conditions are intentionally extreme, one key issue addressed by the evaluation is, of course, the handling of artifacts such as distortion, compression, and pumping. Tonal balance is also in the spotlight, with special emphasis on musicality. Regarding dynamics, multiple elements are subject to evaluation, such as overall punch, bass precision, and drum snappiness.

This use case is also an opportunity to test a DUT’s audio zoom capability by zooming in on a specific element: successfully isolating a single element from the rest of the audio scene (including background noise) is definitely a cutting-edge feature.

Occlusion

Depending on a phone’s construction, it is not uncommon to accidentally block one or more microphones while recording. The aim of this use case is to assess how easily the microphones can be blocked, and how the DUT’s audio processing handles it.

Recordings are made in landscape and portrait orientations with both the front and rear cameras, as well as in portrait and inverted portrait orientations with the memo app. Tests are performed with pre-established hand positions while the engineer reads out a series of sentences.

Perceptual evaluation focuses solely on the undesired effects potentially induced by hand misplacement, or more rarely improper DSP (Digital Signal Processing).

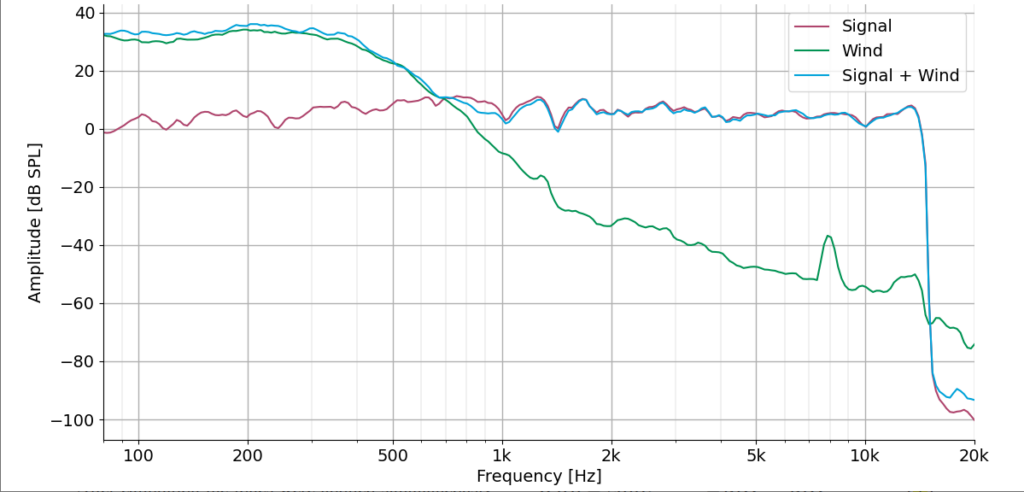

Wind noise

Wind noise in a smartphone recording can be frustrating. Including this use case in our audio protocol reflects the growing attention paid to reducing the effect of wind noise in recordings. Manufacturers can achieve this with DSP, careful placement of the internal microphones, or, usually, a combination of both.

To ensure consistency and precision in our measurements, the tests are performed under controlled conditions with a wind machine and a rotating smartphone stand, both automated via scripts. Four calibrated speakers are arranged around the rotating stand so that the test speech always reaches the DUT from the front: this way, the angle of wind incidence can be isolated as a factor. The wind machine is set consecutively to three increasing speeds, in addition to a reference step without wind. Recordings are made in three settings: landscape mode and main camera; portrait mode and selfie camera; portrait mode and memo app.

The table below covers the parameters set for the measurement:

| Parameters | Values |
|---|---|
| Use cases | Video in landscape; selfie video in portrait; memo in portrait |
| Angles of incidence | 0° (front-facing wind); 90° (side-facing wind) |
| Wind speeds | 0 m/s (no wind, reference recording); 3 m/s; 5 m/s; 6.5 m/s |

In addition to speech sequences, pink noise is used to measure the wind rejection ratio. Other objective tests include the calculation of wind energy and a two-track correlation, which yields reliable SNR values.
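The exact correlation method is not detailed here, so the following is a sketch of one common formulation, under stated assumptions: two time-aligned takes of the same test signal, each containing independent zero-mean noise of equal power. Their correlation coefficient ρ then equals S / (S + N), so SNR = ρ / (1 − ρ). The function name is hypothetical.

```python
import numpy as np

def snr_from_two_takes_db(take_a, take_b):
    """Estimate SNR (dB) from two time-aligned recordings of the same test signal.

    Assumes each take = common signal + independent zero-mean noise of equal
    power, so the correlation coefficient rho equals S / (S + N) and
    SNR = rho / (1 - rho).
    """
    a = np.asarray(take_a, dtype=float)
    b = np.asarray(take_b, dtype=float)
    rho = np.corrcoef(a, b)[0, 1]
    rho = min(max(rho, 1e-12), 1 - 1e-12)  # keep the ratio finite and positive
    return 10.0 * np.log10(rho / (1.0 - rho))
```

The appeal of this approach is that it needs no separate noise-only recording: anything the two takes do not share, such as wind noise, is treated as noise.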

Objective measurements, however, make up only a small part of these tests, which are mostly perceptual. The evaluation focuses mainly on intelligibility, guided by a set of standardized evaluation rules. Artifacts are also considered during the evaluation.

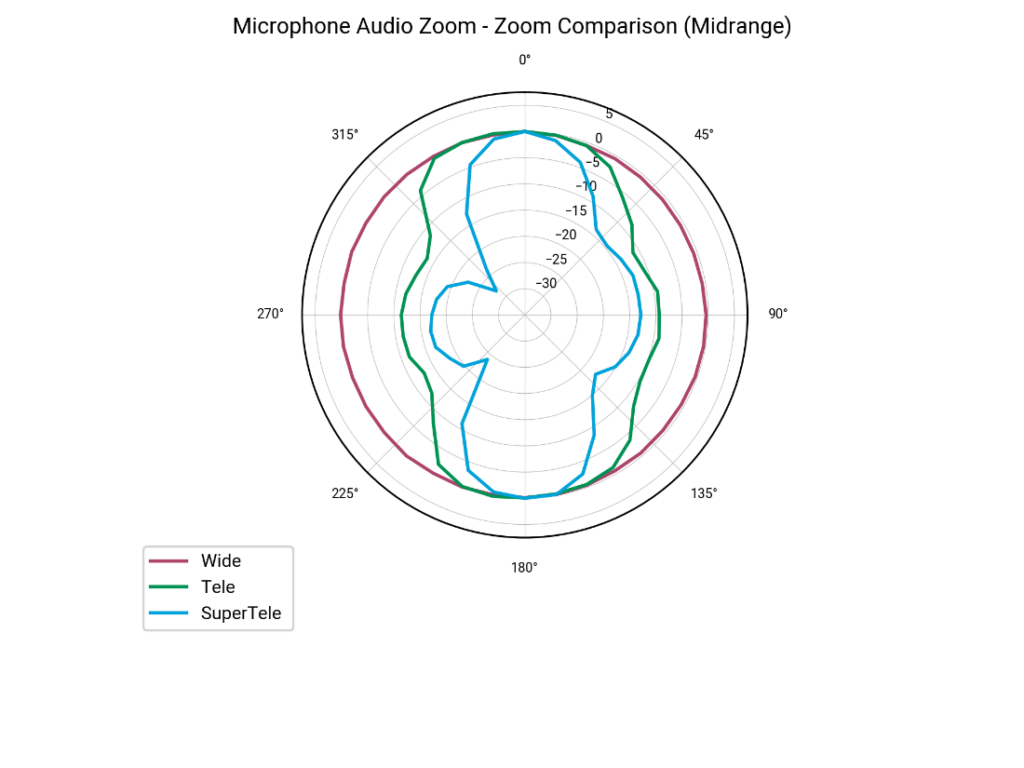

Audio zoom

Audio zoom is a form of audio separation and filtering that aims to isolate a sound source from its surroundings according to the smartphone camera’s focal point and zoom level. This technology is becoming increasingly common in newer smartphones, and it is a notable audio processing feature that can help manufacturers stand out from the competition.

You can read more about this technology here: dxomark.com/what-is-audio-zoom-for-smartphones

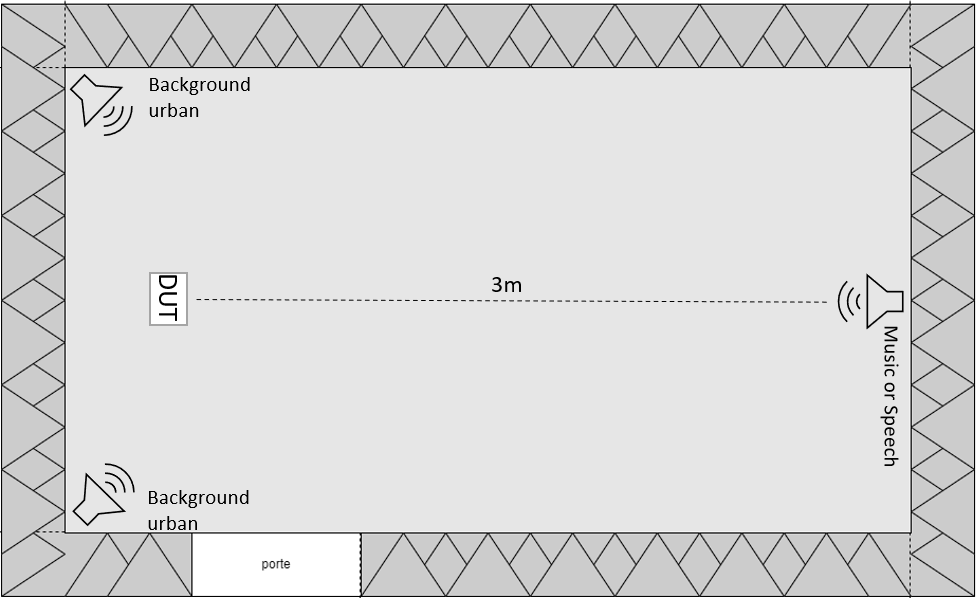

Audio Zoom recordings take place in the anechoic room, with the DUT in landscape orientation and the main camera on. Two speakers placed in the corners of the room behind the smartphone emit background noise. A third speaker, 3 meters directly in front of the DUT with a dummy head beneath it, plays the main signal (speech or music).

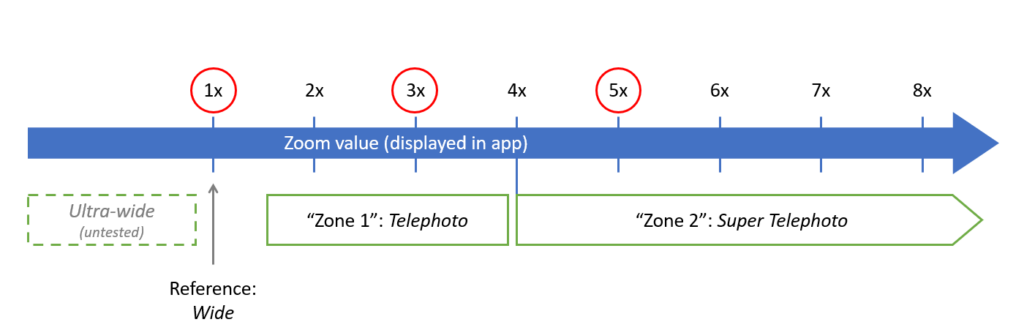

When zooming in on the dummy head during recording, a smartphone with audio zoom capabilities is expected to isolate the main signal from the background more and more as the zoom level increases. Using our automated rotating stand and a logarithmic swept sine, we measure the DUT’s directivity at three zoom levels: wide (x1), telephoto, and super telephoto. Then, if objective measurements confirm that the DUT has audio zoom, we run a series of recordings at each zoom level using two types of signals: speech and music. These recordings are then evaluated perceptually by our audio engineers.
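For reference, a logarithmic (exponential) swept sine moves through frequency so that every octave gets the same duration: its instantaneous frequency is f(t) = f_start · (f_end / f_start)^(t / T). The sketch below generates such a sweep; the start and end frequencies, duration, and sample rate are parameters for illustration, not our actual test settings. In practice, such sweeps are commonly deconvolved with an inverse filter (Farina’s method) to obtain impulse responses; that step is not shown.

```python
import numpy as np

def log_sweep(f_start, f_end, duration_s, fs):
    """Exponential (logarithmic) sine sweep from f_start to f_end Hz.

    The phase is the integral of f(t) = f_start * (f_end / f_start) ** (t / T),
    so each octave takes the same amount of time.
    """
    t = np.arange(int(duration_s * fs)) / fs
    rate = np.log(f_end / f_start)
    phase = 2 * np.pi * f_start * duration_s / rate * (np.exp(t * rate / duration_s) - 1.0)
    return np.sin(phase)
```

Compared with a linear sweep, this spends more time in the low frequencies, which improves the signal-to-noise ratio where room and background noise are usually strongest.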

Multiple sub-attributes are assessed during the evaluation: side rejection, which corresponds to the strength of the audio separation; volume consistency, which rates how well the volume increase follows the zoom level; and tonal balance. Indeed, it is important to check the timbral integrity of the main signal after such processing is applied. While some audio zoom implementations are tailored for speech, their handling of musical instruments is not always as good: timbre can deteriorate, and the DSP may even malfunction and introduce artifacts, which we also take into account.

We hope this article has given you a more detailed idea about some of the scientific equipment and methods we use to test the most important characteristics of your smartphone audio.
