Imagine yourself at a crowded party: the people, the chatter, clinking glasses, and then suddenly, a few words — maybe the mention of your name — draw you into a distant conversation. The two people having it are a few meters away, yet when you focus your attention on them, their words seem to become more understandable, clearer, even louder maybe, with the rest of the cacophony subtly fading away.

This ability of our brain, studied in psychoacoustics, is called the Cocktail Party Effect. Given how useful it is, it was only a matter of time until the smartphone industry emulated it, bringing it to our fingertips under the name Audio Zoom. Here’s how Samsung describes the feature: “With the Zoom-In Mic, you can use a pinch gesture on the screen while recording video to zoom in or out, and as you zoom in, the sounds of the subject you zoom in on become louder. Zoom out, and the surrounding sound is no longer suppressed.”

Along with Samsung, Apple has also equipped its latest device, the iPhone 11 Pro, with this technology. But the iPhone 11 Pro and the Galaxy Note 10+/20 Ultra are not the only devices featuring audio zoom: the first instance goes all the way back to 2013 with the LG G2, followed by the HTC U11 in 2017. Nokia’s Audio Zoom technology is featured in the brand-new Oppo Find X2 and X2 Pro, and most recently, the newly announced Huawei P40 series and the OnePlus 8 Pro also boast audio zoom.

“Audio Focus technology enables the selection of desired direction and attenuates the incoming sounds from other directions. When the strength of this effect is controlled dynamically — for example, together with the video camera’s visual zooming — then the effect is called Audio Zoom.” — Nokia’s Audio Zoom description

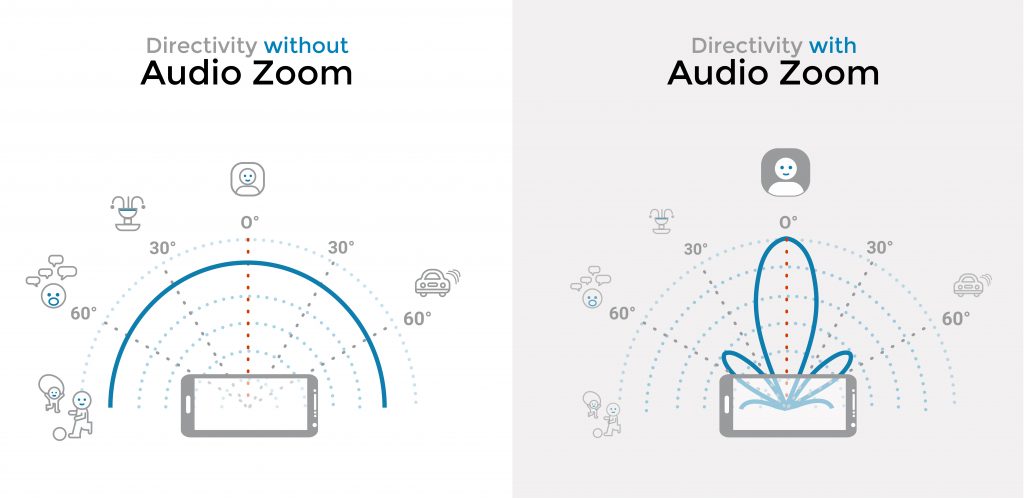

The main technology behind audio zoom is called beamforming, or spatial filtering. It makes it possible to change an audio recording’s directivity (that is, its sensitivity as a function of the direction of the sound source) and to shape it as needed. In this case, the optimal directivity is a hypercardioid pattern (see illustration), which enhances sounds coming from the front, that is, from the direction in which your camera is pointed, while attenuating sounds from all other directions (your background noise).
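To put numbers on “hypercardioid,” here is a small sketch using the standard first-order polar-pattern model r(θ) = a + (1 − a)·cos θ, where θ = 0 is straight ahead. The article gives no formula; the model and the value a = 0.25 (the usual hypercardioid setting) are assumptions of this example, and the function names are hypothetical.

```python
import math

# Standard first-order polar pattern: r(theta) = a + (1 - a) * cos(theta).
# a = 1 gives omnidirectional, a = 0.5 cardioid, a = 0.25 hypercardioid.
HYPERCARDIOID_A = 0.25


def first_order_gain(theta_deg: float, a: float = HYPERCARDIOID_A) -> float:
    """Signed linear sensitivity at angle theta (0 degrees = where the camera points)."""
    return a + (1.0 - a) * math.cos(math.radians(theta_deg))


def gain_db(theta_deg: float, a: float = HYPERCARDIOID_A) -> float:
    """Sensitivity in dB relative to the on-axis direction (-inf exactly at a null)."""
    g = abs(first_order_gain(theta_deg, a))
    return 20.0 * math.log10(g) if g > 0.0 else float("-inf")


def null_angle_deg(a: float = HYPERCARDIOID_A) -> float:
    """Angle where the pattern crosses zero: cos(theta) = -a / (1 - a).

    Only defined for a <= 0.5 (patterns with a > 0.5 have no null)."""
    return math.degrees(math.acos(-a / (1.0 - a)))


if __name__ == "__main__":
    for angle in (0, 45, 90, 135, 180):
        print(f"{angle:3d} deg: {gain_db(angle):6.1f} dB")
    print(f"null at about {null_angle_deg():.1f} deg")
```

With a = 0.25, the model gives 0 dB straight ahead, about −12 dB at the sides, −6 dB directly behind, and nulls near 109.5° on either side, which is why a hypercardioid rejects side noise more strongly than rear noise.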

The starting point for this technology is an array of omnidirectional microphones that have to be spread as widely as possible: the more microphones there are, and the further apart they are, the greater the possibilities. When a device has only two microphones, they’re usually placed at the top and at the bottom to maximize the distance between them. The signals captured by the microphones are then combined in an optimal manner so as to produce hypercardioid directivity.
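Why do more microphones, spread further apart, help? A quick way to see it is the simplest beamformer of all, delay-and-sum, applied to a uniform line of omnidirectional mics steered broadside (perpendicular to the line). This is a sketch under stated assumptions, not the hypercardioid design described here: the mic counts, spacings, 2 kHz test frequency and 343 m/s speed of sound are arbitrary choices, and the function names are hypothetical.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees C


def delay_and_sum_gain(theta_deg: float, freq_hz: float, num_mics: int, spacing_m: float,
                       steer_deg: float = 90.0, c: float = SPEED_OF_SOUND) -> float:
    """Normalized response of a uniform line of omni mics after delay-and-sum.

    theta is measured from the line of microphones (90 degrees = broadside).
    Each mic is delayed so a wave from steer_deg adds up in phase, giving 1.0 there."""
    k = 2.0 * math.pi * freq_hz / c  # wavenumber
    step = k * spacing_m * (math.cos(math.radians(theta_deg)) - math.cos(math.radians(steer_deg)))
    total = sum(cmath.exp(1j * n * step) for n in range(num_mics))
    return abs(total) / num_mics


def half_power_width_deg(freq_hz: float, num_mics: int, spacing_m: float,
                         step_deg: float = 0.1) -> float:
    """Width of the broadside main lobe, measured down to the -3 dB (1/sqrt(2)) points.

    Returns about 180 when the array is too small to narrow the beam at this frequency."""
    angle = 90.0
    threshold = 1.0 / math.sqrt(2.0)
    while angle > 0.0 and delay_and_sum_gain(angle, freq_hz, num_mics, spacing_m) >= threshold:
        angle -= step_deg
    return 2.0 * (90.0 - angle)


if __name__ == "__main__":
    # Hypothetical layouts, all evaluated at 2 kHz.
    for mics, spacing in ((2, 0.02), (2, 0.05), (4, 0.05), (8, 0.05)):
        width = half_power_width_deg(2000.0, mics, spacing)
        print(f"{mics} mics, {spacing * 100:.0f} cm apart: beam about {width:.0f} deg wide")
```

In this model, two mics 2 cm apart barely narrow the beam at 2 kHz (the function returns roughly 180°), while at 5 cm spacing the width shrinks from roughly 118° with two mics to about 46° with four and about 22° with eight.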

This highly directional result, obtained from non-directional receivers, is achieved by applying a different gain and phase shift to each microphone depending on its position in the device, then summing the signals so that frontal waves add up in phase (constructive interference, enhancing the desired sound) while side waves add up out of phase (destructive interference, attenuating off-axis interference).
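One textbook way to get a hypercardioid out of just two omnidirectional capsules is a first-order differential (delay-and-subtract) beamformer: delay the rear microphone by an internal delay τ, subtract it from the front one, and sound arriving from the chosen null direction cancels while frontal sound survives. This is a sketch of that classic technique, not Samsung’s, Apple’s or Nokia’s implementation; the 2 cm spacing, the 500 Hz test frequency and the function names are assumptions.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s


def internal_delay(spacing_m: float, a: float = 0.25, c: float = SPEED_OF_SOUND) -> float:
    """Delay applied to the rear mic: tau = (d / c) * a / (1 - a).

    With this tau the low-frequency pattern approaches a + (1 - a) cos(theta);
    a = 0.25 targets a hypercardioid."""
    return (spacing_m / c) * a / (1.0 - a)


def differential_response(theta_deg: float, freq_hz: float, spacing_m: float,
                          a: float = 0.25, c: float = SPEED_OF_SOUND) -> complex:
    """Complex gain of y(t) = front(t) - rear(t - tau) for a unit plane wave.

    A wave from angle theta (0 = straight ahead along the mic axis) reaches the
    rear mic d*cos(theta)/c later than the front mic."""
    omega = 2.0 * math.pi * freq_hz
    acoustic = spacing_m * math.cos(math.radians(theta_deg)) / c
    return 1.0 - cmath.exp(-1j * omega * (acoustic + internal_delay(spacing_m, a, c)))


def pattern_db(theta_deg: float, freq_hz: float, spacing_m: float, a: float = 0.25) -> float:
    """Response at theta relative to the on-axis response, in dB."""
    on_axis = abs(differential_response(0.0, freq_hz, spacing_m, a))
    here = abs(differential_response(theta_deg, freq_hz, spacing_m, a))
    return 20.0 * math.log10(here / on_axis) if here > 0.0 else float("-inf")


if __name__ == "__main__":
    # Hypothetical 2 cm pair, evaluated at 500 Hz.
    for angle in (0, 60, 90, 110, 180):
        print(f"{angle:3d} deg: {pattern_db(angle, 500.0, 0.02):7.1f} dB")
```

At 500 Hz this pair lands within a fraction of a dB of the ideal hypercardioid (about −12 dB at the sides, −6 dB behind), and the null at cos θ = −1/3 holds at every frequency in this model. The shape drifts, however, once ω(d/c + τ) is no longer small, i.e. at higher frequencies or wider spacings.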

That’s the general theory, at least. In practice, beamforming in smartphones comes with its own set of complications. For one thing, mobile devices can’t rely on large studio condenser mics; instead, they use tiny MEMS (microelectromechanical systems) microphones that require very little power to function. To optimize intelligibility and to control the characteristic spectral and temporal artifacts of spatial filtering (such as distortion, loss of bass, and an overall “phasey” or nasal high end), smartphone manufacturers not only have to consider microphone placement carefully, but must also rely on their own combinations of audio processing, such as equalization, voice detection, and gating, which can themselves introduce audible artifacts.
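The “loss of bass” has a simple origin in the differential model. When a two-mic delay-and-subtract pair is tuned for a hypercardioid, its on-axis response for a closely spaced pair falls by roughly 6 dB per octave toward low frequencies, so an equalizer has to boost the bass back. The sketch below computes that boost. It assumes an idealized first-order differential pair with a hypothetical 2 cm spacing and a 1 kHz reference; it is not any manufacturer’s EQ curve.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s


def on_axis_gain(freq_hz: float, spacing_m: float = 0.02, a: float = 0.25,
                 c: float = SPEED_OF_SOUND) -> float:
    """|1 - exp(-j*w*(d/c + tau))| for a source straight ahead of a delay-and-subtract pair.

    With tau = (d/c) * a / (1 - a), the total delay d/c + tau equals (d/c) / (1 - a),
    and |1 - exp(-j*x)| = 2*|sin(x/2)|."""
    total_delay = (spacing_m / c) / (1.0 - a)
    return abs(2.0 * math.sin(math.pi * freq_hz * total_delay))


def bass_boost_db(freq_hz: float, ref_hz: float = 1000.0, **kwargs) -> float:
    """EQ gain needed at freq_hz to bring the on-axis response level with ref_hz."""
    return 20.0 * math.log10(on_axis_gain(ref_hz, **kwargs) / on_axis_gain(freq_hz, **kwargs))


if __name__ == "__main__":
    for f in (100, 200, 400, 1000):
        print(f"{f:5d} Hz: boost {bass_boost_db(f):5.1f} dB")
```

In this model the 100 Hz boost comes out at roughly 20 dB. Boosting that much also raises the microphones’ own self-noise and any low-frequency noise by the same amount, which is one way such equalization can itself become audible.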

Following industrial logic, each manufacturer comes up with its own beamforming recipe, combined with proprietary technologies. As a result, there are many different beamforming techniques, each with its own strong points, from speech dereverberation to noise reduction. However, beamforming algorithms can easily amplify wind noise in captured audio, and fitting a phone’s MEMS microphones with an external windscreen is neither practical nor, for that matter, desirable. Why not cover the microphones with a protective layer inside the smartphone, then? Doing so impairs the microphones’ frequency response and sensitivity, which is why manufacturers prefer to rely on software for noise and wind reduction.
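Why can beamforming make wind worse? In a simplified model, wind turbulence at each microphone port is uncorrelated between microphones, whereas a real sound wave reaches both with a fixed delay. In a two-mic delay-and-subtract pair, the coherent frontal signal is largely cancelled at low frequencies, while independent noise powers simply add. The sketch below estimates the resulting signal-to-noise penalty compared with a single omni mic. It assumes fully uncorrelated, equal-power wind at both mics, a hypothetical 2 cm spacing and a hypercardioid setting; real wind is only partly uncorrelated, so treat the numbers as illustrative.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s


def uncorrelated_noise_penalty_db(freq_hz: float, spacing_m: float = 0.02, a: float = 0.25,
                                  c: float = SPEED_OF_SOUND) -> float:
    """SNR loss for noise that is independent at each mic (a rough model of wind)
    after a two-mic delay-and-subtract beamformer, relative to one omni mic.

    Noise: two independent inputs of equal power, so output power = 1 + 1 = 2
    (a pure delay does not change power).
    Signal from straight ahead: |1 - exp(-j*w*T)|^2 = 4*sin^2(pi*f*T), T = d/c + tau."""
    total_delay = (spacing_m / c) / (1.0 - a)  # T = d/c + tau
    signal_gain = 2.0 * math.sin(math.pi * freq_hz * total_delay)
    if signal_gain == 0.0:
        raise ValueError("frequency falls on a zero of the on-axis response")
    return 10.0 * math.log10(2.0 / signal_gain ** 2)


if __name__ == "__main__":
    for f in (50, 100, 300, 1000, 3000):
        print(f"{f:5d} Hz: {uncorrelated_noise_penalty_db(f):5.1f} dB worse than one mic")
```

The penalty grows by about 6 dB per octave toward the bass, precisely where wind noise carries most of its energy, which is why wind handling ends up being a dedicated software stage.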

Additionally, there are no good technical solutions (yet) for simulating realistic wind noise from natural acoustic environments in the laboratory. So manufacturers have had to develop their own digital windscreen technologies, based on the evaluation of audio recordings, which can be applied regardless of the limitations of the product’s industrial design. In the video below, you can hear Nokia’s OZO Audio Zoom in action, assisted by its Windscreen technology.

Just like noise cancelling and many other popular technologies, beamforming was originally developed for military purposes. Phased transmitter arrays were used as radar antennas in World War II, and phased arrays are nowadays used in applications ranging from medical imaging to music festivals. As for the phased microphone array, it was invented by John Billingsley (no, not the actor playing Doctor Phlox in Star Trek: Enterprise) and Roger Kinns in the 1970s. While the method implemented in smartphones hasn’t seen dramatic improvements in the past decade, the evolution of the smartphone itself, irrespective of any audio consideration, has allowed audio zoom to become more effective, thanks to super-sized devices, multiple microphones, and ever more powerful chipsets. Remember: the greater the number of microphones and the further apart they are, the greater the possibilities.

In their thesis “Acoustic Enhancement via Beamforming using Smartphones,” N. van Wijngaarden and E. H. Wouters wrote: “The possibility of a surveillance state (or company) using ad hoc beamforming techniques to snoop on all its residents springs to mind. But how much would a smartphone beamforming system contribute to any level of mass surveillance? […] Theoretically, if the technology becomes more mature, it may become a weapon in the arsenal of surveillance states. But the technology is not remotely there yet. Ad hoc beamforming on smartphones is still relatively uncharted territory and the lack of silent, unnoticeable synchronization options kills off the potential to listen in discreetly.”

So, good news for our privacy, at least for now. And until our smartphones become acoustic surveillance shotguns, here is a video we made with Samsung’s Galaxy S20 Ultra so you can see, and hear, for yourself how audio zoom sounds, and why delivering optimal quality in every use case is still a major challenge.
