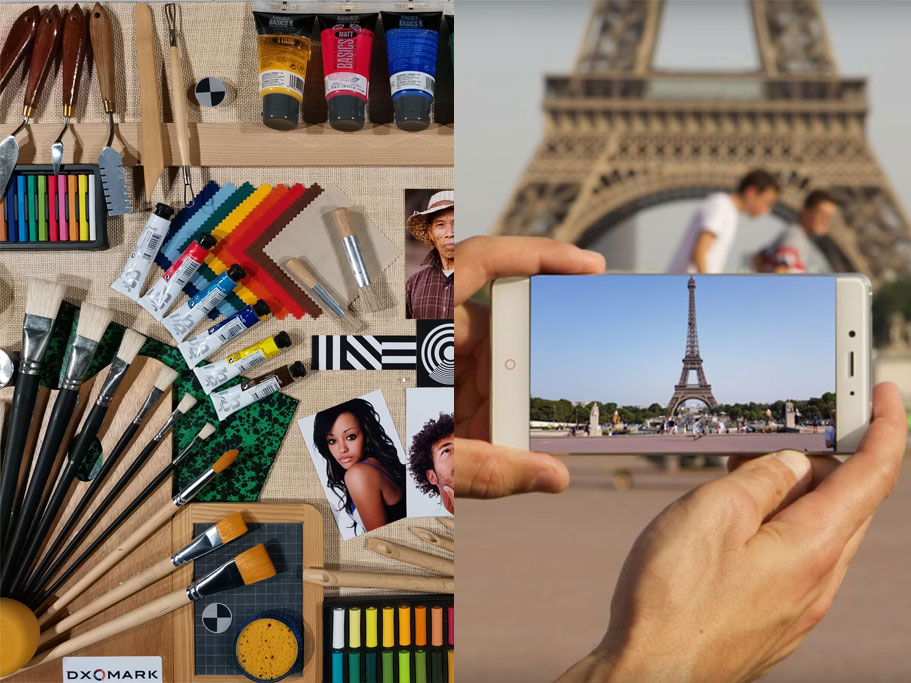

Objective lab-based image quality testing methods used by DXOMARK and many manufacturers and other organizations in the mobile and imaging industries have become more and more sophisticated over the years, allowing us to assess a broad array of image quality attributes in a controlled and repeatable environment.

However, there are reasons why it makes sense to complement objective lab testing with additional methods to achieve even better quality results.

Modern mobile imaging systems are very complex and rely increasingly on content-aware processing. Consequently, image results depend far more on the content of the scene than they did with conventional cameras. For example, machine learning technology can be used to detect the subject in a scene and improve AF tracking capabilities, especially when it comes to pets. It is therefore important to assess image quality using as wide a set of scenes as possible to cover as many use cases as possible. A broad array of different conditions can be created in lab settings (type of light, lux levels, scene content); however, real-life situations are infinitely more varied than even the most sophisticated lab setups.

Objective measurements are designed to analyze a well-defined set of attributes. However, every new device generation introduces new image processing algorithms and technologies, and image results can include unpredictable elements that are difficult to anticipate. For example, ghosting caused by frame stacking and spurious loss of texture are artifacts we have seen only on devices from the most recent generations. It is impossible to design objective tests in advance for those new artifacts, so we still need alternative methods to spot those unpredictable occurrences.

Most of the time the results of our objective tests are representative of a real-life experience, but on those occasions where this is not (or is not entirely) the case, perceptual assessment allows us to complete the picture. Perceptual testing complements objective lab testing and ensures that all unpredictable camera behavior is detected. It also allows us to cover more scenes and shooting conditions, widening our test protocol and allowing us to capture and analyze more image quality data. Perceptual testing essentially makes the DXOMARK Camera test protocol even more robust and reliable.

What is perceptual testing?

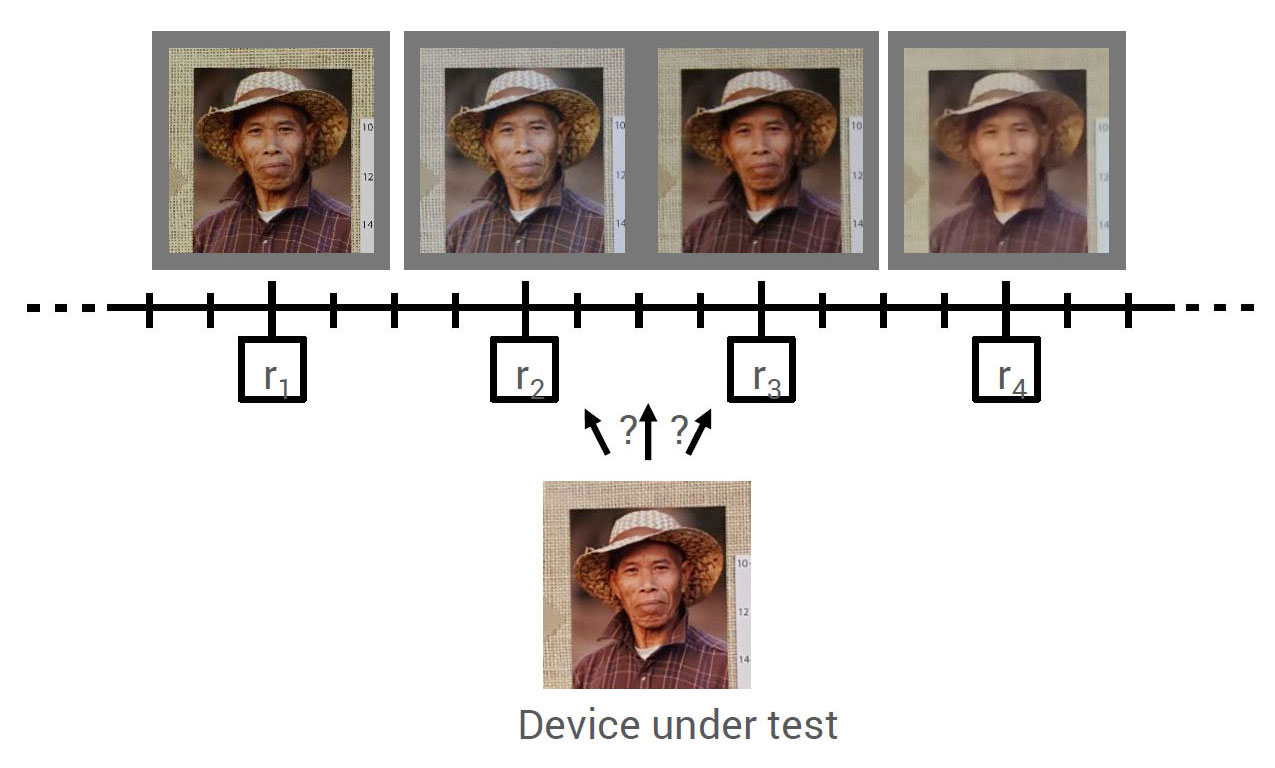

Let’s start by saying what it is not: perceptual analysis is not the same as subjective analysis. Rather, perceptual analysis is the evaluation of image quality attributes by human operators, using a stringent methodology to ensure unbiased results that are of equal quality to those obtained through objective testing methods. At DXOMARK, all perceptual evaluation is undertaken by engineers and technicians who are image quality experts and have years of experience in the field.

DXOMARK’s perceptual analysis methodology includes two components:

- The shooting protocol defines which scenes to shoot for our testing and exactly how to capture the images of each scene.

- The analysis protocol defines which image quality features to analyze and exactly how to perform the analysis.

These protocols have been designed to meet the following requirements:

- Neutrality: results of perceptual analysis have to be independent of the human operator; that is, different operators have to produce identical results when evaluating the same device, and all devices go through exactly the same testing and analysis procedures.

- Relevance: perceptual analysis has to focus on image quality aspects that are relevant to consumers and photographers.

- Reliability: perceptual analysis has to be independent of shooting conditions such as weather or light conditions and reliably deliver consistent results.

- Comprehensiveness: perceptual analysis has to include all image quality attributes that are necessary for evaluating the device under test.

Examples

So while objective testing provides a lot of information about camera image quality, we need additional perceptual testing to cover unpredictable camera behavior, widen the test protocol in order to include as many shooting situations as possible, and ultimately make the DXOMARK Camera scores even more relevant. Let’s take a look at a few examples of image quality attributes where perceptual testing is used to complement objective test results.

Exposure

To objectively test exposure, we use a range of test charts in our lab to reproduce as many shooting scenarios as possible under controlled conditions. We take measurements using a range of different light levels, from almost complete darkness to very bright, and with several types of light sources, simulating daylight, tungsten, and fluorescent illumination.

Our set of lab test charts for exposure covers many typical use cases with various lighting conditions and levels of contrast. We continuously work on expanding the scope of our objective testing; however, it is impossible to anticipate every possible lighting situation in the lab, which is why we designed a complementary perceptual shooting plan to assess camera performance in challenging high-contrast outdoor scenes or for backlit portraits, among others.

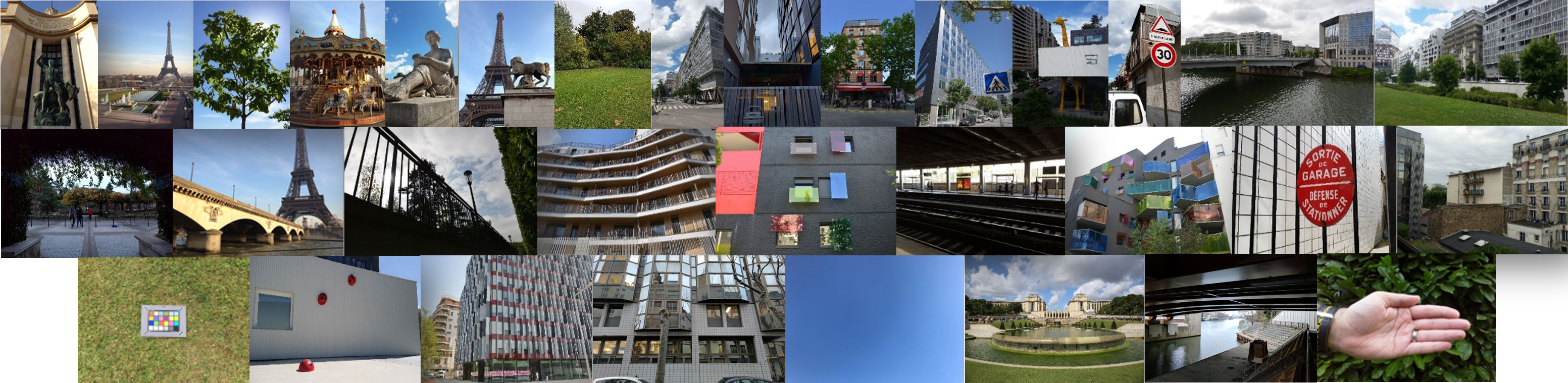

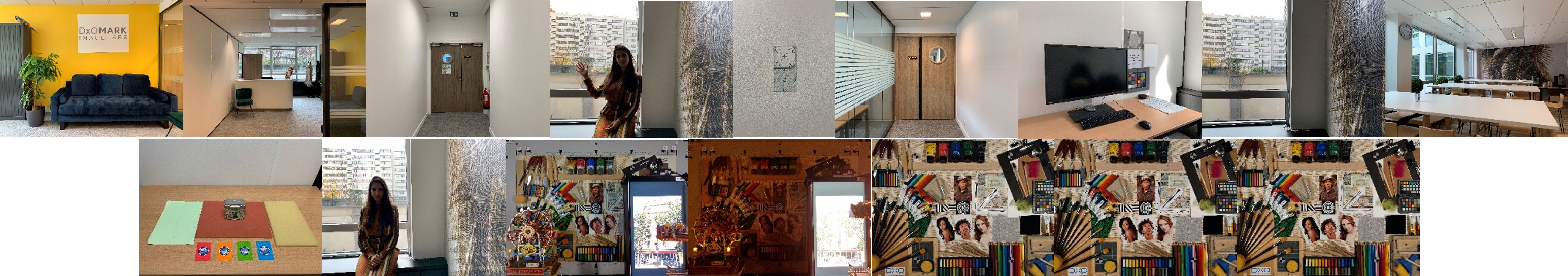

We evaluate outdoor and indoor exposure using DXOMARK’s extensive perceptual database of real-life scenes, all of which require following precise shooting and framing instructions.

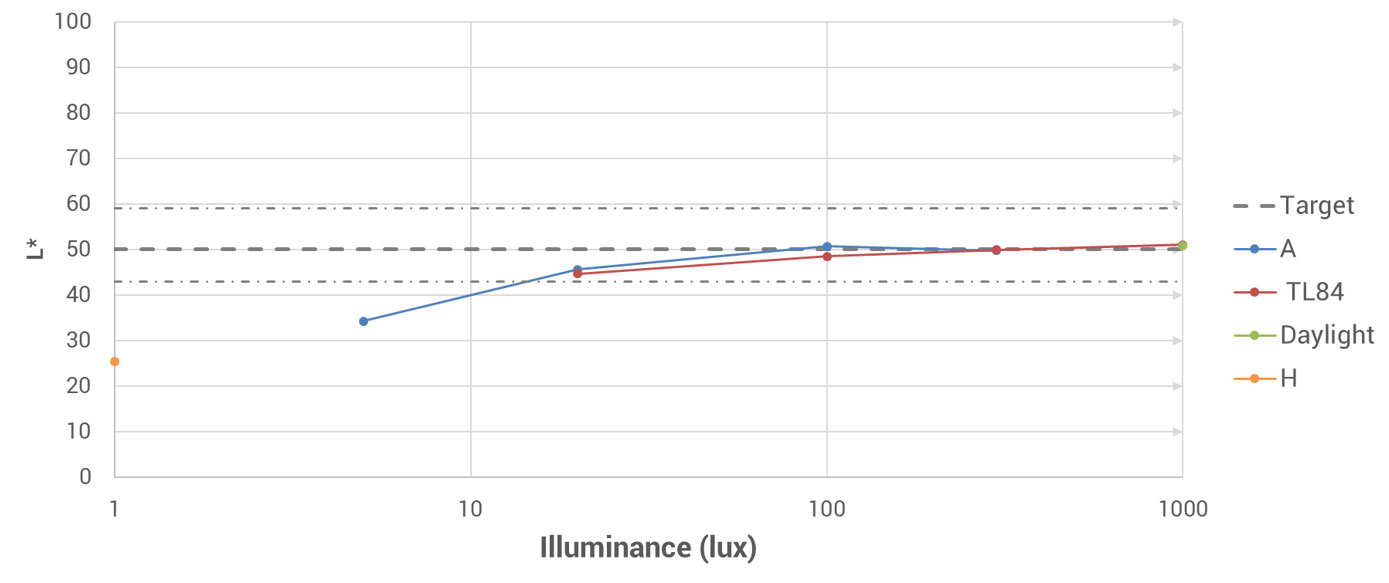

Let’s have a closer look at some samples to illustrate how perceptual evaluation complements our objective tests. In the graph below, you can see the results of the objective exposure tests for the Apple iPhone XS Max. Results are exactly on target in bright light and under indoor light conditions, with some underexposure measured only in low light.

The results of the objective test above are pretty much confirmed by the real-life results in our perceptual database. The XS Max delivers accurate exposure in almost any outdoor and indoor situation we have tested, as in the three examples below.

However, using our perceptual methods we also found that the XS Max exposure system can struggle in certain unusual and challenging scenarios — for example, shaded foreground subjects in front of a bright background. In the outdoor samples below, the subject is in the shade and occupies a fairly small portion of the frame. In the background there is a bright sky and a distant secondary subject (the Eiffel Tower). This is a difficult scene to deal with for any exposure system, but the Samsung Galaxy Note 10+ 5G handles it visibly better than the iPhone, achieving much better exposure on the subject in the foreground.

The situation is similar for the indoor scene below. As in the previous sample, the camera has to deal with backlit foreground subjects. In this case, however, the subjects take up more space in the frame. It is difficult to achieve good exposure on the subjects without clipping large portions of the bright background. But as you can see, the Huawei P30 Pro deals better with this challenge than the XS Max.

The two exposure samples share a similar scene composition that is not covered in any standard lab testing, but we can still detect exposure issues like the one illustrated above thanks to perceptual testing.

Color

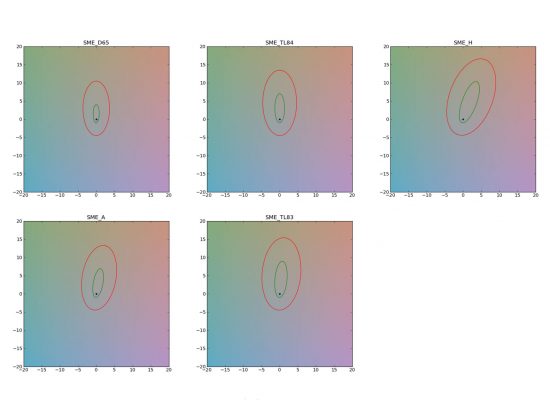

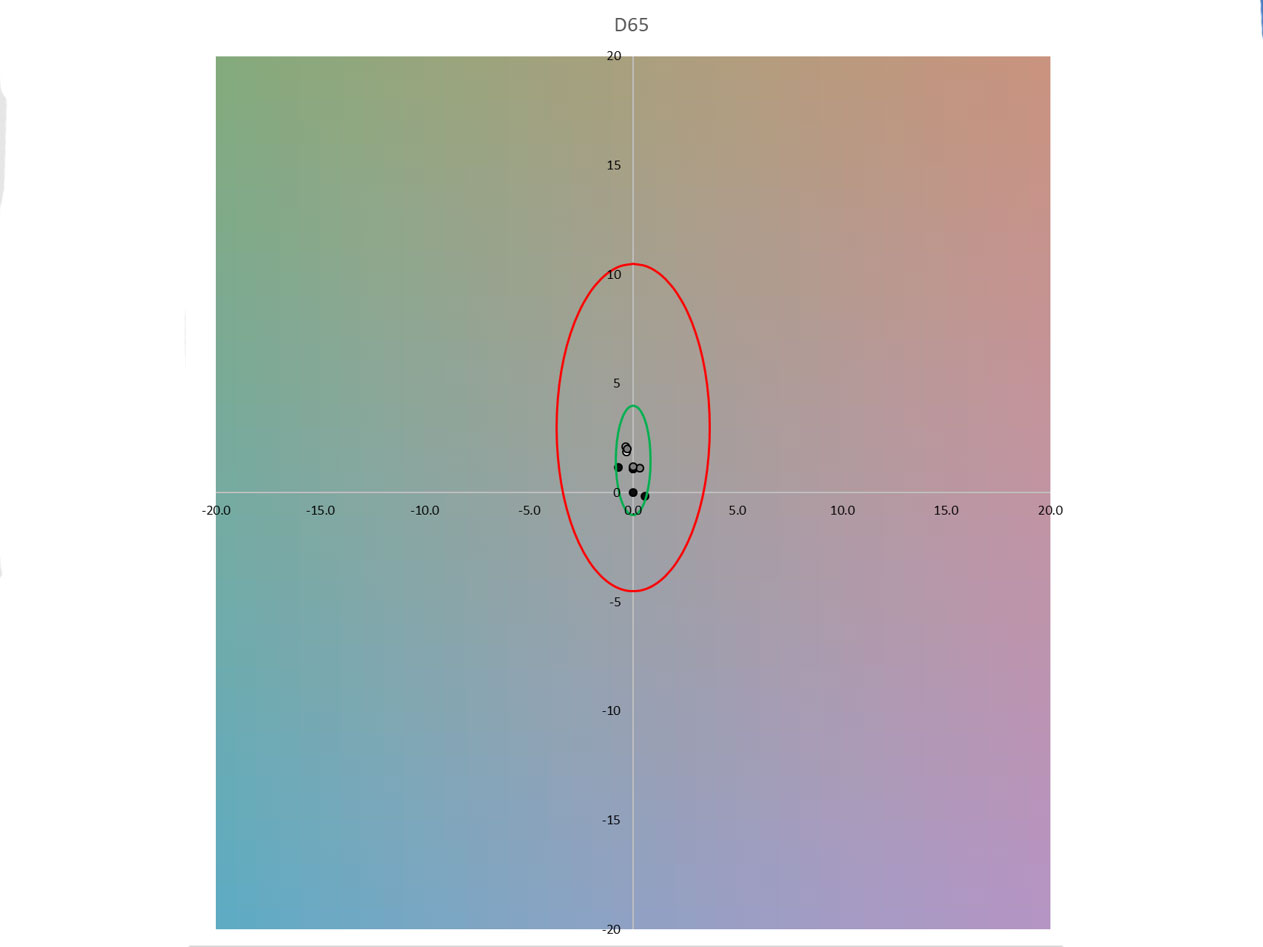

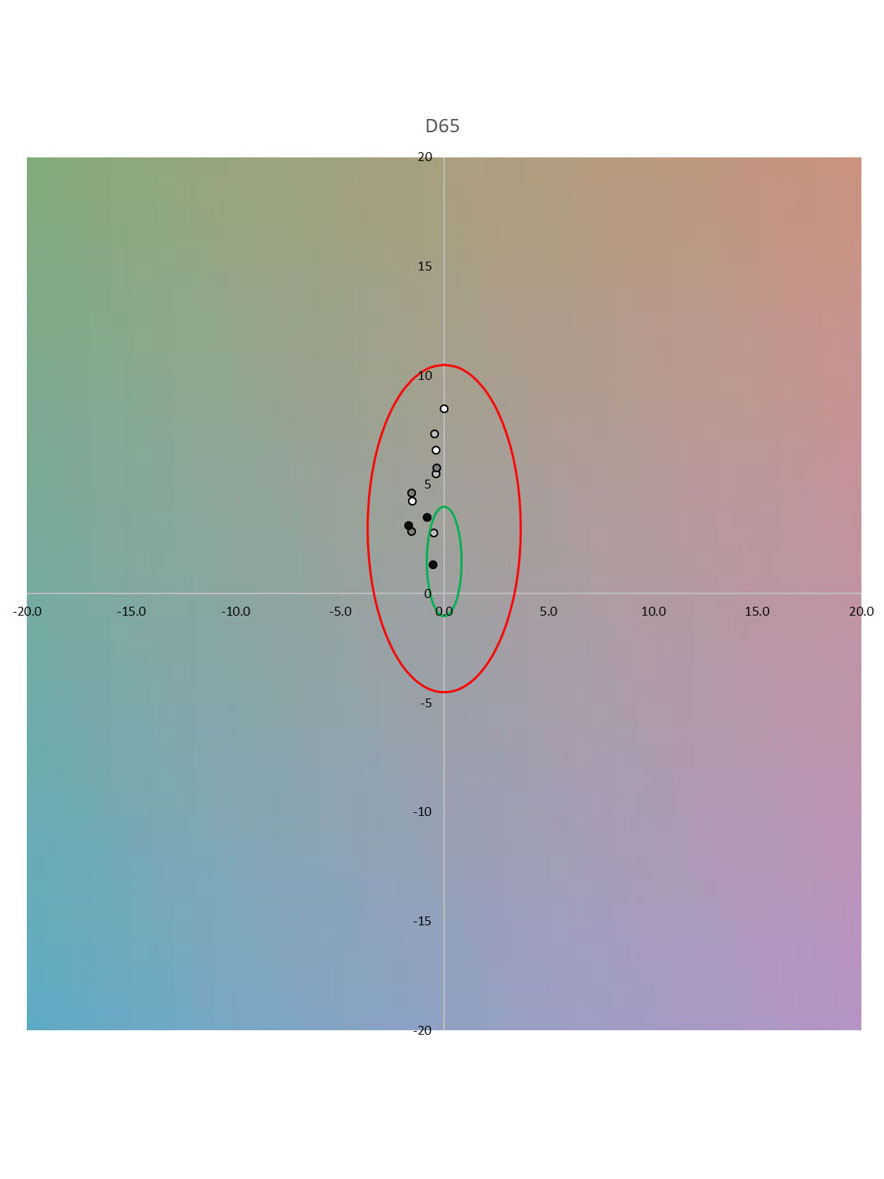

For our objective tests of color, we use a calibrated ColorChecker chart. After capturing our test images, we measure the tint and saturation of the 18 colored patches and check for white balance casts using the six neutral patches at the bottom of the chart, with results presented in an ellipsoid format. The best results for saturation, tint, and white balance are located inside the green ellipsoid; the worst results are plotted outside the red one.
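To make the white balance check on the neutral patches more concrete, here is a minimal Python sketch. It assumes the patch colors are available as mean 8-bit sRGB values and expresses any cast as the average CIELAB a* and b* of the neutral patches. The use of CIELAB, the function names, and the input format are assumptions made for illustration; the article does not describe how DXOMARK computes the values plotted in its ellipsoid graphs.

```python
import math

# Linear sRGB (D65) to CIE XYZ matrix and D65 reference white.
_SRGB_TO_XYZ = (
    (0.4124564, 0.3575761, 0.1804375),
    (0.2126729, 0.7151522, 0.0721750),
    (0.0193339, 0.1191920, 0.9503041),
)
_D65_WHITE = (0.95047, 1.0, 1.08883)


def _linearize(c):
    """Undo the sRGB transfer curve for one channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _lab_f(t):
    """Piecewise cube-root function used by the CIELAB definition."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def srgb_to_lab(rgb):
    """Convert an 8-bit sRGB triple to CIELAB (L*, a*, b*)."""
    lin = [_linearize(v / 255.0) for v in rgb]
    xyz = [sum(m * c for m, c in zip(row, lin)) for row in _SRGB_TO_XYZ]
    fx, fy, fz = (_lab_f(v / w) for v, w in zip(xyz, _D65_WHITE))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def white_balance_cast(neutral_patches):
    """Return mean a*, mean b*, and chroma over the neutral patches.

    Hypothetical helper: a perfectly neutral rendering gives values near 0;
    negative a* points toward green, positive b* toward yellow.
    """
    labs = [srgb_to_lab(p) for p in neutral_patches]
    a = sum(lab[1] for lab in labs) / len(labs)
    b = sum(lab[2] for lab in labs) / len(labs)
    return a, b, math.hypot(a, b)
```

For example, `white_balance_cast([(120, 130, 120)])` yields a negative a* value, which would correspond to a greenish cast on that patch.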

Test charts like the ColorChecker can include only a limited number of colors, which is why we use real-life scenes to complement our objective testing and expand the amount of data we capture and analyze.

As with exposure, objective color tests are in line with perceptual testing results most of the time. In the example below, you can see that most data points for the Samsung Galaxy S10+ in bright light are plotted within the green ellipsoid, meaning that the lab test images show neutral white balance. This is confirmed by the real-life samples from the DXOMARK perceptual database.

However, occasionally results from objective and perceptual testing do not fully align because the lab scene cannot cover all real-life scenarios. For example, the white balance graph for the Apple iPhone XS Max shows that greenish white balance casts are visible in bright light, but this is not always noticeable in real-life shots. The late-afternoon outdoor portrait shows a slightly warm but acceptable cast, and the image of the Eiffel Tower is quite neutral.

Mixed lighting situations are another complex scenario that many cameras find difficult to deal with, so using perceptual testing to broaden the scope of testing and include several mixed-lighting scenes is a good way of making sure DXOMARK results match the real-life experience.

Autofocus

We undertake our objective DXOMARK Autofocus tests in the lab using a custom-built setup that includes a Dead Leaves chart as the focus target, as well as a motorized refocus trigger that we synchronize with a digital camera trigger and a universal timer. We place the refocus trigger between the camera and the focus target to defocus between shots, then move the refocus trigger out of the way for the test itself. We program the refocus trigger to shoot after a delay of 500ms, and we repeat the same test in low-light conditions with a 2000ms delay.

The test is designed to measure how long a camera takes to acquire focus and how repeatable the focus result is. We perform these tests using several types of illuminants and at various light levels.
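As a rough illustration of how repeated focus trials could be summarized, the Python sketch below computes an average delay, the spread of sharpness across trials as a simple repeatability figure, and the share of trials judged in focus. The `FocusTrial` fields, the 0-100 sharpness scale, and the `sharp_threshold` default are hypothetical choices for this example, not DXOMARK's data format or scoring.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class FocusTrial:
    delay_ms: float   # hypothetical: time from shutter trigger to captured frame
    sharpness: float  # hypothetical: sharpness score of the frame, 0-100


def summarize_autofocus(trials, sharp_threshold=90.0):
    """Summarize repeated focus trials (assumed metrics, for illustration)."""
    if not trials:
        raise ValueError("at least one trial is required")
    delays = [t.delay_ms for t in trials]
    scores = [t.sharpness for t in trials]
    # Count trials whose sharpness reaches the assumed in-focus threshold.
    in_focus = sum(1 for s in scores if s >= sharp_threshold)
    return {
        "mean_delay_ms": mean(delays),
        "sharpness_spread": pstdev(scores),  # lower means more repeatable
        "in_focus_rate": in_focus / len(trials),
    }


trials = [FocusTrial(50.0, 95.0), FocusTrial(70.0, 96.0), FocusTrial(90.0, 80.0)]
summary = summarize_autofocus(trials)
```

A low `sharpness_spread` combined with a high `in_focus_rate` would indicate a repeatable focus system under the tested conditions; running the same summary per illuminant and light level keeps the conditions comparable.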

For our autofocus tests, perceptual testing allows us to cover additional shooting situations, with varying subject distances, lighting directions, and dynamic ranges, among other parameters.

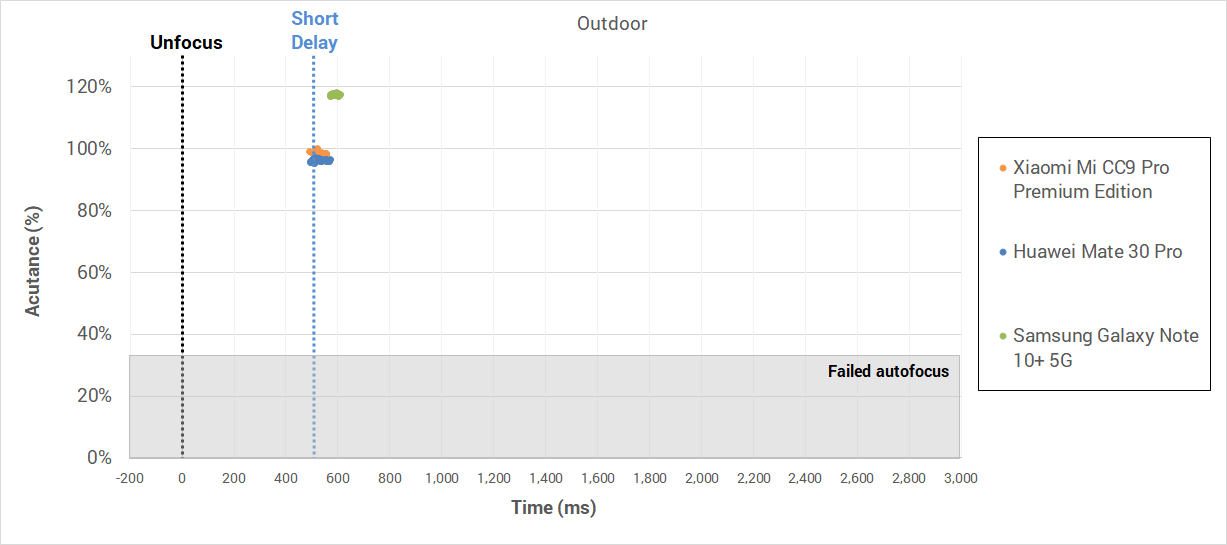

Let’s look at a few examples. Below you can see the objective autofocus test results for the Xiaomi Mi CC9 Pro Premium Edition, the Huawei Mate 30 Pro, and the Samsung Galaxy Note 10+ 5G in bright light.

As you can see, all three devices perform very well, producing sharp results with only a very minimal delay (less than 100ms) after triggering the shutter. Perceptual testing also allows us to detect those edge cases where the systems don’t function perfectly. For example, with the subjects at a longer distance from the camera, such as in the samples below, the Mi CC9 Pro Premium Edition has a tendency to focus on the background. The effect is slightly exacerbated by the device’s large image sensor and therefore comparatively narrow depth of field. The Mate 30 Pro and the Samsung Note 10+ both render the subjects sharp and in focus.

Errors can also occur at close distance. When capturing the scene below, the Xiaomi and Samsung cameras focus correctly on the subjects; interestingly, however, in this particular situation the Huawei Mate 30 Pro camera focused on the background rather than on the couple in the front. Overall, it’s fair to say that good results in the lab are a good indicator of overall autofocus performance, but failures can still occur in specific challenging use cases.

Other challenging scenarios include complex (and potentially moving) subjects, such as pets, in difficult high-contrast or backlit scenes, and group scenes, where depth of field comes into play and the focus point should be chosen to keep as many subjects in focus as possible.

The group shot below was captured with an Asus ZenFone 6, which focused on the person closest to the camera; as a result, the subject at the back of the group is out of focus. It would have been a better strategy to focus on the subject that is second-closest to the camera to keep as many elements as possible in focus. In the image on the right, captured with a Samsung Galaxy Note 10+ 5G, we can see that the focus system was confused by the very complex scene and focused on the brighter background instead of the pet in the foreground.

Texture and Noise

We also use the Dead Leaves chart for texture and noise measurements in the lab. We measure texture on the actual dead leaves pattern and measure noise on the surrounding gray level patches, taking measurements at light levels from 1 to 1000 lux and using a variety of light sources. However, as varied as these test conditions are, there still are plenty of use cases that are not covered—for example, texture in high-contrast scenes or on moving subjects, as well as noise on textured and colored image areas (to name a few).
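The noise measurement on a uniform gray patch can be illustrated with a simple signal-to-noise ratio. The Python sketch below is a generic formulation, assuming the patch is available as a flat list of positive pixel values; the article does not specify the exact noise metric DXOMARK uses, and the function name is hypothetical.

```python
import math
from statistics import mean, pstdev


def patch_snr_db(pixels):
    """Signal-to-noise ratio in dB for a nominally uniform gray patch.

    Assumes positive pixel values. The signal is the mean level and the
    noise is the standard deviation around that mean.
    """
    mu = mean(pixels)
    sigma = pstdev(pixels)
    if sigma == 0:
        return math.inf  # perfectly uniform patch: no measurable noise
    return 20 * math.log10(mu / sigma)


# Four sample values around a mean level of 100.
snr = patch_snr_db([100, 102, 98, 100])
```

A measure like this only applies to flat areas, which is why noise on textured or colored regions falls outside what the gray patches can capture.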

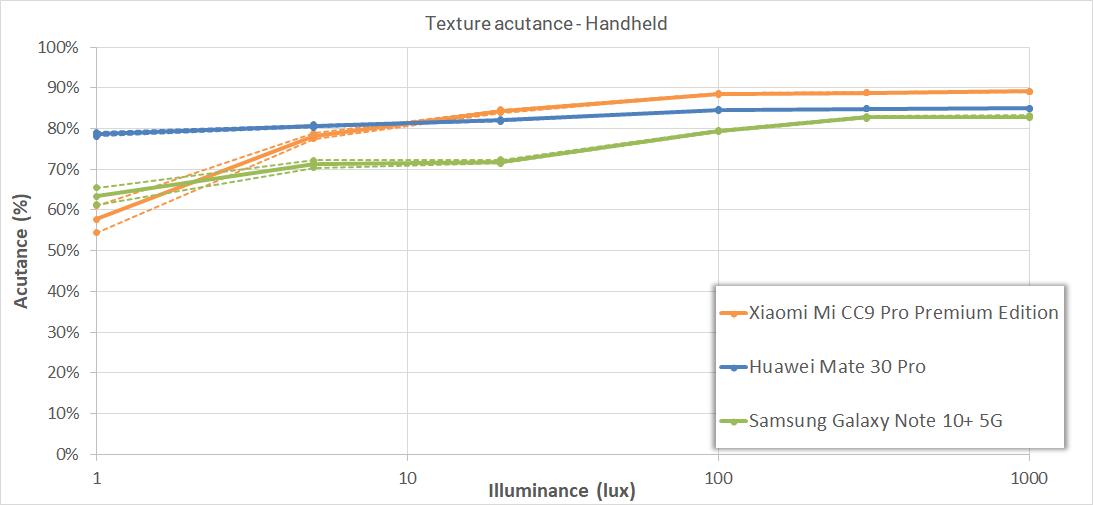

Let’s have a look at an example for texture evaluation. Our lab measurements show that the Xiaomi Mi CC9 Pro Premium Edition has higher levels of detail than the Huawei Mate 30 Pro and the Samsung Galaxy Note 10+ 5G in bright and medium light:

Looking at the real-life samples below, we can see that the Xiaomi device does indeed render noticeably better detail than the Huawei and the Samsung. However, the difference between the Mate 30 Pro and the Note 10+ 5G in bright light is bigger than the objective results suggest. In this instance, the objective test results give the right ranking of the comparison devices but, for the specific sample scene below, not the right scale of the differences between them. By complementing objective tests with perceptual evaluation, we can fine-tune the results and take into account analyses from a much wider range of scenes.

HDR scenes are another good example of a scene type where perceptual testing helps make test results more robust and reliable. Current objective tests for texture are geared towards low- to medium-contrast scenes. However, texture results for HDR scenes can be very different from those for lower-contrast scenes, which is why we use perceptual tests to complement objective tests. In the sample below, you can see that the Huawei P30 Pro captures much higher levels of detail on the face of the model in the low-contrast indoor shot than in the backlit high-contrast image.

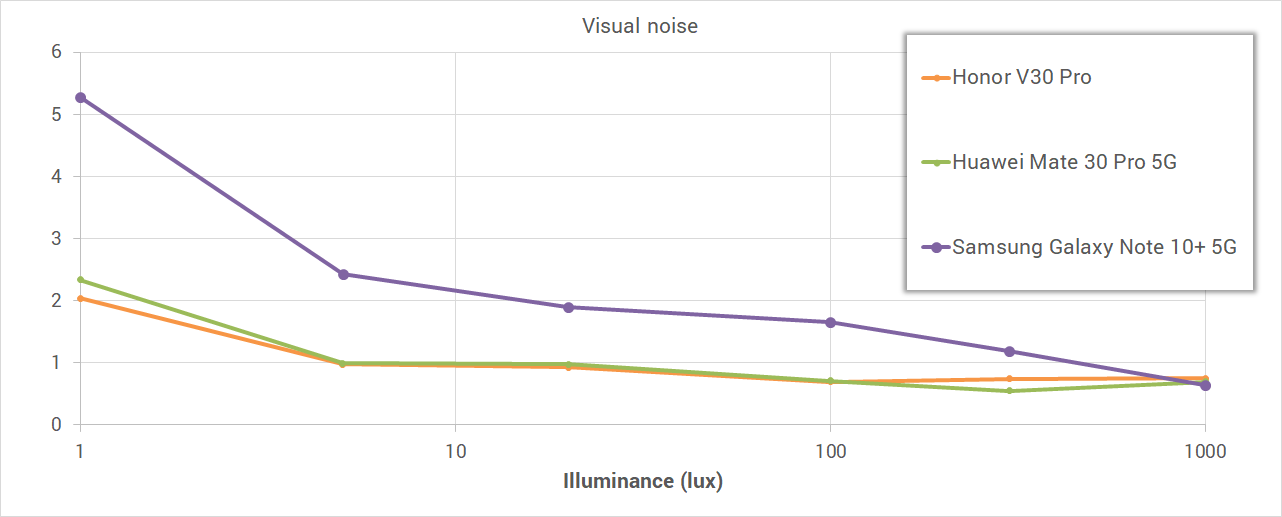

Similar situations exist for noise testing as well. The objective test results for noise below show that the Honor V30 Pro and Mate 30 Pro images have lower noise levels than the Samsung Galaxy Note 10+ 5G in almost all light conditions except very bright light.

The objective results are confirmed by many scenes in our perceptual database, such as the indoor shot below. The Honor and Huawei show similarly low noise levels in this scene, while the Samsung produces noticeably more noise.

All three devices show low noise levels in bright light, which again is in line with the objective test results. However, the Samsung camera has slightly more noise in the shadow areas of this high-contrast scene.

Moving subjects are another use case that is difficult to reproduce in the lab. In the image below, you can see that the Apple iPhone 11 Pro Max image shows stronger noise on the moving cyclist than on the static elements of the scene. There are multiple possible explanations for this, most of them likely linked to the need for faster shutter speeds to freeze the motion in the scene.

Conclusion

So what should you take away from this article? Well, principally, that objective testing is very accurate and efficient, but perceptual testing helps make image quality testing even more reliable and robust by expanding the number of test scenes and providing the ability to detect unexpected or thus far unknown camera behavior. It is this essential combination of objective and perceptual testing that ensures that our test results are as relevant as possible to smartphone users all over the globe.
