At DXOMARK we have watched over the years as smartphone cameras have gone from being a novelty to becoming the world’s most popular way of capturing photographs. In a keynote session at Electronic Imaging 2020, our CEO and CTO, Frederic Guichard, began with a historical perspective on the rise of smartphone photography and the technological advances that made it possible. He then showed how today’s smartphone cameras compare with current standalone digital cameras, and where each has strengths and weaknesses. Finally, he made the case for the distinct roles of smartphones and cameras, and speculated about how both are likely to evolve in the future.

In this article, we share his analysis, along with some of the images he used to illustrate the history, strengths, and weaknesses of both smartphone and standalone cameras.

There is no better illustration of the increased popularity of smartphones for photography than these shots of groups of photographers. Ten years ago they were full of people with various compact cameras and DSLRs. Now, almost all you see are smartphones.

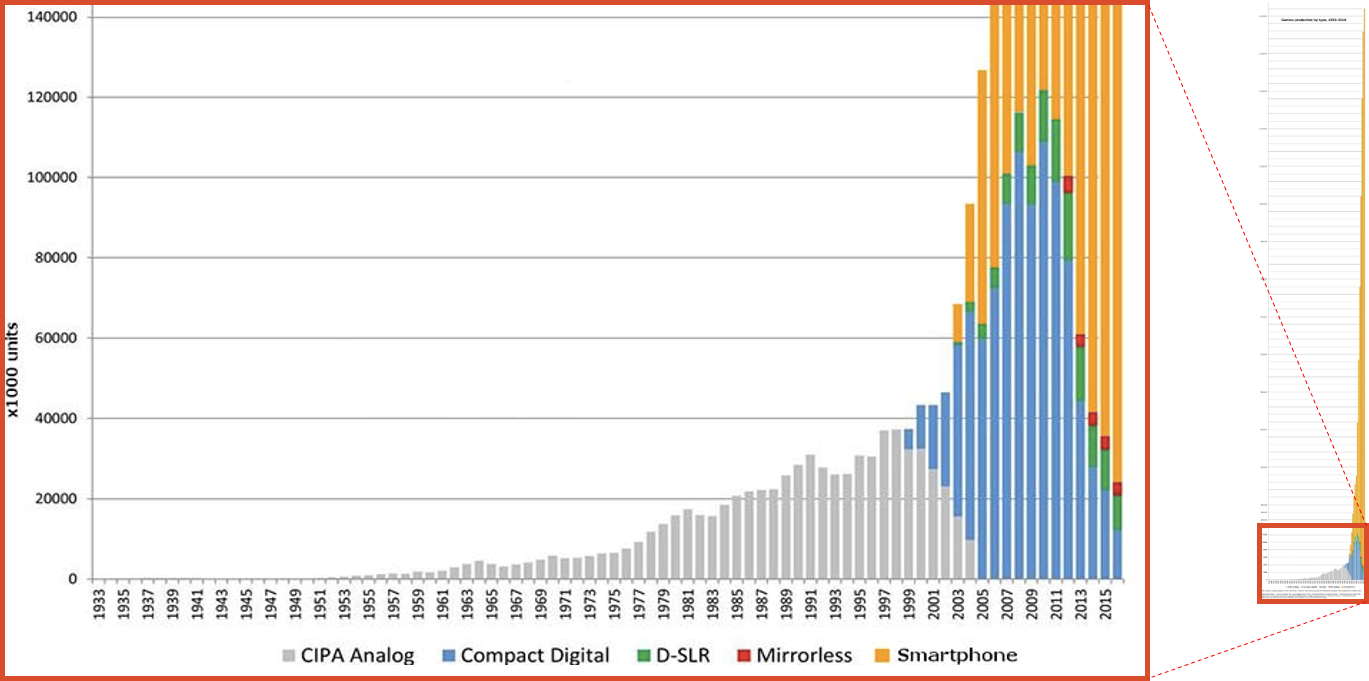

The crossover occurred at about the same time we introduced our DXOMARK protocol in 2012: by 2011, more than a quarter of all photographs were being taken with smartphone cameras. By 2015, over one trillion photos were being captured each year, the vast majority of them on smartphones.

The sheer number of photos taken on smartphones is one obvious result of their increasing market share overall. By 2013 they were outselling digital cameras of all kinds by a factor of more than 10 to 1. Initially, it wasn’t obvious that this transition would happen so quickly—and it certainly caught many camera makers flat-footed. But in hindsight it is straightforward to see what caused the rapid adoption of smartphones for photography.

Convenience and ease-of-use made the smartphone the top choice for photography

The first major factor that helped make smartphones the camera of choice for most people was simply that they became essential tools for daily life. As a result, nearly everyone had one, and had it with them all the time. As the famous saying goes (made even more famous by iPhone icon Chase Jarvis), “The best camera is the one you have with you.”

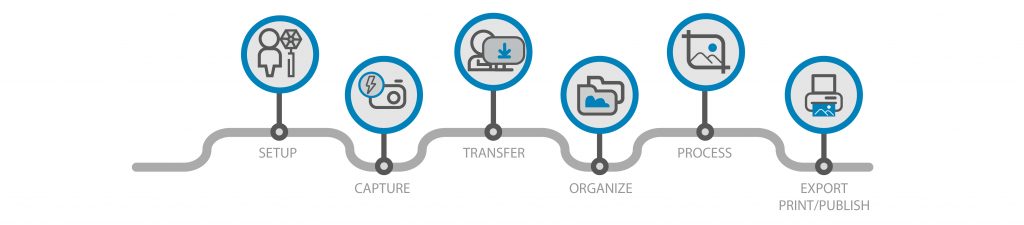

Just as importantly, smartphones revolutionized photographic workflow. Doing anything with photos captured on a traditional digital camera typically requires a lot of effort, and often a complicated set of steps:

With cloud-connected smartphones and increasingly intelligent cloud photo-sharing sites, it was no longer necessary to manually transfer your images to a computer, organize them by hand, and finally process and share them. Photos could be shared right after capture, with only a couple of taps and in about as many seconds. This was particularly true for the type of casual photography that is most popular with smartphone users. Typically, shots are taken with little or no setup, using the default settings chosen by the camera app. Post-processing is also usually minimal, allowing for quick sharing.

Quality followed quantity in smartphone photography

The rapid rise of smartphone photography got a lot of people more interested in their photographs and more demanding about capturing high-quality images. Smartphone makers responded by putting an increasing emphasis—and substantial investment—into improving their cameras and image processing systems.

Full-frame camera or smartphone image—can you tell?

In an increasing number of cases, it is hard to tell the difference between a photo taken with a smartphone and one of the same scene captured with a full-frame camera. The simple clues that used to give smartphones away are no longer always reliable. Below is a pair of images, one taken with a Google Pixel 3 and the other with a Sony a7R III. Can you tell which was taken with a phone?

First, it’s worth noting that both images are quite impressive for a night scene. We expect that from a full-frame camera, but it is an impressive achievement for a smartphone. Looking closer, we notice some loss of detail in the water in the image on the left. Is it natural foreground blur from the optics of a full-frame camera, or is it motion blur from a smartphone? The image on the right preserves detail remarkably well throughout, even in very low light, so it would be easy to conclude that it couldn’t possibly have come from a smaller-sensor smartphone.

In fact, the image on the left is from the Sony a7R III, and the one on the right is from the Pixel 3. Google used computational imaging to automatically combine several frames into a very impressive result. That it can be this hard to tell the two images apart is a sign of how good smartphone cameras have become in many situations. Results like these prompted Guichard to dig deeper into how this became possible, and where both technologies will go from here.

Full-frame camera or smartphone: Image quality questions

The impressive improvement in smartphone cameras leads to three important questions that Guichard addressed in the remainder of his keynote speech:

- How have today’s smartphones managed to close the image quality gap with digital cameras?

- Are today’s smartphones actually better than digital cameras?

- If so, is there still a role for digital cameras?

Bridging the gap: Smartphones caught up by conquering noise

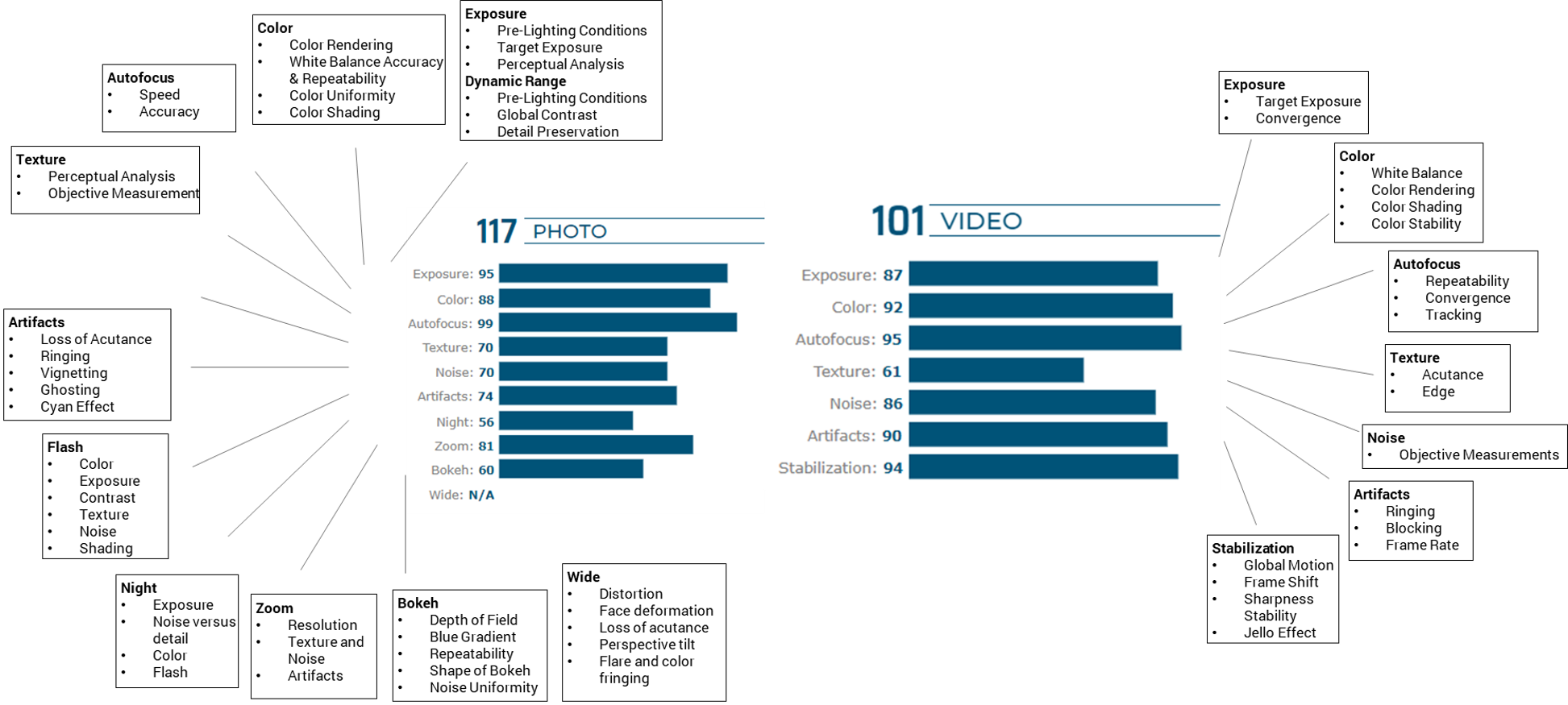

There are dozens of axes along which image quality can be measured, and hundreds of attributes. When we test a camera or sensor at DXOMARK, we need over 1,600 images captured in a variety of lab and natural environments to get a good measurement of its performance on the most important of these:

Many image quality issues that detract from a camera’s performance are fairly straightforward to correct using automated processing. That includes many kinds of optical distortion, lens shading, and even poor tonal range, as the following examples show:
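Geometric distortion is a good example of a defect that becomes easy to fix once it is modeled. Here is a minimal sketch. It assumes the common polynomial radial model r_d = r(1 + k1·r² + k2·r⁴) and uses hypothetical calibration coefficients `k1` and `k2`; the article does not say which model any particular phone uses. For each pixel of the corrected output, the code computes where the lens would have imaged that point and copies the captured pixel from there.

```python
def distort_point(x, y, cx, cy, k1, k2, norm):
    """Map an ideal (undistorted) pixel position to its distorted position.

    Polynomial radial model: r_d = r * (1 + k1*r^2 + k2*r^4), with r measured
    from the optical center (cx, cy) and normalized by `norm`.
    k1 and k2 are hypothetical calibration coefficients.
    """
    dx, dy = (x - cx) / norm, (y - cy) / norm
    r2 = dx * dx + dy * dy
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return cx + dx * scale * norm, cy + dy * scale * norm


def undistort(image, k1, k2):
    """Build a corrected image (list of rows) by inverse mapping.

    For every output pixel, find where the lens put that point in the
    captured image and copy the nearest captured pixel. Positions that
    fall outside the frame are filled with 0.
    """
    h, w = len(image), len(image[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    norm = max(cx, cy) or 1.0  # avoid dividing by zero for a 1x1 image
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = distort_point(x, y, cx, cy, k1, k2, norm)
            ix, iy = round(sx), round(sy)
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = image[iy][ix]
    return out
```

Nearest-neighbor sampling keeps the sketch short. A production pipeline would interpolate between captured pixels, and it could combine distortion correction with other per-lens corrections in a single pass.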

Lens shading, a form of vignetting, is another image defect that can be fixed automatically once a camera system has been carefully modeled. The very visible lens shading in the original image below on the right is simple to correct right in the smartphone, since the phone’s software knows which lens is in use and how to compensate for it.
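One simple way to picture “carefully modeling” shading is a flat-field gain map. During calibration, you photograph a uniformly lit target once and record how much darker each position comes out. Every later capture is then multiplied by the inverse. The sketch below assumes a single-channel image stored as a list of rows and a hypothetical calibration frame `flat`. Real pipelines typically store a smooth, per-color-channel map rather than a full-resolution one.

```python
def shading_gain_map(flat):
    """Per-pixel gains from a calibration shot of a uniformly lit target.

    The brightest position (usually the center) gets gain 1.0; darker
    positions get proportionally larger gains. Assumes no zero values in `flat`.
    """
    peak = max(max(row) for row in flat)
    return [[peak / v for v in row] for row in flat]


def correct_shading(image, gains):
    """Multiply each pixel by its gain so the corners match the center."""
    return [[p * g for p, g in zip(img_row, gain_row)]
            for img_row, gain_row in zip(image, gains)]


# Calibration frame: corners receive half the light of the center.
flat = [[50, 80, 50],
        [80, 100, 80],
        [50, 80, 50]]
gains = shading_gain_map(flat)

# A uniform gray scene captured through the same lens shows the same falloff.
captured = [[25, 40, 25],
            [40, 50, 40],
            [25, 40, 25]]
print(correct_shading(captured, gains))
```

Because the phone maker knows exactly which lens and sensor are installed, a map like this can be measured once and applied to every photo, which is why shading is among the easier defects to remove.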

Even chromatic aberration, a type of fringing effect that is frequently seen in smartphone cameras, has become something that can be largely corrected automatically right in the smartphone:

All of these automatic corrections require very precise measurement of the characteristics of each combination of optics and sensor. Smartphone makers can do that because they provide the complete system, including the sensor, optics, and image processing pipeline. They also often have access to additional information, such as the distance to the primary subject or even a depth map of the entire scene. However, one area that has proven remarkably stubborn in resisting improvement is image noise.

Noise represents the toughest challenge

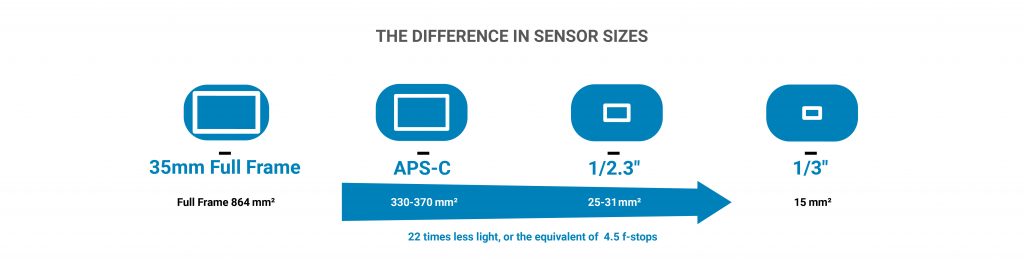

Early smartphones suffered in both resolution and noise due to their small sensor size. Advances in sensor technology quickly began to close the resolution gap with larger cameras, but reducing noise remained an elusive challenge for the smaller-sensor cameras in smartphones. The amount of noise is directly related to the overall amount of light captured in an image (which Guichard describes as the photon flow): fewer photons mean more noise. Since a typical smartphone sensor might receive less than one-twentieth of the photons of a 35mm full-frame sensor for the same exposure time, it is much more prone to noise. That difference in sensor size is equivalent to a 4.5EV (f-stop) deficit that has to be overcome.
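The link between photon count and noise comes from photon shot noise. Photon arrivals follow Poisson statistics, so a pixel that collects N photons on average has a noise standard deviation of √N and a signal-to-noise ratio (SNR) of √N. The short calculation below is a sketch. The photon count is an illustrative assumption, not a figure from the keynote. It shows how collecting one-twentieth of the light translates into stops and into SNR.

```python
import math


def stops_between(light_a, light_b):
    """Exposure difference in stops (EV) between two amounts of collected light."""
    return math.log2(light_a / light_b)


def shot_noise_snr(mean_photons):
    """SNR of a pixel limited only by photon shot noise.

    Poisson statistics give noise (standard deviation) sqrt(N),
    so SNR = N / sqrt(N) = sqrt(N).
    """
    return mean_photons / math.sqrt(mean_photons)


# Illustrative numbers (assumed): the same part of the scene delivers
# 10,000 photons to a full-frame sensor and 1/20 as many to a phone sensor.
full_frame_photons = 10_000
phone_photons = full_frame_photons / 20

print(f"gap: {stops_between(full_frame_photons, phone_photons):.2f} EV")
print(f"SNR full frame: {shot_noise_snr(full_frame_photons):.1f}")
print(f"SNR phone:      {shot_noise_snr(phone_photons):.1f}")
print(f"light ratio for a 4.5 EV gap: {2 ** 4.5:.1f}x")
```

A ratio of exactly 20 is log2(20), about 4.3 stops, and it lowers the shot-noise SNR by √20, roughly 4.5 times. A 4.5 EV gap corresponds to a light ratio of about 22.6, which fits the “less than one-twentieth” figure.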

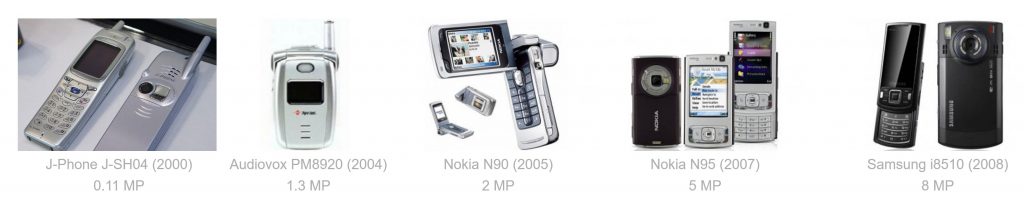

2003–2013: Better technology helped smartphone cameras overtake compact cameras

As the camera and its image quality became a major selling point for smartphones, manufacturers began investing heavily in technology to bridge that 4.5EV gap. For starters, they innovated both by using larger sensors with higher resolution, and by improving their image capture and processing. As the result of these “resolution wars,” in fewer than 10 years—from 2000 to 2008—smartphone sensor resolution increased by more than a factor of ten.

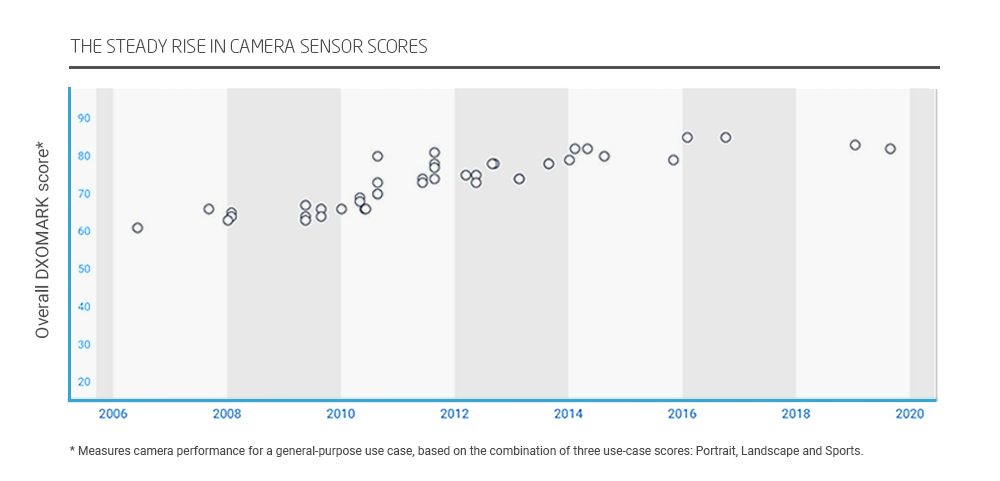

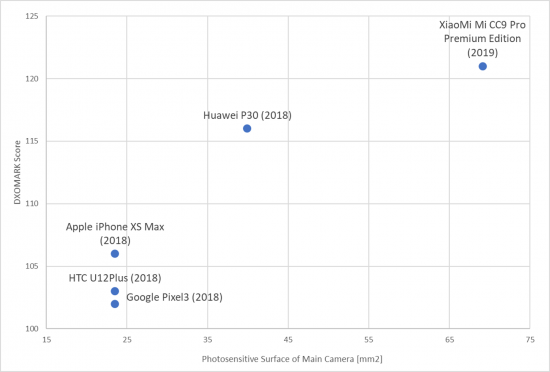

Amazingly, despite the necessarily smaller pixel sizes of the higher-resolution sensors, newer smartphone models managed to eclipse older ones in both sensitivity and dynamic range. Intriguingly, that was only partially because of improvements in sensor technology. Using the results of our testing of APS-C sensors in our labs at DXOMARK over that time as a baseline, we can see that there was roughly a 1.3EV gain in performance for a given sensor size:

By contrast, smartphone image quality increased by over 4EV. So increased sensor resolution and sensitivity were only part of what helped smartphones overtake compact cameras in image quality. An even larger factor was the increased computing power of mobile devices, and the resulting improvements in image processing. Over the same period in which sensors improved by about 1.3EV, digital image processing improved results by around 3EV, thanks to an increase of about 100x in processing power and to new algorithms.

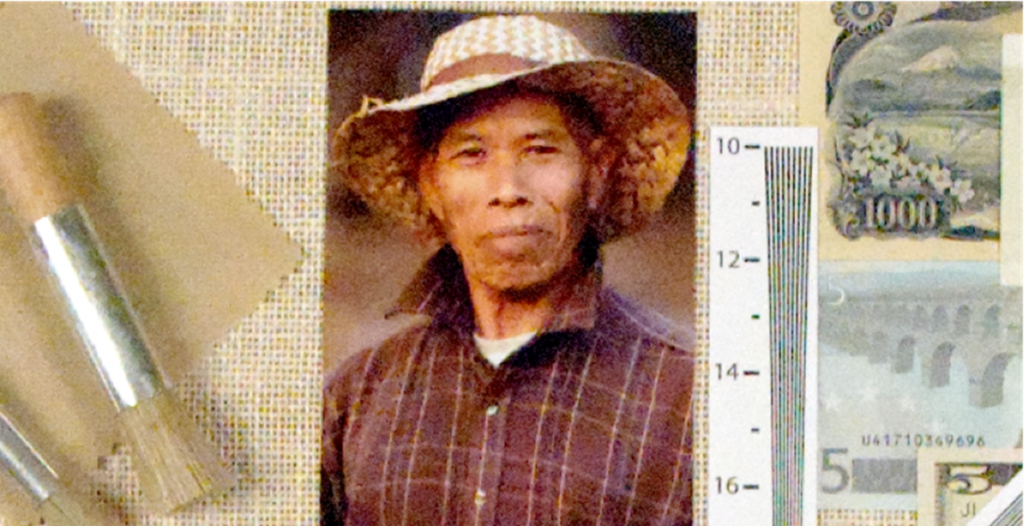

To illustrate these improvements in image processing, Guichard processed a RAW file from his first DSLR—a Nikon D70s. The series of images he presented showed how much image processing pipelines improved over the years after he captured the original image in 2005. Advanced processing techniques developed for use in post-processing RAW files on the computer rapidly found their way into smartphones:

These improvements in image processing account for the roughly 3-stop improvement in smartphone image quality over their first decade of existence. Overall, the combination of around 1.3EV from improvements in sensor technology with the 3EV gain from post-capture technology meant that image quality for a given camera size improved by roughly 4 to 4.5 stops over the decade. The result was that a 2013 smartphone-sized sensor became capable of producing image quality similar to that of an APS-C DSLR from a decade earlier.
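Because stops are logarithmic, the keynote’s rough figures combine by simple addition. About 1.3 EV from sensors plus about 3 EV from processing gives 4.3 EV. That is the same as multiplying the effective light by 2^4.3, roughly 20 times, which is close to the 4.5EV handicap described earlier. The few lines below just make that bookkeeping explicit. The inputs are the keynote’s approximate estimates, not new measurements.

```python
# Rough per-decade gains from the keynote, in stops (EV).
gains_ev = {"sensor technology": 1.3, "image processing": 3.0}

# EV gains add; because each stop doubles the effective light,
# the equivalent light ratios multiply.
total_ev = sum(gains_ev.values())
light_equivalent = 2 ** total_ev

for source, ev in gains_ev.items():
    print(f"{source:>17}: {ev:.1f} EV (about {2 ** ev:.1f}x light)")
print(f"{'total':>17}: {total_ev:.1f} EV (about {light_equivalent:.0f}x light)")
```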

2013: Smartphone photography becomes a mass phenomenon

By about 2013, helped along by improvements in image quality and by growth in smartphone sales, smartphone photography rapidly became the most popular way to capture images.

2013 to the present: Bridging the gap – turning the tables on DSLRs?

While smartphones spent their first decade catching up to earlier DSLR models and competitive compact cameras, innovation definitely didn’t stop there. Not long after smartphones began to surpass compact cameras for many use cases, the next obvious question was, “Can they also surpass DSLRs and their newly evolved full-frame mirrorless competitors?” That battle began in earnest between 2013 and 2015, so we’ll look at how those technologies have evolved since then.

During those years, smartphones continued to make great strides in image quality, despite a slowdown of progress in basic sensor and optical technology. For example, look at these crops from a standard target image taken with five generations of iPhone:

If progress didn’t come from better sensors and optics, how was it achieved? One way to improve image quality and reduce noise was to increase exposure time. However, simply leaving the shutter open longer causes a number of issues. First, if the camera isn’t on a tripod, camera motion becomes a problem. To address it, smartphone makers began deploying more sophisticated optical stabilization systems. However, a stabilization system by itself doesn’t help with the second issue: motion of the subject.

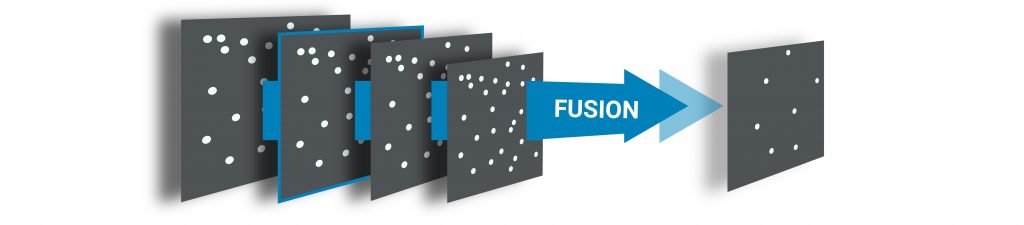

Going beyond image stabilization, smartphone makers also began stacking multiple captures using computational imaging. With sufficiently clever algorithms, that technique allows the creation of lower-noise images with less subject motion. The combination of these two innovations was a major factor in improving image quality on smartphones over the last 5-6 years—but it was only made possible because of the greatly increased processing power of modern smartphones.

Fusing stacked images effectively requires sophisticated software to avoid introducing artifacts, including ghosting. Fortunately, improved algorithms and faster processors have allowed the technique to improve rapidly, which is reflected in much better low-light image quality:
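Here is a minimal sketch of the fusion idea. It assumes the frames are already aligned, although real pipelines spend much of their effort on alignment. It uses a simple per-pixel rule that is our own illustration, not any vendor’s algorithm. Each pixel is averaged across the burst, but only frames whose value stays close to a reference frame are included, so a subject that moved does not smear into a ghost. If the noise in each frame is independent, averaging N frames lowers the noise standard deviation by about √N.

```python
import random
import statistics


def merge_burst(frames, ref_index=0, threshold=20.0):
    """Merge aligned frames (each a list of rows) into one lower-noise image.

    For every pixel, samples that differ from the reference frame by more
    than `threshold` are treated as subject motion and left out of the
    average, which suppresses ghosting. `threshold` is a hypothetical
    tuning value in the image's pixel units.
    """
    ref = frames[ref_index]
    h, w = len(ref), len(ref[0])
    merged = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            r = ref[y][x]
            # The reference sample always passes, so the list is never empty.
            samples = [f[y][x] for f in frames if abs(f[y][x] - r) <= threshold]
            merged[y][x] = statistics.fmean(samples)
    return merged


# Demo: 8 noisy captures of a flat gray patch (true value 100, noise sigma 5).
random.seed(0)
burst = [[[100 + random.gauss(0, 5) for _ in range(32)] for _ in range(32)]
         for _ in range(8)]
single_noise = statistics.pstdev([v for row in burst[0] for v in row])
merged = merge_burst(burst)
merged_noise = statistics.pstdev([v for row in merged for v in row])
print(f"noise: single frame {single_noise:.2f}, merged {merged_noise:.2f}")

# Ghost check: a bright object present in only one frame is rejected.
print(merge_burst([[[10]], [[10]], [[200]]]))
```

The fixed threshold is the weak point of this sketch. If it is set too low, real signal is thrown away and noise returns. If it is set too high, moving subjects leak in as ghosts. That trade-off is one reason fusion quality depends so heavily on algorithm sophistication and available processing power.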

This dramatic improvement in smartphone cameras, driven by computational imaging and better processors, has been made possible by the devices’ enormous popularity and the large investment in innovation that this popularity lets smartphone makers afford. Over time, all this has allowed smartphones to improve much faster than their physical limitations would otherwise have permitted.

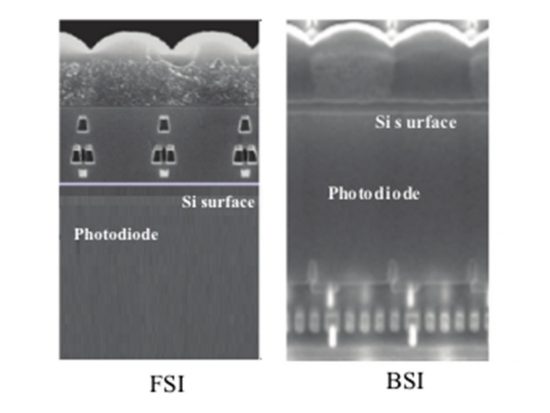

However, in addition to processing improvements, there has been one fundamental hardware innovation that has played an important role in increasing image quality—larger sensors in thin phones. It wasn’t thought possible to increase the sensor size in a smartphone without increasing the thickness of the phone—something manufacturers dread. But the invention of Back-Side Illuminated (BSI) sensors has allowed larger sensors without adding thickness (Z-height) to the phone.

By placing photosites closer to the surface, BSI sensors are able to gather light from more directions. That results in multiple important benefits. First, apertures can be larger, which means more information can be captured in a given exposure, which in turn means less noise in captured images. Second, the lens can be placed closer to the sensor, enabling the use of larger sensors without increasing the thickness of the phone. Finally, flatter lenses can be used, which allows more flexibility in adding additional optical elements and creating longer effective focal-length lenses—also without causing thicker phones.

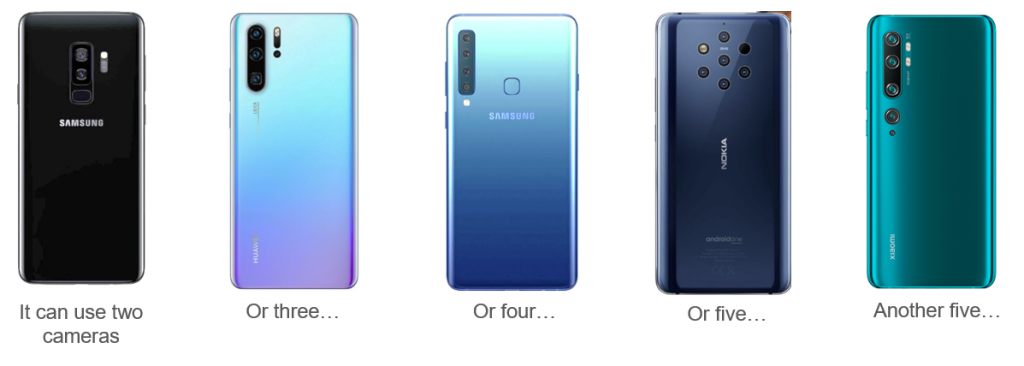

BSI sensors weren’t the only trick smartphone makers had up their sleeve. They also started using more than one main camera module. Since individual camera modules are so small, more than one of them can fit on the back of a phone. Initially the plan was to use multiple cameras to gather more light and create better images. However, various technical challenges have led smartphone makers to use additional cameras primarily for optical zoom and for specialized shooting modes such as black and white, as well as bokeh effects. We’ve seen flagship phones go from a single main camera module to as many as five cameras.

Of course, DSLR makers haven’t been standing still either. So Guichard next took a look at how both technologies have progressed since our 2005 Nikon D70s baseline.

Sizing up the competition: Where are smartphones when compared to today’s modern DSLRs?

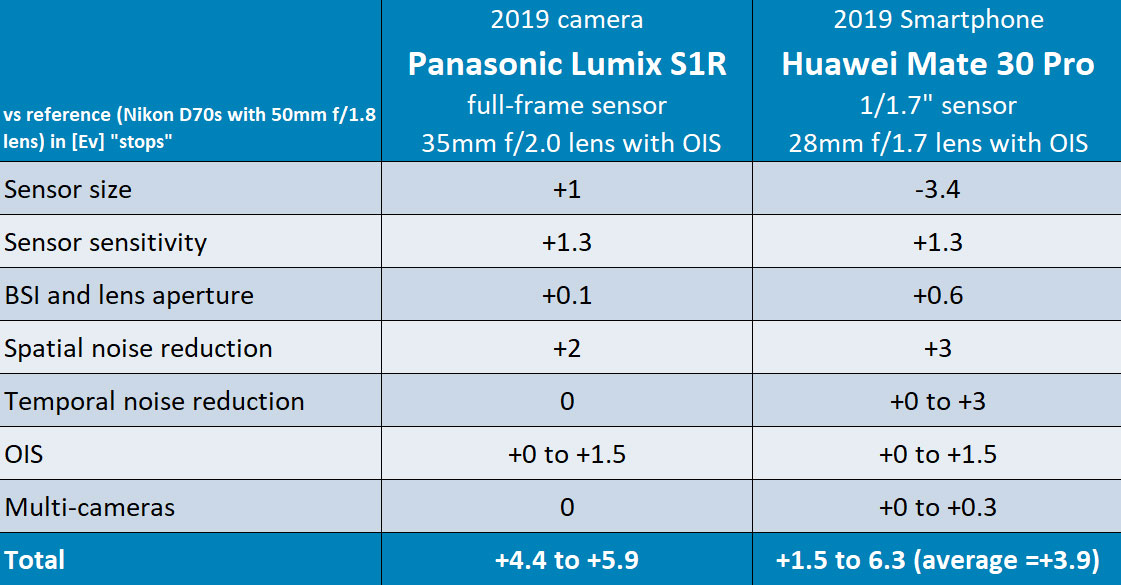

Given all the improvements to smartphone cameras over the last few years, it is fair to ask how they compare to DSLRs and full-frame mirrorless cameras when it comes to image quality. To do that, Guichard constructed a model of how both DSLRs and smartphones have improved since his 2005 Nikon D70s. He categorized the various improvements and provided a rough estimate of the number of f-stops each was able to contribute to better image quality:

The total gain in f-stops shown in the table above is an estimate of how the overall image quality for a 2019 full-frame camera and a 2019 smartphone compares to the baseline of the 2005 Nikon D70s. (Some items have ranges because they are more effective in some situations than others.)

From the table, we can see that in some cases the image quality from a smartphone can actually be better than that from a DSLR. But we can also see that smartphone results are much less consistent, and therefore it isn’t possible right now to trust our smartphones to always provide a quality image.

Testing the image quality of a full-frame camera versus smartphones

To validate the noise results shown above, and to benchmark other important image quality attributes to see how a full-frame mirrorless camera compares with modern smartphones, DXOMARK tested a current model, the Panasonic Lumix S1R, using the same extensive test methodology it employs for smartphones. Guichard then compared the results to several current flagship smartphones.

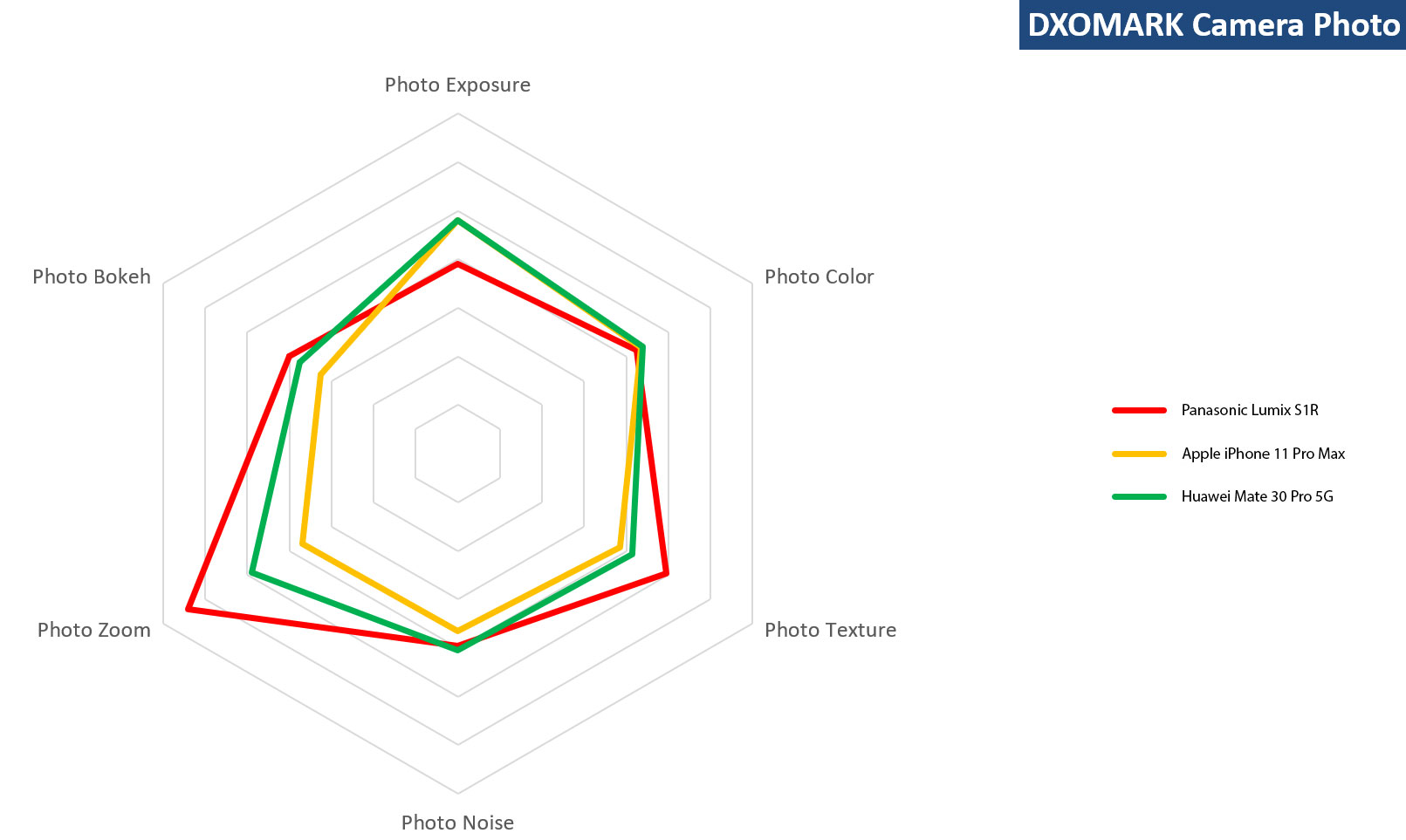

For relative scoring, Guichard used some of the Photo sub-scores from the DXOMARK Camera test suite:

Eyeing the results, it’s clear that the comparison comes up with a split decision. Let’s take a look at some of the specific test images to get an idea of the strengths and weaknesses of the mirrorless cameras and the smartphones. (The smartphones used in each comparison were the ones that fared best in that particular test.)

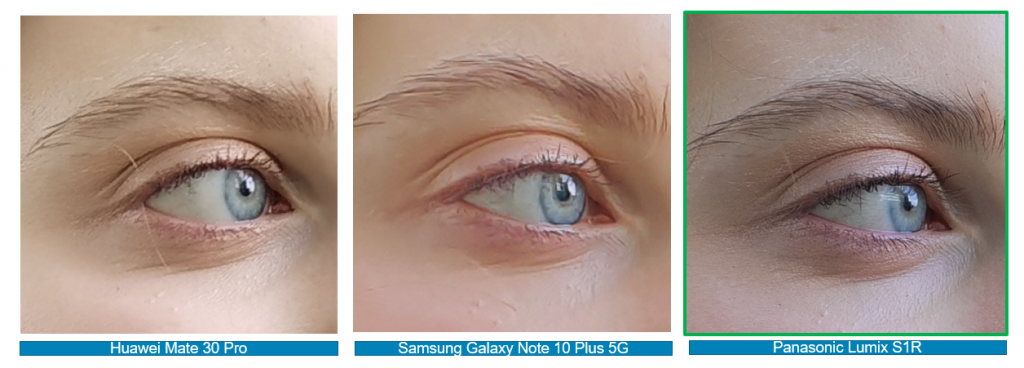

Testing detail preservation / texture: Guichard looked at sample images shot in both well-lit and night-time conditions, since low-light image capture has traditionally been a weakness of smartphone cameras. First we show the full image to give you a sense of context, and then a tight crop of the woman’s eye:

Using another set of tight crops, Guichard showed that smartphones have now also gotten very good at preserving textures, even in night scenes. Again, first we’ll show the full scene for reference:

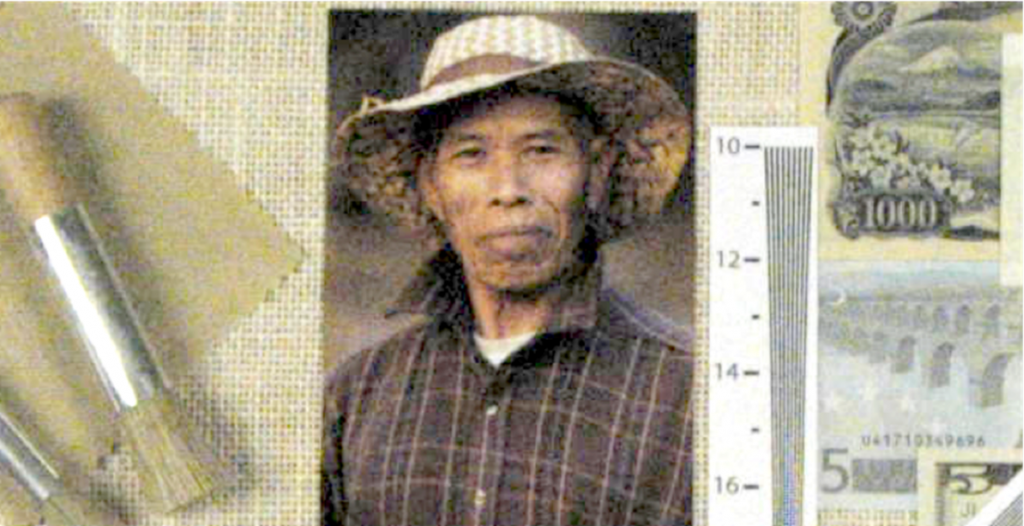

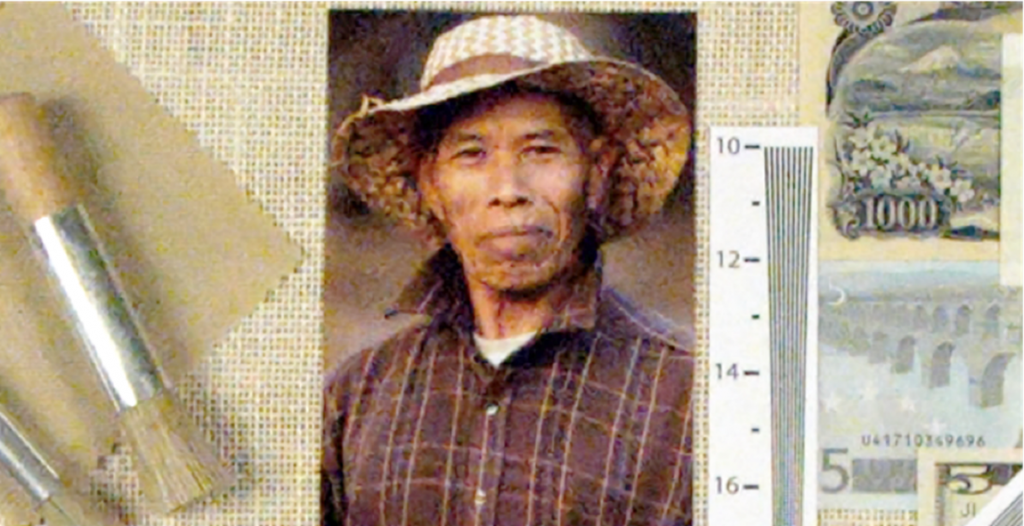

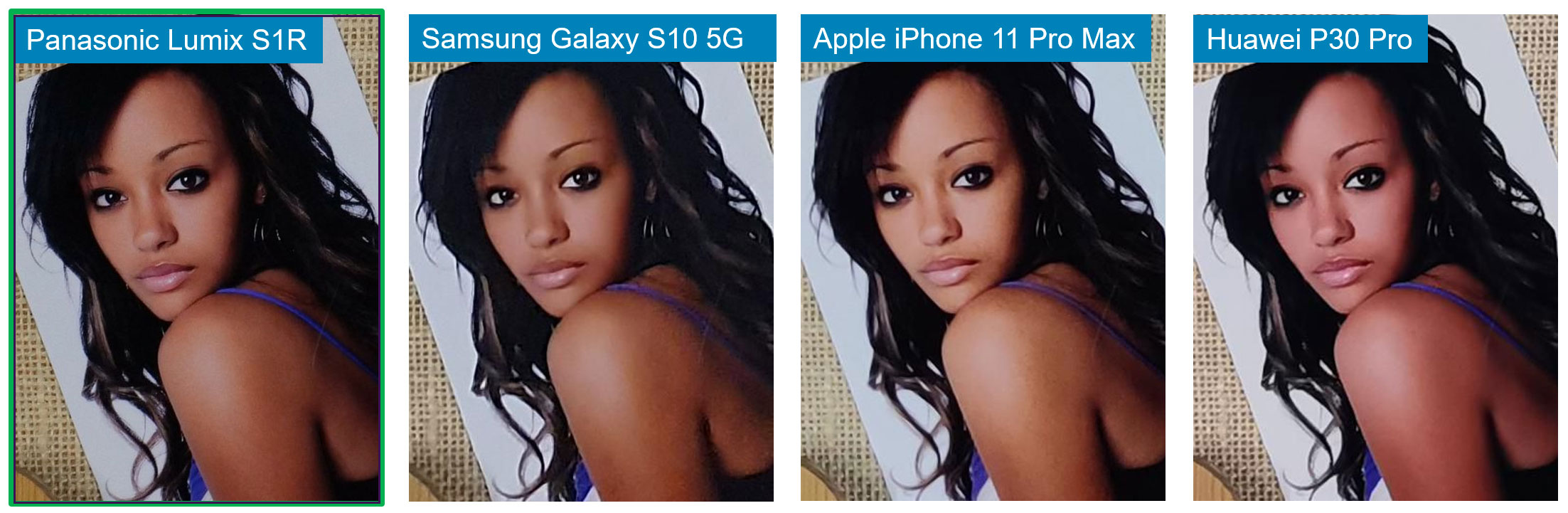

Testing noise: The native capability of the larger-sensor full-frame camera still puts it on top in this set of tight crops from a standard DXOMARK indoor test scene, but by a remarkably thin margin, especially given how much smaller the smartphone sensors are. These crops agree with the bottom-up comparison in our table above. First we’ll show the entire lab test scene, followed by illustrative cropped sections:

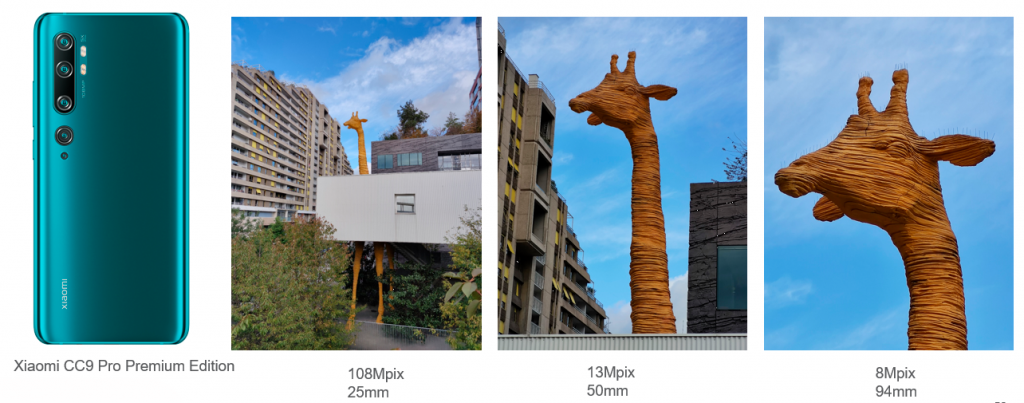

Testing zoom: For the Zoom comparison, Guichard chose the multi-camera module Xiaomi CC9 Pro Premium Edition. Its two telephoto cameras give it the best long focal-length zoom of any smartphone DXOMARK has tested:

In this case, the Xiaomi’s super-telephoto is compared with a 35mm lens on the mirrorless camera. This comparison ignores the wide range of telephoto lenses available to the camera’s owner. As the comparison images below show, even without a dedicated telephoto lens the Panasonic still has an edge in Zoom performance, but the Xiaomi does a remarkably good job considering the tiny size of its camera modules.

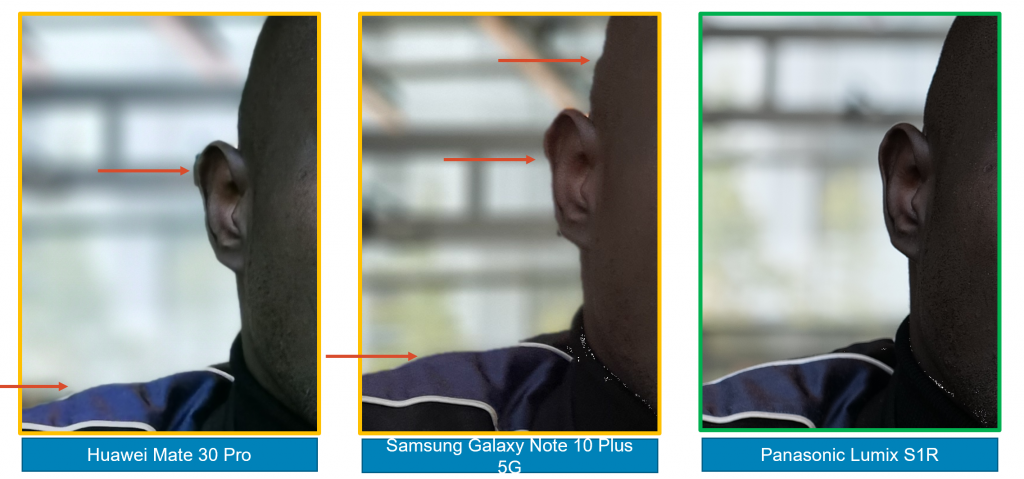

Testing bokeh: Another area where full-frame cameras have traditionally had the edge over smartphones is bokeh. The shallow depth of field that is possible with a larger sensor, and the optically-determined bokeh effect in out-of-focus areas have made larger-sensor cameras a must-have for portrait photographers. However, smartphones have begun using their computing power and additional sensor technology to calculate depth maps for portrait images and to synthesize bokeh effects to mimic the performance you’d get with a purely optical system.
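Synthetic bokeh can be sketched as a depth-dependent blur. Pixels near the chosen focus depth stay sharp, and the blur radius grows with distance from that plane. The sketch below is a one-dimensional toy: a single row of pixels with a matching row of depth values. It uses a box blur whose radius is a hypothetical linear function of the depth difference. Phone implementations are far more elaborate, and this is not any manufacturer’s method. The toy also shows why depth errors matter: a pixel assigned the wrong depth gets the wrong amount of blur.

```python
def blur_radius(depth, focus_depth, strength=2.0, max_radius=4):
    """Blur radius in pixels, growing with distance from the focal plane.

    `strength` and `max_radius` are hypothetical parameters of this toy model.
    """
    return min(max_radius, round(abs(depth - focus_depth) * strength))


def synthetic_bokeh_row(pixels, depths, focus_depth):
    """Apply a depth-dependent box blur to one row of pixels."""
    n = len(pixels)
    out = []
    for i in range(n):
        r = blur_radius(depths[i], focus_depth)
        lo, hi = max(0, i - r), min(n, i + r + 1)
        window = pixels[lo:hi]
        out.append(sum(window) / len(window))
    return out


# Left half: in-focus subject at depth 1. Right half: background at depth 3.
row = [0, 100, 0, 100, 0, 100, 0, 100]
depth = [1, 1, 1, 1, 3, 3, 3, 3]
print(synthetic_bokeh_row(row, depth, focus_depth=1))
```

The subject pixels come through unchanged, while the background texture is smoothed out. Note that the first background pixel’s window also reaches into the sharp subject. This is a small-scale version of the edge halos that real systems work hard to avoid.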

Smartphones do increasingly well at processing simple portraits such as the woman on the bridge (above). That scene is “simple” because the foreground is cleanly separated from the background. More complex situations, however, can still produce unpleasant artifacts. In the example below, where we first show the entire image and then a crop around an area where the bokeh effect is important, you can see that the smartphones did not properly identify the man’s ear:

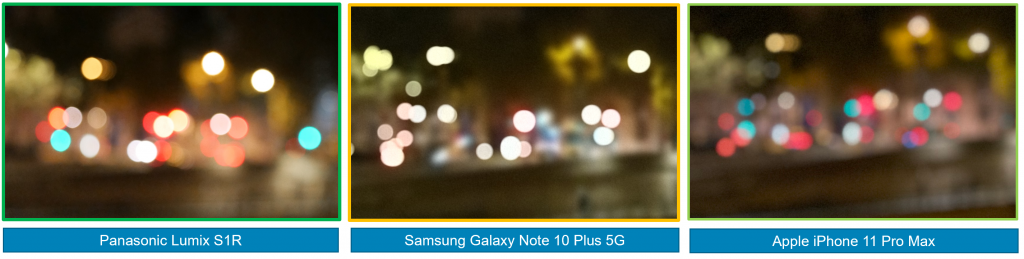

In addition to the problem of artifacts when synthesizing bokeh, the quality of the bokeh effect itself is also a problem for smartphones. Here you can see that while the full-frame camera has natural blur with proper color and shape, the Samsung phone has blur with the proper shape but little color, while the iPhone preserves the color but has an elliptical shape. First we show the entire image, then tight crops on the lights along the street:

Testing HDR: Intuitively, we would expect that the larger sensor of the Panasonic would give it a large advantage in rendering high-dynamic-range scenes. However, this often isn’t the case when compared with modern smartphones, as is shown fairly dramatically in this indoor sample scene:

Given the impressive rendering of the HDR scene by a smartphone, it is fair to ask how smartphones limited to 10 bits of dynamic range can outperform 14-bit full-frame sensors in many situations when capturing high-contrast scenes. The answer is straightforward: by default, the full-frame camera simply captures the scene in a single frame and renders it as it appears, whereas a smartphone typically merges several frames. For a photographer who has taken the time to carefully arrange the lighting of a scene, the single-frame rendering is exactly what is desired. However, even without that kind of preparation, it is easy to see the potential of the full-frame camera’s sensor if you instead shoot HDR scenes in RAW and then use post-processing software to bring all parts of the image into view:
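The multi-frame idea can be sketched in a few lines. Capture the same scene at several exposure times, divide each linear raw value by its exposure time to estimate scene radiance, and average only the samples that are neither clipped nor too close to zero to be reliable. The toy below uses a 10-bit sensor (raw values 0 to 1023), hypothetical thresholds `low` and `high`, and relative exposure times of 1 and 1/16. It is a generic exposure-bracketing merge, not the specific pipeline of any phone tested here.

```python
def merge_exposures(raw_frames, exposure_times, low=16, high=1000):
    """Estimate linear scene radiance per pixel from bracketed raw rows.

    Each raw value is divided by its exposure time. Samples that are clipped
    (>= high) or too dark to trust (<= low) are skipped; `low` and `high`
    are hypothetical thresholds for a 10-bit sensor.
    """
    n = len(raw_frames[0])
    # Frame indices from shortest to longest exposure, used by the fallback.
    order = sorted(range(len(exposure_times)), key=lambda k: exposure_times[k])
    radiance = []
    for i in range(n):
        estimates = [frame[i] / t
                     for frame, t in zip(raw_frames, exposure_times)
                     if low < frame[i] < high]
        if not estimates:
            # No reliable sample: use the longest exposure unless it clipped,
            # otherwise fall back to the shortest one.
            longest, shortest = order[-1], order[0]
            k = longest if raw_frames[longest][i] < high else shortest
            estimates = [raw_frames[k][i] / exposure_times[k]]
        radiance.append(sum(estimates) / len(estimates))
    return radiance


# Three pixels: deep shadow, midtone, bright window.
long_exposure = [8, 200, 1023]   # relative time 1.0: the window clips
short_exposure = [0, 12, 400]    # relative time 1/16: the shadow is lost
print(merge_exposures([long_exposure, short_exposure], [1.0, 0.0625]))
```

Neither frame alone captures both the shadow and the window, but the merged estimate does. The final image then still needs tone mapping to fit that range onto a display, and this is where smartphone processing choices shape the look of the result.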

Is there still a role for digital cameras?

Having looked at the impressive trajectory of smartphone image quality, Guichard then addressed the logical follow-on question of what, if any, role remains for standalone digital cameras—in particular, for the DSLRs and mirrorless models still favored by most professionals and many active amateurs. If smartphones are so good, why are millions still using larger, heavier, more expensive alternatives?

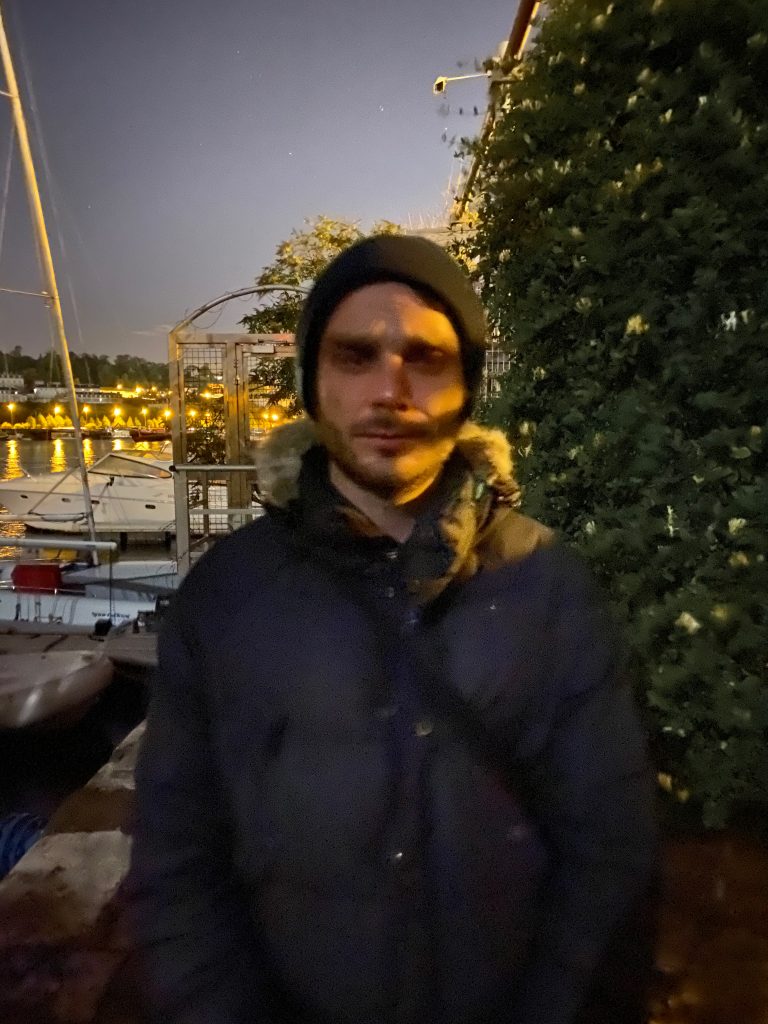

For Guichard, the secret to the longevity of digital cameras is trust. In the hands of someone who knows how to use it, a DSLR can be relied on to render a scene the way the photographer envisions it. An experienced photographer can also learn the limits of their DSLR and know that if they stay within those limits, they can achieve repeatable, quality results. Smartphone algorithms, no matter how clever, are still susceptible to making mistakes or to simply misunderstanding the intention of the photographer.

It’s not hard to find smartphone photo fails like those shown above. Unfortunately, it is hard to predict when they will occur. So for situations where a photographer needs to be sure they will capture the images they need, a standalone digital camera is usually still the tool of choice. That contrasts with smartphone photography, where ease of use is paramount, settings are already chosen, and the photographer is free to simply focus on the content—but results can be hit or miss, and control is limited.

Digital cameras let the photographer tell a story

Photography as a craft isn’t just about capturing reality, it is about telling stories. To do that, photographers rely on the creative freedom they get from a versatile camera that is built to be used in that fashion. Not only do DSLRs and mirrorless cameras feature a wide array of settings, they are designed ergonomically so that those settings can be changed quickly and precisely by someone who has taken the time to learn to use them. In addition, of course, a wide array of lenses and accessories make standalone cameras uniquely versatile tools.

In contrast, smartphones usurp much of the photographer’s creative control, and can sometimes ruin the story a photographer is trying to tell. The following image of Burmese fishermen provides a good example of how a DSLR provides the type of dependable creative control that helps a photographer tell a story:

The photographer chose harsh lighting conditions, with the sun backlighting the subject. Wanting only a silhouette of the fishermen, the photographer deliberately underexposed the image, allowing the sun and sky to retain detail and color that would otherwise be blown out. That also helps isolate the subject against the dark water. There are certainly some minor technical corrections we can make to the original image, such as straightening the horizon and correcting for the specific optics used:

So far, so good. But if we imagine what a smartphone would have done with the scene, we get a very different result. It would likely interpret the scene as a backlit portrait, and use a combination of brighter exposure and local tone mapping to try to correct what it judged to be problems with the photo. The result would look something like this:

Beyond automating the camera, automating the photographer

As with most decisions about photography, there isn’t a single right choice when it comes to determining what types of creative control to delegate to the camera and which to keep under the purview of the photographer. Modern smartphones continue to break new ground both in replacing what a photographer can do with camera settings, and in going beyond them to areas that were previously solely the realm of the photographer’s own creativity. To go further, smartphones have begun to guess the intentions of the photographer. Two examples of this are the “beauty” enhancement capabilities integrated into some smartphones, and features that help press the shutter at the right instant when photographing people:

Image capture aside, smartphone photography’s streamlined workflow brings the photographer automation benefits in another area as well. Beyond assisting with image creation, smartphone photographic ecosystems now include a large number of automatic back-end processing features that make the overall photography experience even more painless.

Innovations in automating photographic workflow using AI, cloud resources, and the unique capabilities of smartphones are happening almost too fast to chronicle. By combining location information, object recognition, AI-based image quality evaluation, and bursts of frames, systems such as the Adobe Cloud, Apple’s iCloud, and Google Photos are able to offer automated image tagging, face recognition, album creation, best shot selection, and suggested shareable stories. The overall effect is that smartphones are essentially curating our memories for us, which is a long way from a photographer crafting a story and employing their camera simply as a straightforward tool for capturing the needed images.
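Of the workflow features listed above, best-shot selection from a burst is the easiest to sketch. The idea is to score every frame and keep the winner. The sketch below uses a classic hand-crafted sharpness score, the variance of a Laplacian filter response, in place of the AI-based quality evaluation these services actually use, which the article does not describe. Frames are small grayscale images stored as lists of rows, at least 3x3 pixels.

```python
import statistics


def laplacian_variance(img):
    """Sharpness score: variance of the 4-neighbor Laplacian over interior pixels.

    Sharp edges give large positive and negative responses (high variance);
    blur or flat areas give responses near zero. Needs an image of at least 3x3.
    """
    h, w = len(img), len(img[0])
    responses = [
        img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    return statistics.pvariance(responses)


def pick_best_shot(burst):
    """Return the index of the frame with the highest sharpness score."""
    return max(range(len(burst)), key=lambda i: laplacian_variance(burst[i]))


# A sharp checkerboard frame between two completely blurred (flat) frames.
sharp = [[100 * ((x + y) % 2) for x in range(5)] for y in range(5)]
blurry = [[50] * 5 for _ in range(5)]
print(pick_best_shot([blurry, sharp, blurry]))
```

A single sharpness number cannot tell whether someone blinked or looked away, which is exactly the kind of judgment that learned quality models are meant to add on top of simple metrics like this.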

DSLR owners can shoehorn their images into those systems—although often without all the metadata needed for full functionality—but it is an area where smartphone vendors are moving much more quickly than camera vendors. Combined with their advances in image quality, these innovations are bringing smartphones very close to delivering on one of the first promises made to consumers by the photographic industry.

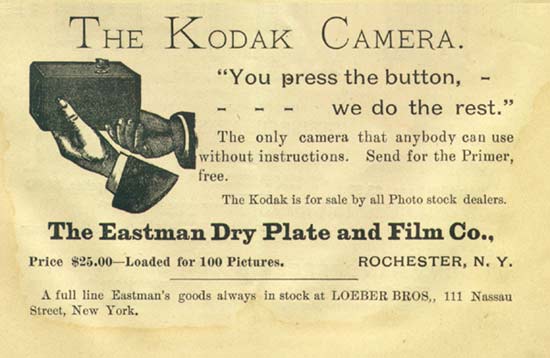

Smartphones: Delivering on Kodak’s original promise

George Eastman developed the first consumer camera in 1888. This camera came equipped with a 100-shot roll of film; the photographer simply clicked the shutter, wound the roll forward, and then sent the entire camera back to the factory when it ran out. In return, they’d receive prints of their images and a newly-loaded camera. Along with the camera came the promise, “You press the button, we do the rest.” For many if not most people, modern smartphone photography has finally delivered on that 130-year-old promise.

Memory maker or storyteller?

We have seen how advances in technology helped smartphone cameras surpass compact cameras in image quality and many other capabilities over the course of their first decade, despite their small size. In their second decade, taking advantage of their unique computational imaging capabilities, they have begun to push past even DSLRs in many areas, including automatic image enhancement and organization. However, that automation also makes smartphone cameras harder to predict and difficult to rely on for repeatable results, which leaves a market role for more traditional digital cameras.

The landscape photographer Ansel Adams once said, “There are always two people in every picture: the photographer and the viewer.”

As Guichard sees it, photographic technology will eclipse the role of the photographer for many, but not for all, photographers:

“Smartphones have already completely automated photography. The next logical step is to automate the photographer. This is good: people can enjoy a personal digital photographer to capture their memories. But there will always be those who would rather have a trustworthy tool to tell their own stories.”

While smartphones are increasingly good at painlessly capturing memories, and even turning them into shared experiences, some photographers will always want to tell their own stories and keep creative control of their images. Standalone digital cameras such as DSLRs, mirrorless models, and of course larger formats allow them to do that. So, at least for the foreseeable future, these cameras will keep a place in the hearts and minds of many.
