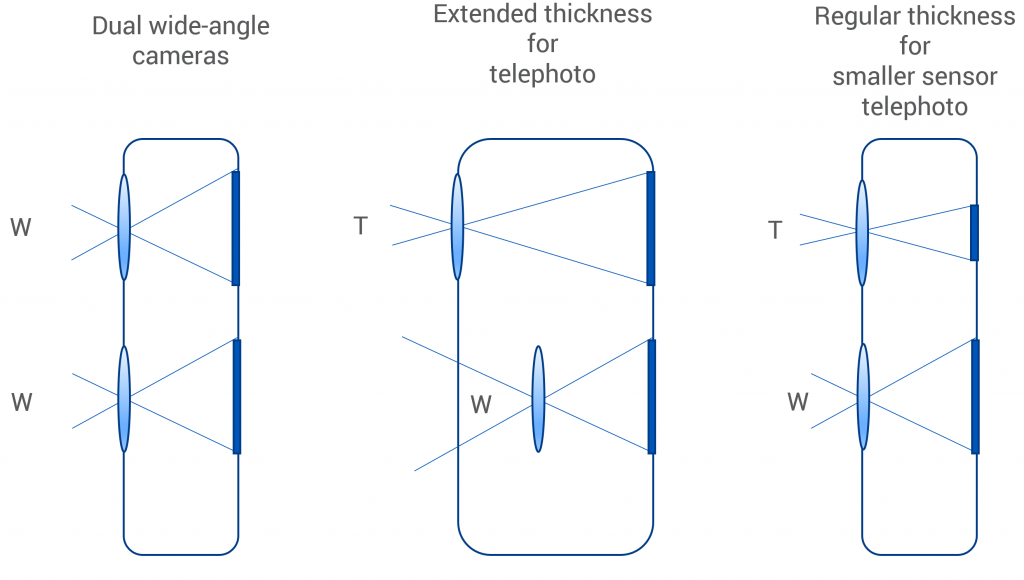

With the growing importance of camera performance to smartphone makers and users, manufacturers have worked hard to add features and to improve image quality. However, users also want thin phones, which greatly limits the size of the individual camera modules that can be used. The small Z-height, as the industry refers to the thickness of a phone, has led designers to make use of the larger width and height of phones by adding more cameras to their designs.

Having multiple cameras has made an array of new features possible, including zoom, better HDR, portrait modes, 3D, and low-light photography, but it has also presented new challenges. We’ll take a look at how multi-camera phones have evolved, at how they’ve improved the photo experience for phone owners, and at the challenges that phone makers have had to tackle to make them work. In each case, it is important to keep in mind that it is still early in the development of multi-camera smartphones, so expect to see rapid progress in the technology and the features it enables over the coming years.

Telephoto and zoom

Until the introduction and market success of the Apple iPhone 7 Plus in 2016, zooming in on a smartphone was almost always entirely digital. Starting with Samsung’s Galaxy S4 and S5 Zoom, a few specialized phones had an optical zoom (made possible by their bulky design), but most popular models offered only a limited-quality, digital zoom capability that used some combination of cropping and post-capture resizing.
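To make the "cropping and post-capture resizing" concrete, here is a minimal sketch of a digital zoom in plain Python. The function name `digital_zoom` and the nearest-neighbor resize are illustrative assumptions; real camera pipelines use more sophisticated interpolation, but the underlying limitation is the same.

```python
def digital_zoom(image, factor):
    """Center-crop `image` by `factor`, then resize the crop back to the
    original dimensions using nearest-neighbor sampling.

    `image` is a list of rows, each row a list of pixel values.
    (Hypothetical helper, for illustration only.)
    """
    height, width = len(image), len(image[0])
    crop_h = max(1, round(height / factor))
    crop_w = max(1, round(width / factor))
    top = (height - crop_h) // 2
    left = (width - crop_w) // 2

    zoomed = []
    for y in range(height):
        # Map each output row back to a row inside the cropped region.
        src_y = top + min(crop_h - 1, y * crop_h // height)
        row = []
        for x in range(width):
            src_x = left + min(crop_w - 1, x * crop_w // width)
            row.append(image[src_y][src_x])
        zoomed.append(row)
    return zoomed


# A 4x4 test image whose pixel values encode their (row, column) position.
image = [[10 * y + x for x in range(4)] for y in range(4)]
zoomed = digital_zoom(image, 2)
distinct_values = len({value for row in zoomed for value in row})
```

At 2x, the 16 output pixels are built from only the 4 central input pixels, which is why a purely digital zoom cannot add detail.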

The physics of lens design makes it very difficult to fit a zoom lens into the thin body of a high-end smartphone. So over the last two years, almost all flagship phones have moved to a dual-lens-and-sensor design for their rear-facing camera instead of trying to add an optical zoom. Most, including Apple, OnePlus, HTC, Xiaomi, Motorola, Nokia, and Vivo models, use a traditional camera module paired with a 2x telephoto module, although the Huawei Mate 20 Pro and P20 Pro make use of a 3x telephoto.

The most obvious advantage of having a dedicated telephoto camera module is better images at long focal lengths. At the native focal length of the telephoto camera, the camera uses a typical pipeline to process and render the image at the sensor’s native resolution. This provides a better result than cropping and scaling the image from the main camera.

Images from the two camera modules can be blended to create improved results even at non-native focal lengths, but this presents some unique image processing challenges. For example, the preview image displayed to the user is taken from one camera module or the other, but needs to switch smoothly between the two modules as the user zooms in or out. For that to happen, the images from the two modules need similar exposure, white balance, and focus distance. Because the two modules are slightly offset from each other, the preview needs some realignment to minimize the shift in the image when the phone switches from one camera module to the other. The results are definitely not as seamless as when using a dedicated zoom lens on a standalone camera, but it’s amazing that it’s possible at all for such a small device.
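The switching logic behind the preview can be sketched roughly as follows. This is a simplified illustration, not any manufacturer's implementation: the function name `select_module` and the default 2x telephoto are assumptions, and a real phone may also weigh light level, focus distance, and other factors before switching.

```python
def select_module(zoom, tele_native=2.0):
    """Pick the camera module for a requested zoom factor and return the
    digital zoom still needed on top of that module's native view.

    Returns a (module_name, residual_digital_zoom) tuple.
    (Hypothetical helper; `tele_native` is the assumed telephoto factor.)
    """
    if zoom < 1.0:
        raise ValueError("zoom must be at least 1.0")
    if zoom >= tele_native:
        # The telephoto module covers this zoom; only a small crop remains.
        return "tele", zoom / tele_native
    # Below the telephoto's native factor, crop the main camera instead.
    return "main", zoom


print(select_module(1.5))  # main camera with a 1.5x digital crop
print(select_module(3.0))  # telephoto with a 1.5x digital crop
```

The hand-off at the telephoto's native factor is the point where exposure, white balance, focus, and alignment between the two modules have to match for the transition to look smooth.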

The next step in zoom: Folded optics

In the same way that it is difficult to fit a zoom lens within the thickness of a phone, the longer focal lengths of telephoto lenses require a combination of clever lens design and smaller sensors to fit. This means that the thickness of the phone also limits lens focal lengths when they are oriented in the usual fashion—that is, facing out from the phone’s surface. As a result, phone makers have been able to achieve only 2x or 3x optical zoom by using the traditional sensor-lens orientation in a smartphone.

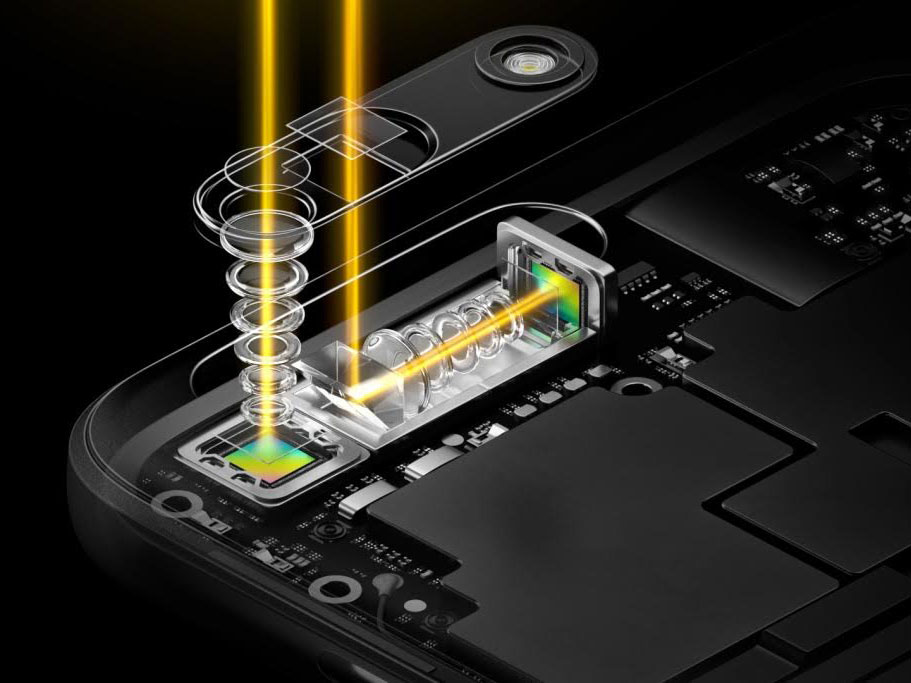

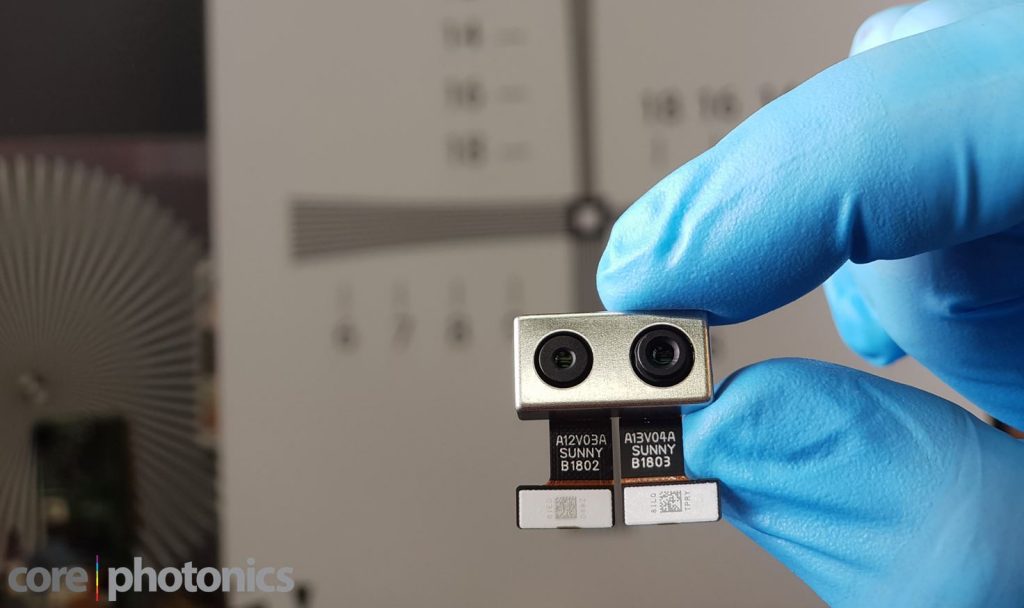

Folded optics is a way to overcome the focal length limit that the phone’s thickness imposes. The camera module sensor is placed vertically within the phone and aimed at a lens with an optical axis that runs along the body of the phone. A mirror or prism is placed at the correct angle to reflect light from the scene into the lens and sensor. Initial designs have a fixed mirror suitable for dual-lens systems such as the Falcon and Hawkeye products from Corephotonics, which combine a traditional camera and a folded telephoto design in a single module. But designs from companies such as Light.co, which use movable mirrors to synthesize the images from multiple cameras, are also beginning to enter the market.

Zooming out: Wide-angle capability

Although wide-angle capability is not as much in demand as telephoto, photographers moving from standalone cameras have also missed the ability to shoot wide-angle photos. LG pioneered adding a second, ultra-wide camera to phones as far back as its G5 in 2016, and Asus followed suit with an ultra-wide camera in its ZenFone 5. More recently, LG and Huawei have shipped models featuring a triple rear camera that includes traditional, telephoto, and ultra-wide lenses.

Better telephoto and wide-angle images are only the beginning of what smartphone makers have accomplished with the addition of a second camera module.

Portraits, depth estimation, and bokeh

The longer effective focal length of the telephoto camera also makes for less distorted faces when shooting portraits, as you can photograph the subject from twice as far away for the same framing, removing some of the unpleasant effects of photographing people close-up using a wide-angle lens.

By having two slightly offset cameras, it is also possible for the phone to estimate the depth of objects in the scene. This process starts by measuring how far each object shifts between the images from the two cameras, an effect called parallax. Objects close to the cameras shift noticeably between the two images, while objects farther away shift much less. You can see this for yourself by holding up a finger in front of your face and noticing how much it appears to move when you look at it first with one eye closed and then the other, compared to a distant object that hardly seems to move at all.
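For an idealized pair of identical, parallel cameras, the relationship between parallax and distance is the standard pinhole-stereo formula: depth = focal length × baseline / disparity. The sketch below applies it; the function name and the example numbers (a 3000-pixel focal length and a 10 mm spacing between modules) are assumptions chosen for illustration, not measurements from any particular phone.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Estimate distance (in meters) from the pixel shift of an object
    between two parallel cameras, using depth = f * B / d.

    (Hypothetical helper; assumes rectified, identical cameras.)
    """
    if disparity_px <= 0:
        # No measurable shift: the object is effectively at infinity.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


FOCAL_LENGTH_PX = 3000  # assumed focal length expressed in pixels
BASELINE_M = 0.01       # assumed 10 mm spacing between the two modules

near = depth_from_disparity(60, FOCAL_LENGTH_PX, BASELINE_M)  # about 0.5 m
far = depth_from_disparity(6, FOCAL_LENGTH_PX, BASELINE_M)    # about 5 m
```

A tenfold drop in disparity corresponds to a tenfold increase in distance, which is why distant objects, with shifts of only a pixel or two, are the hardest to place accurately.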

Adding the ability to estimate the depth of objects in a scene allowed the introduction of specialized Portrait modes in multi-camera phones to keep the subject sharp, while giving the background a pleasing blur. Standalone cameras with wide-aperture lenses automatically do this because of how the optics work, but the smaller smartphone sensors require in-camera post-processing of the images to achieve a similar effect.
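In its simplest form, the post-processing step amounts to blurring only the pixels that the depth map places behind the subject. The following is a deliberately naive sketch under that assumption: `portrait_blur` is a hypothetical helper, it works on a grayscale image stored as nested lists, and it uses a plain box blur rather than anything resembling real lens bokeh.

```python
def portrait_blur(image, depth, max_subject_depth, radius=1):
    """Blur every pixel whose depth exceeds `max_subject_depth`,
    leaving nearer (subject) pixels untouched.

    `image` and `depth` are same-sized lists of rows.
    (Hypothetical helper using a simple box blur.)
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(height):
        for x in range(width):
            if depth[y][x] <= max_subject_depth:
                continue  # subject pixel: keep it sharp
            total, count = 0.0, 0
            # Average the pixel with its neighbors inside the image bounds.
            for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                    total += image[ny][nx]
                    count += 1
            out[y][x] = total / count
    return out


image = [[0, 0, 100, 100]]  # one row: dark subject, bright background
depth = [[1, 1, 5, 5]]      # assumed distances in meters
result = portrait_blur(image, depth, max_subject_depth=2)
```

Note that the background pixel next to the subject is averaged with a subject pixel, so the subject's tone bleeds into the blur. This kind of edge artifact is one reason production Portrait modes need far more careful processing than this sketch.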

Smartphone makers have had to solve several big challenges to create believable Portrait modes, though. First, the simple way depth is measured means the camera can be fooled, resulting in accidental blurring of the subject, or leaving objects in the background sharp. Second, bokeh—the natural blurring achieved by using wide-aperture optics—is surprisingly hard to replicate computationally. That makes creating a pleasing blur for the background difficult. Third, each of the multiple cameras has a slightly different perspective, and perhaps different shutter speeds, so the careful alignment of the images and removal of ghosting artifacts is an ongoing issue. Finally, as with most of the applications for multiple cameras, the two cameras need to be carefully synchronized to ensure that both images are taken at the same instant so as to minimize any motion artifacts.

While having two complete cameras may be the best way of achieving these effects in a smartphone, it isn’t the only way. Google’s Pixel 2 and Pixel 3 phones, for example, use the dual pixels on their Sony sensors to read images from the left and right halves of each pixel separately, essentially creating two virtual cameras that are slightly offset. The image created from the left side of the pixels is shifted by a mere millimeter in perspective from the right, but that is enough for Google to use the differences between the two as an initial estimate of depth. The phones then combine a burst of images with some clever AI to generate a more accurate depth map and, finally, to blur the background.

Adding a second camera to enhance image detail

Because camera sensors don’t record color on their own, they require an array of pixel-sized color filters over them. As a result, each pixel (a.k.a. photosite) records only in one color—usually red, green, or blue. Pixel output is combined in a process called demosaicing to create a usable RGB image—but this design entails a couple of compromises. First is a loss in resolution caused by the color array; and second, since each pixel receives only some of the light, the camera isn’t as sensitive as one without a color filter array. So some smartphone models, such as the Huawei Mate 10 Pro and P20 Pro, have a second monochrome sensor that can capture and record in full resolution all the available light. Combining the image from the monochrome camera with the image from the main RGB camera creates a more detailed final image.
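The resolution cost of the color filter array is easiest to see in the crudest possible demosaicing scheme, sketched below. It assumes an RGGB filter layout (one red, two green, and one blue photosite per 2x2 cell) and collapses each cell into a single RGB pixel. The function name is hypothetical, and real pipelines interpolate the missing colors to recover a full-resolution image rather than halving it.

```python
def demosaic_half_res(raw):
    """Turn a raw mosaic into RGB by collapsing each 2x2 cell into one pixel.

    Assumes an RGGB layout per cell:
        R G
        G B
    (Hypothetical helper; real demosaicing interpolates to full resolution.)
    """
    rgb = []
    for y in range(0, len(raw) - 1, 2):
        row = []
        for x in range(0, len(raw[0]) - 1, 2):
            red = raw[y][x]
            # Two green photosites per cell: average them.
            green = (raw[y][x + 1] + raw[y + 1][x]) / 2
            blue = raw[y + 1][x + 1]
            row.append((red, green, blue))
        rgb.append(row)
    return rgb


raw = [
    [200, 100, 180, 90],
    [110, 50, 70, 40],
]
rgb = demosaic_half_res(raw)  # [[(200, 105.0, 50), (180, 80.0, 40)]]
```

Eight photosites yield only two full-color pixels here, and each photosite saw just one color band of the incoming light. A monochrome sensor has neither limitation, which is what makes it useful as a companion to the RGB camera.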

While a second monochrome sensor is ideal for this application, it isn’t the only option. Archos does something similar, but uses a second, higher-resolution RGB sensor. In both designs, because the two cameras are offset, the process of aligning and combining the two images is complex, so the output image typically isn’t as detailed as the higher-resolution monochrome version, but it is an improvement over an image captured with a single camera module.

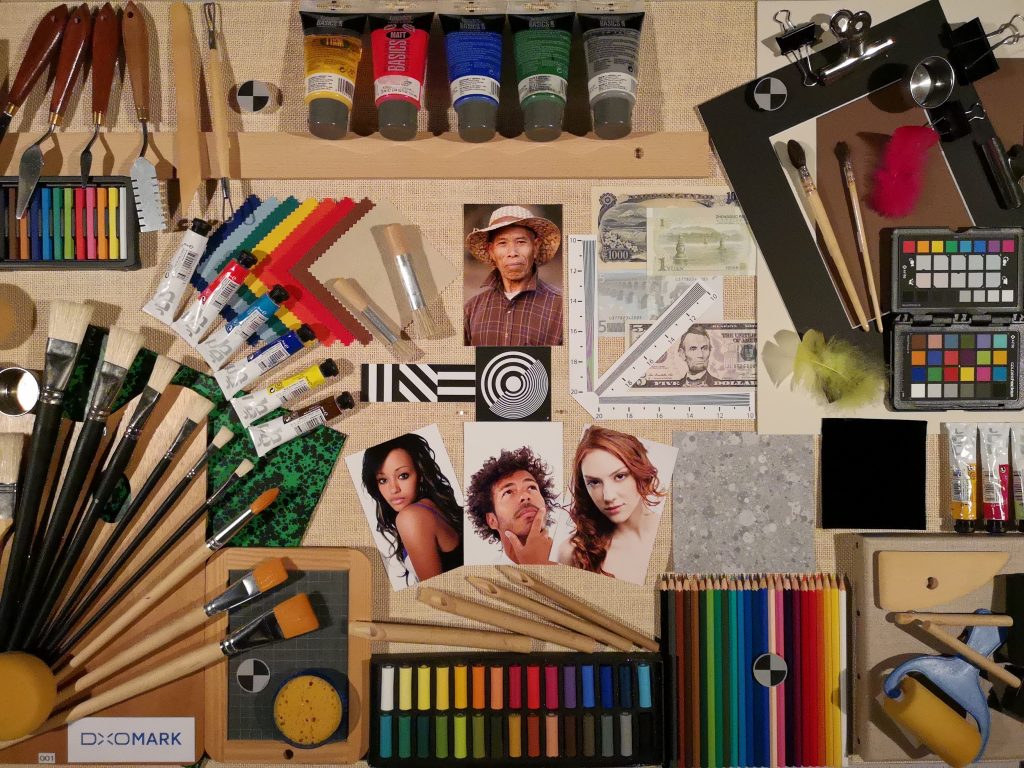

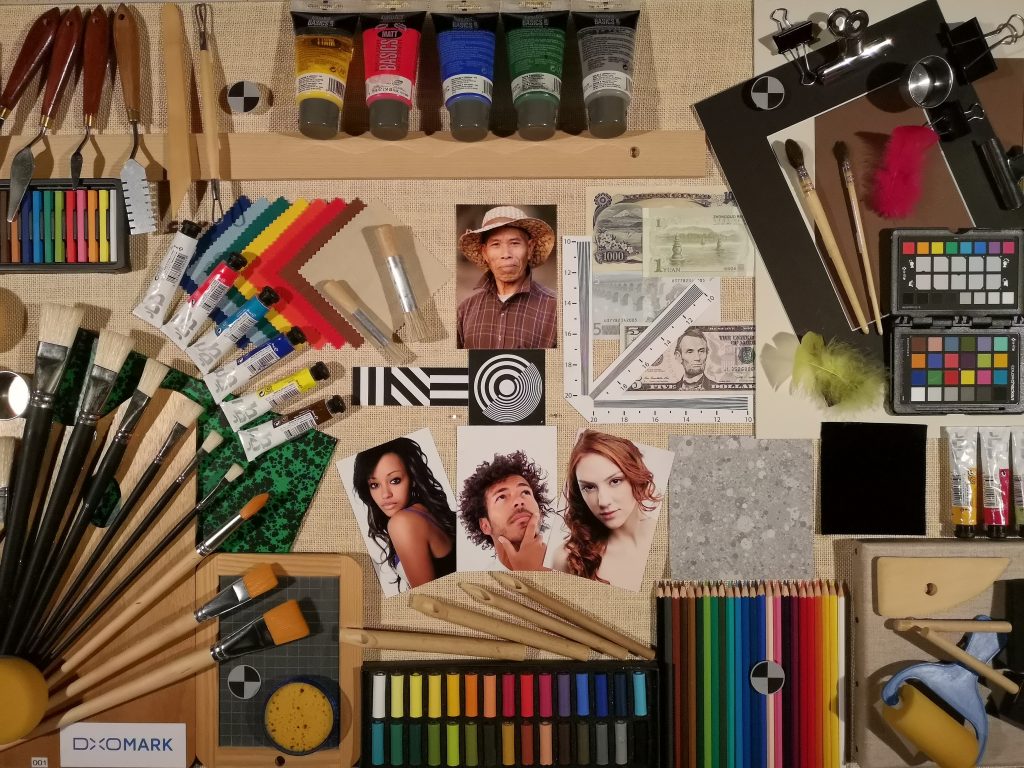

Another way to use an additional camera module is to combine the image from the more light-sensitive monochrome camera with the full-color image from the RGB camera to provide better images in low-light and high-contrast scenes. Here, too, artifacts resulting from the alignment issues between the two images create challenges for implementing this imaging pipeline. Looking at the accompanying example images, you can see that while the addition of the monochrome sensor improves the image in any lighting condition, the difference is more noticeable in low light.
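One simple way to think about this kind of fusion is to take brightness from the monochrome pixel and color from the RGB pixel. The sketch below does that for a single, already-aligned pixel pair; it is an illustrative assumption rather than the method any phone maker uses, the helper names are hypothetical, and it sidesteps the alignment problem entirely. The luma weights are the standard Rec. 601 coefficients, and values are assumed to be 8-bit (0 to 255).

```python
def luminance(red, green, blue):
    """Approximate perceived brightness using Rec. 601 luma weights."""
    return 0.299 * red + 0.587 * green + 0.114 * blue


def fuse_pixel(rgb, mono):
    """Rescale an RGB pixel so its brightness matches the monochrome pixel
    while keeping the original color ratios.

    (Hypothetical helper; assumes the two pixels show the same scene point.)
    """
    luma = luminance(*rgb)
    if luma == 0:
        # No color information to preserve: fall back to neutral gray.
        return (float(mono), float(mono), float(mono))
    gain = mono / luma
    # Clip to the assumed 8-bit range; clipping can shift the hue slightly.
    return tuple(min(255.0, channel * gain) for channel in rgb)


fused = fuse_pixel((100, 50, 25), mono=120)
```

The fused pixel keeps the 4:2:1 ratio between its red, green, and blue values, but its brightness now follows the more light-sensitive monochrome sensor.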

Augmented reality (AR) applications

Once a phone has used the differences in images from its cameras to create a map of how far things are from it in a scene (commonly called a depth map), it can use that map to enhance various augmented reality (AR) applications. For example, an app could use the depth map to place and display synthetic objects on surfaces in the scene. If done in real time, the objects can move around and seem to come alive. Both Apple with ARKit and Android with ARCore have provided AR platforms for phones with multiple cameras (or in the case of Pixel phones, devices that can create depth maps using dual pixels).

However, a second photographic sensor isn’t the only way to use multiple camera modules to measure depth: as far back as 2014, HTC shipped a model with a dedicated depth sensor. Over time, dedicated depth sensors using Time of Flight (TOF) or other technologies may prove to be a more efficient way to generate the relatively granular depth maps needed for AR, but that, too, would be another example of using multiple camera modules to address an emerging application for smartphones.

Module suppliers step up with multi-camera solutions

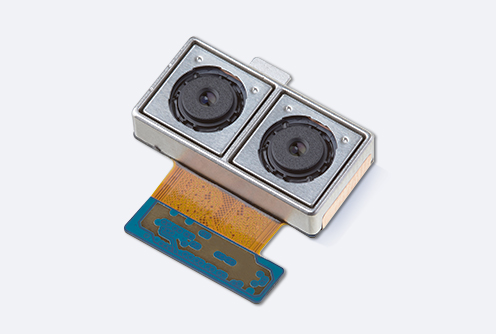

Makers of camera modules have made it much easier for phone manufacturers to add multiple camera module solutions to their designs. In addition to such technology providers as Corephotonics and Arcsoft, camera module vendors, including Samsung Electro-Mechanics, Sunny Optical, O-Film, Foxconn Sharp, Q-Tech, LuxVisions, and others, offer an array of solutions for various combinations of multiple cameras in a single module, complete with image processing libraries. So expect to see multiple camera modules in an increasing number of new phones over time.

Using multiple cameras to capture stereo and light fields

In the same way that our two eyes provide us with a stereo image of what is in front of us and allow us to create a 3D model of the scene, a phone with two aligned cameras can use them to create a stereo image. Phones with stereo cameras go back as far as the 2007 limited-distribution Samsung SCH-B710. HTC, LG, Sharp, and ZTE have also produced models. Those phones, and quite a few others, have shipped with stereoscopic (3D) displays for viewing the resulting 3D images.

To work well, stereo capture using a smartphone camera has to address the complications stemming from the phone’s small form factor. Unlike dedicated stereo capture devices that separate their cameras by a distance similar to that between our eyes, the two camera modules used on a smartphone are typically very close together, giving them a very small baseline. Thus, creating believable images that mimic the way our two eyes would have captured them presents an additional challenge for these companies to solve. The two cameras also need to be carefully synchronized so that they don’t produce visible artifacts from capturing the two images at slightly different times.
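A quick calculation shows how much the baseline matters. In an idealized parallel-camera model, the pixel shift of an object between the two views is focal length × baseline / distance, so it scales directly with the spacing between the cameras. The numbers below are assumptions for illustration: a 3000-pixel focal length, a 10 mm spacing between phone camera modules, and roughly 65 mm as a typical distance between human eyes.

```python
def disparity_px(depth_m, focal_length_px, baseline_m):
    """Pixel shift between two parallel views of an object at `depth_m`,
    using d = f * B / Z. (Hypothetical helper, idealized pinhole model.)
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_length_px * baseline_m / depth_m


FOCAL_LENGTH_PX = 3000   # assumed focal length expressed in pixels
PHONE_BASELINE_M = 0.01  # assumed spacing between phone camera modules
EYE_BASELINE_M = 0.065   # assumed typical spacing between human eyes

subject_distance_m = 2.0
phone_shift = disparity_px(subject_distance_m, FOCAL_LENGTH_PX, PHONE_BASELINE_M)
eye_shift = disparity_px(subject_distance_m, FOCAL_LENGTH_PX, EYE_BASELINE_M)
```

Under these assumptions, the phone records about 15 pixels of parallax for a subject two meters away, where an eye-width rig would record about 97.5. The stereo effect has to be reconstructed from a much weaker signal.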

Camera and now phone maker RED has pushed this concept even further by using stereo cameras on its Hydrogen One phone, along with specialized software from HP spinout Leia, to create depth for the two different orientations of the device, along with a synthetic bokeh effect. The result is images with 16 possible views. The company calls the technology 4-View because it supports four different views in each direction, with highlights carefully mapped between views as needed. Users can then view these images (and video) on the phone’s specialized “4V” display, also powered by Leia technology. As users move their head or the phone, they see different views. When 4-View works well, the result is a very immersive experience that doesn’t require a special VR (virtual reality) or AR headset to view.

The 3D images are generated in the following way: the two cameras of the Hydrogen phone capture a standard stereo pair of images. Then, four images are computed from the stereo pair, one for each of the four virtual points of view used by the 3D display. The animated GIF below gives you an idea of what the final result looks like on the Hydrogen phone and its Leia-powered Lightfield display.

Additionally, Leia has developed a simulated bokeh effect for the Hydrogen phone’s 3D images. What’s tricky is that the bokeh effect needs to be consistent across the four generated views. With the current version, this does not always work reliably, but when it does, the result looks really immersive.

More cameras require a lot more processing power

Space and cost aren’t the only constraints on how many cameras can be packed into a smartphone. Processing power is also a limiting factor. Processing multiple image streams is substantially more complex than working with captures from a single camera. Not only do all the images have to be processed as they would normally, but additional work is needed to align them properly, to merge them in a way that minimizes artifacts, and then to perform whatever specialized actions are needed, such as creating bokeh or tone-mapping for low light, to create the final photograph for display.

Looking ahead: Expect more cameras on future phones

As smartphone makers compete to outdo each other with additional photographic capabilities, and to extend the power of their cameras into such new realms as AR, we’re likely to see additional camera modules on both the fronts and rears of future devices. Advances in software make it increasingly possible for several smaller cameras to do the work of one larger camera, and the small size and thin shape of smartphones (and mobile devices in general) make the use of small modules a necessity.
